Data Mining Algorithms

What is Data Mining Algorithm?

A data mining algorithm can be understood as a set of heuristics and calculations that are used for creating a model from a data. There are various data mining algorithms as algorithms are very popular, helpful and extensively used in various industries and businesses in different processes.

Why Algorithms are used in Data Mining?

There are many reasons why algorithms are used in Data Mining. The list below comprises of a few reasons why usage of algorithms in Data Mining is important.

- For Big Data, this is because of the reason that big data needs to be diverse, fast changing and unstructured. The algorithms help the user achieve all these factors.

- For Facebook, as there a lot of terabytes (around 600 TB) of new data on this social networking site every day.

- To meet various other challenges like organizing and categorizing some heavy data.

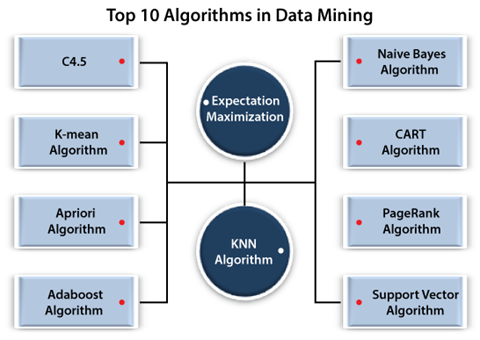

Top 10 Algorithms in Data Mining

The list below comprises of top 10 data mining algorithms that are commonly used in data mining:

C4.5:

The C4.5 algorithm is basically used for Data Mining as a Decision Tree Classifier which can be further used to obtain a decision on the basis of a certain sample of a data. This algorithm is very popular amongst the users in data mining.

Before, diving deeper into what C4.5 is, there is term classifier which was used above. This term refers to a data mining tool which before anything else, draws the data that needs to be classified and then predicts that class of the new data.

C4.5 is advantageous over other data mining algorithms such as decision tree systems. This is because of a number of reasons like C4.5 algorithms are able to work on both type of data that are discrete and continuous.

Nonetheless, one should also pay attention to the fact that C4.5 cannot be regarded as the best algorithm. At the same time, it can be proven beneficial to the users and in some of the cases also.

K – means Algorithm:

K- means algorithm is one of the simplest algorithms to learn. It is very efficient method for solving problems that arises in the process of clustering. This algorithm is an iterative algorithm that partitions the data set into K (which is also clusters). The K- means algorithm works in the following steps:

- First step is to classify the number of clusters I.e., K.

- Initializing the centroids (which refers to the data point at the center of the clusters). This could be done by mixing the Data Set and selecting K randomly for the centroids without the replacement)

- In the final step, the user will have to keep iterating until there is no change to the centroid (which means assignment of data points to clusters is not changing).

Apriori Algorithm:

Apriori Algorithm works by the learning of association rules. Association rules can be defined as a technique in data mining that is used for establishing correlations between the variables in database.

The name “Apriori” is given to it because it requires prior knowledge of frequent itemset properties. In this algorithm, a property called Apriori property is used for improving the efficiency of level- wise generation of frequent item sets. The apriori property is “All subsets of a frequent itemset must be frequent”.

Usually, the algorithm is used on a database that contains a large number of transactions, the example for this could be the items that are bought from a grocery store.

There are two terms which are very commonly used in this algorithm. Those terms are:

- Support: this means the frequency of occurrence.

- Confidence: this is a conditional probability.

Apriori Algorithm is simple and easy to understand. Nonetheless, it also has some limitations. The main limitation is that it requires many Database scans and in addition to that, it is very slow also.

Adaboost Algorithm:

Adaboost Algorithm is an abbreviation for “adaptive boosting”. This algorithm basically works by constructing a classifier. This classifier is a data mining tool that draws data and predicts the class of the data on the basis of the inputs.

Boosting is a learning algorithm that draws multiple learning algorithms such as decision trees and merges them together. This is a supervised learning algorithm. Adaboost algorithm is simple and it is very easy to understand. Additionally, this algorithm is also quicker. There are a ton of implementations of Adaboost in real – world.

- It is used for predicting customer churn.

- It is used for classifying the types of topics the clients are talking about.

- It is also used for solving classification problems in languages such as R and Python.

Although, this algorithm was developed in 1996, it is still extensively used in various industries and businesses.

Naïve Bayes Algorithm:

Naïve Bayes Algorithm is a group of classification algorithms. It is regarded as a supervised learning algorithm. It is not a single algorithm. But it is a collection of algorithms where the cause or aim is the same I.e., every pair of features being classified is independent of each other.

This algorithm, as the name suggest, is based on Bayes theorem. The Bayes theorem helps in finding the probability of an event occurring given the probability of another event has already occurred.

which implies- P (A|B) = P (B|A). P(A)

P(B)

Where A and B are the events.

The Naïve Bayes Algorithm works on the assumption of independence among the predictors. The main Naïve Bayes Algorithm is quick, highly scalable and easy to implement algorithm.

At the same time, the main limitation is that assumption because in the real- world scenario independence among the predictors is never true.

Classification and Regression Trees algorithm

CART is shortening of classification and regression trees. It is also one of the most popularly used algorithms in data mining. The main objective/aim of this algorithm is to create a model that predicts the value of a dependent variable on the basis of the inputs or the values.

This algorithm was developed for the intention of giving a name to the types of decision trees which are given below:

- Classification trees: The context of this term refers to the dependent variable that is categorical and the tree predicts the class.

- Regression trees: The context of this term refers to the target variable that is continuous and tree is used to predict the value.

The model of Classification and Regression trees is considered predictive due to the reason that it is likely to explain the outcome of a value of a variable in this model.

PageRank Algorithm

This algorithm was published by Sergey Brin and Larry Page. The two of them are also the creators or developers of the most popular search engine Google. This algorithm also comes under this search engine. This algorithm is used to reach the importance of some objects which are linked with the network of objects using another algorithm called link analysis algorithm.

The link analysis algorithm is a type of network analysis that is used for exploring the various links among the different objects.

The most common example of the PageRank algorithm is Search Engine of Google.

Since PageRank was mainly created for the World Wide Web. The few applications of this algorithm are given below:

- In order to determine which species are critical so as to sustain the ecosystem.

- In twitter, the feature Who to Follow was developed for the purpose of easily finding people to follow in Twitter.

This algorithm is an unsupervised learning algorithm due to its usage in discovering the relevance of a web page.

Support Vector Machines

At high level, the SVM or the Support Vector Machines are very much similar to C4.5 algorithm. But the Support Vector Machines do not use any decision trees. The support vector machines learn a hyperplane so as to classify the data into two different classes.

A hyperplane can be defined as decision boundaries that are used to classify the data points. The Data points that drop on any side of the hyperplane can be allotted or assigned to different classes.

This algorithm is a supervised learning algorithm as the data set has to teach the support vector machines about classes and then only the SVM or the support vector machines can classify the new data.

The implementations of the SVM or Support Vector Machines are various which are given below:

- Scikit- learn: Scikit- learn is a free software machine learning library that is used in Python programming language. It contains several classification, regression and clustering algorithms.

- MATLAB: MATLAB is a high-performance language. It is not freely available but it is a very easy to learn language.

- LIBSVM: LIBSVM is a commonly used and open- source machine learning libraries, which was developed at the National Taiwan University. It is written in C++ language.

Expectation Maximization

Expectation Maximization or EM is usually used as a clustering algorithm just like K- means. This is used for the objective of knowledge discovery. Expectation Maximization is very helpful with clustering. It starts by taking model parameters followed by three steps iterative process which are:

- E- Step: Calculate the probability for assignments of each data point to a cluster.

- M- Step: Updates the model parameters with the help of cluster assignments from the previous step.

- Repeat: Repeat the steps prior to the convergence of parameters and cluster assignments.

This is an unsupervised learning approach because it does not provide the class information. It has various implementations, such as:

- Weka: Waikato Environment for Knowledge Analysis was developed at the University of Waikato in New Zealand. It is a free software which has a collection of machine learning algorithms for various data mining tasks.

- Scikit- learn: Scikit- learn is a free software machine learning library. It is used in Python programming language.

K-Nearest Neighbors:

K- Nearest Neighbors or also known as KNN is an algorithm that is used for the objective of finding the similarity between the one data set and the other.

It is a supervised learning algorithm because K- nearest neighbor is given a labelled training data set.

The K- nearest neighbors has a number of properties, such as:

- Lazy learning algorithm: KNN is a lazy learning algorithm because of the reason that it does not have a specialized training phase and uses all the data for training while classification.

- Non-parametric learning algorithm: KNN is also a non-parametric learning algorithm. This is due to the reason that it does not assume anything about the underlying data.

KNN or K- nearest neighbor has various applications; some of them are given below:

a. Banking System: KNN can be used in banking system to predict whether a person covers all the requirements for loan approval.

b. Calculating Credit Ratings: KNN algorithms can also be used for finding a person’s credit rating.