Data Pre-processing in Data Mining

What is the meaning of Data Pre-processing?

Data Pre-processing is a crucial phase in Data Mining. However, it is often neglected, and that should never happen.

Data Pre-processing can be defined as a technique that transforms the raw, or low-level, data in a data set into a form that is easy to understand and work with. It is a very beneficial step in Data Mining.

Raw data is usually incomplete and inconsistent, which increases the chances of error and misinterpretation.

This is where Data Pre-processing comes into play. It is an efficient and well-established way of resolving these kinds of problems before the data is mined.

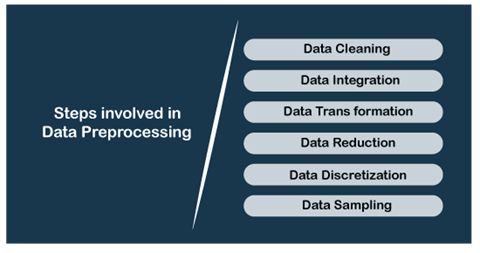

Various Steps involved in Data Pre-processing:

Listed below are the steps involved in Data Pre-processing:

Data Cleaning:

The first and foremost step in data pre-processing is data cleaning. As the section above explains, the given data is raw, and its irrelevant or missing parts must be dealt with before it can be mined. Data cleaning serves that purpose.

Data cleaning is carried out through several routines that the data should pass through. These routines are described below:

- Missing Values: Sometimes, values are missing from a data set. There are a couple of solutions for this, listed below:

- Ignore the tuples: This method is not very effective; it is mainly useful when a tuple has several attributes with missing values.

- Fill in the missing values: The missing values are filled in, either by hand or automatically. Filling them in manually is time-consuming and may not be feasible for a large data set. This can be done in three ways: manually, by using the attribute mean, or by using the most probable value.

- Noisy Data: Noise can be defined as a random error or variance in a measured variable. It is usually caused by faulty data collection, data entry errors, and similar problems. It can be handled in the following ways:

- Binning Method: This method smooths sorted data by consulting the values around each value. The sorted values are divided into segments (bins) of equal size, and each value in a bin is then replaced either by the bin mean (smoothing by bin means) or by the closest bin boundary (smoothing by bin boundaries).

- Regression: The data is smoothed by fitting it to a regression function. The regression can be linear or multiple: linear regression uses only one independent variable, whereas multiple regression uses several independent variables.

- Clustering: Similar values are organized into groups, or “clusters”. Values that fall outside every cluster can then be detected as outliers.
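The missing-value and binning routines above can be sketched in a few lines of Python. This is a minimal illustration, not a library API: the function names `fill_with_mean`, `smooth_by_bin_means` and `smooth_by_bin_boundaries` are hypothetical, `None` is assumed to mark a missing entry, and the bins are equal-size (each holds the same number of sorted values).

```python
from statistics import mean

def fill_with_mean(values):
    """Replace None (missing) entries with the mean of the known values."""
    known = [v for v in values if v is not None]
    avg = mean(known)
    return [avg if v is None else v for v in values]

def make_bins(values, bin_size):
    """Sort the values and split them into consecutive bins of bin_size items."""
    data = sorted(values)
    return [data[i:i + bin_size] for i in range(0, len(data), bin_size)]

def smooth_by_bin_means(values, bin_size):
    """Replace every value in a bin by that bin's mean."""
    smoothed = []
    for b in make_bins(values, bin_size):
        m = mean(b)
        smoothed.extend([m] * len(b))
    return smoothed

def smooth_by_bin_boundaries(values, bin_size):
    """Replace every value by the closer of its bin's min and max (ties go to the min)."""
    smoothed = []
    for b in make_bins(values, bin_size):
        lo, hi = b[0], b[-1]
        smoothed.extend(lo if v - lo <= hi - v else hi for v in b)
    return smoothed

# Hypothetical attribute with one missing value.
ages = [20, None, 30, 40]
filled = fill_with_mean(ages)  # the gap gets the mean of 20, 30 and 40

# Hypothetical sorted prices, smoothed with bins of three values each.
prices = [4, 8, 15, 21, 21, 24, 25, 28, 34]
by_means = smooth_by_bin_means(prices, 3)
by_bounds = smooth_by_bin_boundaries(prices, 3)
```

With bins of three, the prices fall into the bins [4, 8, 15], [21, 21, 24] and [25, 28, 34], so smoothing by means gives 9, 22 and 29 for the three bins, while smoothing by boundaries keeps each value's nearest bin edge.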

Data Integration:

In this step, data from different sources is combined into a coherent data store, as in data warehousing. Conflicts between the sources, such as different names for the same attribute or different units for the same value, are also resolved here.
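A minimal sketch of integration, assuming two hypothetical sources that describe the same customers but use different key names (`cust_id` vs `customer_no`) and different units for the balance (dollars vs cents). The field names and the conflict rule (convert to a common unit, keep the first source's value, and flag any remaining disagreement) are illustrative assumptions, not a standard procedure.

```python
# Source A: customer records keyed by "cust_id", balance in dollars.
source_a = [
    {"cust_id": 1, "name": "Ann", "balance_usd": 120.0},
    {"cust_id": 2, "name": "Bob", "balance_usd": 75.5},
]
# Source B: the same customers keyed by "customer_no", balance in cents.
source_b = [
    {"customer_no": 1, "city": "Oslo", "balance_cents": 12000},
    {"customer_no": 2, "city": "Lima", "balance_cents": 8000},
]

def integrate(a_rows, b_rows):
    """Merge both sources into one store keyed by a common customer id."""
    store = {}
    for row in a_rows:
        store[row["cust_id"]] = {
            "name": row["name"], "city": None,
            "balance": row["balance_usd"], "conflict": False,
        }
    for row in b_rows:
        rec = store.setdefault(
            row["customer_no"],
            {"name": None, "city": None, "balance": None, "conflict": False},
        )
        rec["city"] = row["city"]
        b_balance = row["balance_cents"] / 100  # unit conflict: cents -> dollars
        if rec["balance"] is None:
            rec["balance"] = b_balance
        elif rec["balance"] != b_balance:
            rec["conflict"] = True  # values disagree even after conversion; flag for review
    return store

warehouse = integrate(source_a, source_b)
```

Customer 1 merges cleanly once cents are converted to dollars, while customer 2 is flagged because the two sources still disagree (75.5 vs 80.0).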

Data Transformation:

In this step, the data is transformed into a form that is understandable and appropriate for mining. Data transformation involves the following steps:

- Normalization: The data values are scaled to a specific range, for example -1.0 to 1.0 or 0.0 to 1.0. This prevents attributes with large ranges from outweighing attributes with small ranges.

- Smoothing: It is used to remove noise from the data. Its techniques include binning, clustering, and regression.

- Aggregation: Summary or aggregation operations are applied to the data; for example, daily sales figures may be aggregated into monthly totals.

- Generalization of the data: Raw, low-level data is replaced by higher-level concepts by using concept hierarchies; for example, a numeric age can be replaced by a category such as young or senior.
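The following sketch illustrates three of the transformations above on made-up values. Min-max normalization maps a value v from the range [min, max] to [new_min, new_max] with v' = (v - min) / (max - min) * (new_max - new_min) + new_min; the aggregation sums hypothetical daily sales into monthly totals; and the generalization uses an assumed three-level age hierarchy whose cut-offs (30 and 60) are arbitrary choices for illustration.

```python
from collections import defaultdict

def min_max_normalize(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values into [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # all values equal: map everything to new_min to avoid dividing by zero
        return [new_min for _ in values]
    scale = (new_max - new_min) / (hi - lo)
    return [(v - lo) * scale + new_min for v in values]

def aggregate_monthly(daily_sales):
    """Sum (date, amount) pairs into per-month totals; dates are 'YYYY-MM-DD' strings."""
    totals = defaultdict(float)
    for date, amount in daily_sales:
        totals[date[:7]] += amount  # the 'YYYY-MM' prefix is the month key
    return dict(totals)

def generalize_age(age):
    """Replace a raw age by a higher-level concept (hypothetical cut-offs)."""
    if age < 30:
        return "young"
    if age < 60:
        return "middle-aged"
    return "senior"

values = [10, 20, 30, 50]
unit_range = min_max_normalize(values)              # scaled to 0.0 .. 1.0
sym_range = min_max_normalize(values, -1.0, 1.0)    # scaled to -1.0 .. 1.0

sales = [("2024-01-05", 100.0), ("2024-01-20", 50.0), ("2024-02-03", 70.0)]
monthly = aggregate_monthly(sales)

age_groups = [generalize_age(a) for a in [25, 45, 70]]
```

Min-max scaling keeps the relative spacing of the values, so 20 ends up a quarter of the way along the new range because it sits a quarter of the way between 10 and 50.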

Data Reduction:

Data mining often has to handle very large amounts of data, and analysis becomes harder as the volume of data grows. Data reduction obtains a smaller representation of the data set that still produces the same, or almost the same, analytical results. The basic aim is to increase storage efficiency and thereby reduce data storage and analysis costs.

The steps involved in the process of data reduction are the following:

- Data Cube Aggregation: Aggregation operations are applied to the data to construct a data cube.

- Attribute Subset Selection: Only the highly relevant attributes are kept, and the remaining ones are discarded. To perform attribute selection, one can use the level of significance and the p-value of each attribute: an attribute whose p-value is greater than the chosen significance level can be discarded.

- Numerosity Reduction: Instead of storing the whole data set, only a model of the data is stored; regression models are one example.

- Dimensionality Reduction: The size of the data is reduced through encoding mechanisms. If the original data can be fully reconstructed from the compressed data, the reduction is called lossless; otherwise it is called lossy. Two effective methods of dimensionality reduction are wavelet transforms and PCA (Principal Component Analysis), both of which are generally lossy.
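Numerosity reduction with a regression model can be sketched as follows: a set of (x, y) points is replaced by the two parameters of an ordinary least-squares line, so only the slope and intercept need to be stored, and approximate y values are recomputed from the model when needed. The data points and the function names `fit_line` and `reconstruct` are hypothetical; the approach only works well when the data roughly follows a linear trend, and the reconstruction is approximate (lossy).

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def reconstruct(model, xs):
    """Recompute approximate y values from the stored (slope, intercept) model."""
    slope, intercept = model
    return [slope * x + intercept for x in xs]

xs = [1, 2, 3, 4, 5, 6]
ys = [3.1, 4.9, 7.2, 8.8, 11.1, 12.9]  # roughly y = 2x + 1 with a little noise
model = fit_line(xs, ys)               # only these two numbers are kept
approx = reconstruct(model, xs)
```

Here six y values shrink to two stored numbers, and every reconstructed value stays within 0.2 of the original.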

Data Discretization:

Data Discretization transforms the values of a continuous attribute into a finite set of intervals, which reduces the number of distinct values the attribute takes. This is done by dividing the range of the attribute into intervals and replacing each value with the label of the interval it falls into.
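One common way to divide an attribute's range is equal-width discretization, sketched below: the range [min, max] is split into k intervals of equal width and each value is replaced by the index of its interval. The choice of k = 3, the sample temperatures and the function names are assumptions for illustration.

```python
def equal_width_discretize(values, k):
    """Map each value to the index (0..k-1) of one of k equal-width intervals."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k
    labels = []
    for v in values:
        if width == 0:
            labels.append(0)  # constant attribute: everything falls in one interval
            continue
        idx = int((v - lo) / width)
        labels.append(min(idx, k - 1))  # the maximum value belongs to the last interval
    return labels

def interval_bounds(values, k):
    """Return the (start, end) boundaries of the k equal-width intervals."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k
    return [(lo + i * width, lo + (i + 1) * width) for i in range(k)]

temps = [0, 5, 12, 18, 25, 30]
labels = equal_width_discretize(temps, 3)
bounds = interval_bounds(temps, 3)
```

Six distinct temperatures are reduced to three interval labels covering 0 to 10, 10 to 20 and 20 to 30.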

Data Sampling:

Data sampling is a technique used to select and work with a subset of the data set instead of the whole. This works when the subset is representative, that is, when it has properties similar to those of the original data set.
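Two common ways to draw such a subset are sketched below with Python's standard `random` module: simple random sampling without replacement, and stratified sampling, which draws the same fraction from each group so that the sample keeps the original group proportions. The records, the `group` key and the 10% fraction are hypothetical, and the fixed seed is used only to make the run repeatable.

```python
import random
from collections import defaultdict

def simple_random_sample(rows, n, seed=None):
    """Draw n distinct rows uniformly at random (without replacement)."""
    rng = random.Random(seed)
    return rng.sample(rows, n)

def stratified_sample(rows, key, fraction, seed=None):
    """Draw round(fraction * group size) rows from each group defined by key."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    sample = []
    for members in groups.values():
        k = round(len(members) * fraction)
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical data set: 80 records in group "A" and 20 in group "B".
data = [{"id": i, "group": "A" if i < 80 else "B"} for i in range(100)]
srs = simple_random_sample(data, 10, seed=42)
strat = stratified_sample(data, "group", 0.1, seed=42)
```

The stratified sample always contains 8 "A" records and 2 "B" records, matching the 80/20 split of the full data, whereas a simple random sample of 10 only matches it on average.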