Gradient Descent

When training a neural network, we use an algorithm called Gradient Descent to minimize the loss. The loss measures how far the outputs of the hypothesis function are from the correct outputs.

The gradient is like a slope: it tells us the direction in which the loss function increases as the weights change. From that slope, we can figure out the direction in which the weights should move to decrease the loss (the opposite direction), i.e., to reduce the incorrect outputs.
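In symbols, one gradient descent step is commonly written as shown below. The learning rate $\eta$ is a small positive number that controls how far the weights move in one step. It is a standard symbol introduced here for illustration and is not named in the text above. The minus sign makes the weights move against the gradient, i.e. downhill on the loss.

$$
w \leftarrow w - \eta \, \frac{\partial L}{\partial w}
$$

For example, take $L(w) = (w - 3)^2$, whose gradient is $2(w - 3)$. Starting from $w = 0$ with $\eta = 0.1$, one step gives $w = 0 - 0.1 \cdot (-6) = 0.6$, which is closer to the minimum at $w = 3$.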

To train the neural network, we start by choosing the weights randomly. We then use the training inputs and outputs to adjust the weights step by step, so that the loss decreases.

So, to train the neural network, the following steps are followed:

- Start with random values of weights.

- Repeat:

  - Calculate the gradient based on all the data points. It gives the direction that will lead to decreasing loss.

  - Update the values of the weights according to the gradient.
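The loop above can be sketched in Python. This is a minimal sketch built on assumptions that are not stated in the text:

- a one-weight linear model `y_hat = w * x + b`;
- mean squared error as the loss;
- a hand-picked learning rate;
- made-up toy data generated from y = 2x + 1.

Every update uses the gradient averaged over all data points.

```python
import random

# Toy data (hypothetical): points on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

def batch_gradient(w, b, xs, ys):
    """Gradient of the mean squared error over ALL data points."""
    n = len(xs)
    dw = db = 0.0
    for x, y in zip(xs, ys):
        error = (w * x + b) - y
        dw += 2 * error * x / n
        db += 2 * error / n
    return dw, db

random.seed(0)
w, b = random.uniform(-1, 1), random.uniform(-1, 1)  # start with random weights
learning_rate = 0.05  # assumed step size

for step in range(2000):  # repeat
    dw, db = batch_gradient(w, b, xs, ys)
    w -= learning_rate * dw  # move against the gradient
    b -= learning_rate * db

print(round(w, 3), round(b, 3))
```

Every step loops over the whole dataset, so the cost of a single update grows with the number of data points.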

Training the neural network on all the data points and adjusting the weights a little at a time eventually leads to a good solution with low loss. However, this process can take a lot of time when the dataset is huge. Every update requires calculating the gradient over all the data points and changing the weights, and this cycle repeats until a good solution is reached.

So, to overcome this problem, there is one method called Stochastic Gradient Descent.

Stochastic Gradient Descent – In Stochastic Gradient Descent, the gradient is calculated based on one data point at a time.

So, training starts by choosing the weights randomly, then calculating the gradient based on only one data point and updating the weights. The same process is repeated with the updated weights and a different data point each time, until a good solution is reached.

So, the steps followed are:

- Start with random values of weights.

- Repeat:

  - Calculate the gradient based on one data point. It gives the direction that will lead to decreasing loss.

  - Update the values of the weights according to the gradient.
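A minimal sketch of stochastic gradient descent in Python follows. It assumes a one-weight linear model `y_hat = w * x + b`, squared error as the loss, an assumed learning rate, and made-up toy data from y = 2x + 1. Each update uses the gradient from a single randomly chosen data point.

```python
import random

# Toy data (hypothetical): points on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

def point_gradient(w, b, x, y):
    """Gradient of the squared error for ONE data point."""
    error = (w * x + b) - y
    return 2 * error * x, 2 * error

random.seed(0)
w, b = random.uniform(-1, 1), random.uniform(-1, 1)  # start with random weights
learning_rate = 0.01  # assumed step size

for step in range(5000):  # repeat
    i = random.randrange(len(xs))  # pick one data point at random
    dw, db = point_gradient(w, b, xs[i], ys[i])
    w -= learning_rate * dw
    b -= learning_rate * db

print(round(w, 2), round(b, 2))
```

Each update touches only one data point, so it is much cheaper than a full-batch update. On real, noisy data, single-point gradients point in somewhat different directions, which is why the result can be less accurate.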

Because each gradient is estimated from a single data point, the updates are noisy, so the final solution can be less accurate. However, each update is much cheaper, so training with this method takes less time than with standard gradient descent.

There’s also one more method called mini-batch Gradient Descent.

Mini-Batch Gradient Descent – In this method, the gradient is calculated based on a small batch (a subset) of the data points.

So, training starts by choosing the weights randomly and then calculating the gradient based on a group of data points. The same process is repeated with the updated weights and a different group of data points each time, until a good solution is reached.

So, the steps followed are:

- Start with random values of weights.

- Repeat:

  - Calculate the gradient based on one small batch of data points. It gives the direction that will lead to decreasing loss.

  - Update the values of the weights according to the gradient.

In this method, the batch size can be chosen to suit the problem. Each gradient averages over several data points, so this method typically gives a more accurate solution than stochastic gradient descent. Each update also requires less computation than in standard gradient descent, so it takes less time.
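A sketch of mini-batch gradient descent in Python follows. It assumes a one-weight linear model `y_hat = w * x + b`, squared error as the loss, an assumed learning rate, and made-up data from y = 2x + 1. The function name `minibatch_gradient_descent` and its parameters are hypothetical. `batch_size` is the batch size the text says can be chosen:

- setting it to 1 gives stochastic gradient descent;
- setting it to the number of data points gives standard gradient descent.

```python
import random

# Toy data (hypothetical): points on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

def minibatch_gradient_descent(xs, ys, batch_size, learning_rate=0.02, epochs=1000, seed=0):
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # start with random weights
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the data in a new random order each pass
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            dw = db = 0.0
            for i in batch:  # average the gradient over this batch only
                error = (w * xs[i] + b) - ys[i]
                dw += 2 * error * xs[i] / len(batch)
                db += 2 * error / len(batch)
            w -= learning_rate * dw
            b -= learning_rate * db
    return w, b

w, b = minibatch_gradient_descent(xs, ys, batch_size=2)
print(round(w, 2), round(b, 2))
```

Larger batches give smoother gradient estimates but cost more per update. Smaller batches are cheaper per update but noisier.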

With the help of gradient descent, many problems can be solved. So far, we have seen problems with multiple inputs and one output, but gradient descent can also train a neural network to estimate multiple outputs.

This allows us to do multi-class classification as well as binary classification.

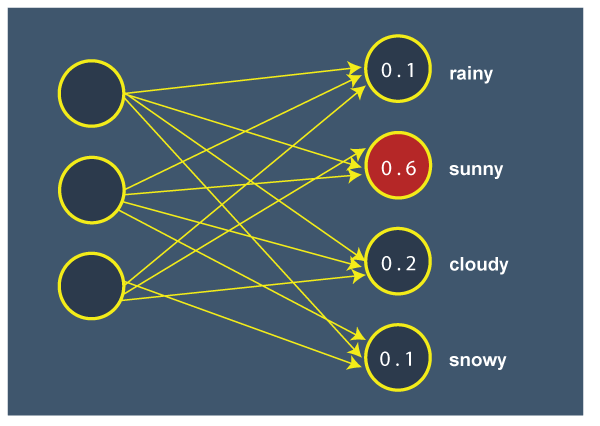

For example, consider predicting the weather as one of Rainy, Sunny, Cloudy, or Snowy.

Here, each input on the left has a value, and each edge has a weight assigned to it. After the computation, the network gives a probability for each class. For example, there might be a 10% chance of Rainy, 60% Sunny, 20% Cloudy and 10% Snowy. The class with the highest probability (here, Sunny) is the output. This is an example of a supervised machine learning problem. Neural networks have other applications as well; for example, these methods can also be used in reinforcement learning.
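A common way to turn the output nodes' raw scores into probabilities that sum to 1 is the softmax function. This is an assumption: the text does not say which function the network in the figure uses. The scores below are made up, chosen so that the resulting probabilities match the example's 10%, 60%, 20% and 10%.

```python
import math

classes = ["Rainy", "Sunny", "Cloudy", "Snowy"]
# Hypothetical output-node scores, chosen so softmax reproduces the example's percentages.
scores = [math.log(0.1), math.log(0.6), math.log(0.2), math.log(0.1)]

def softmax(values):
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax(scores)
prediction = classes[probs.index(max(probs))]  # class with the highest probability
for c, p in zip(classes, probs):
    print(f"{c}: {p:.0%}")
print("Prediction:", prediction)
```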

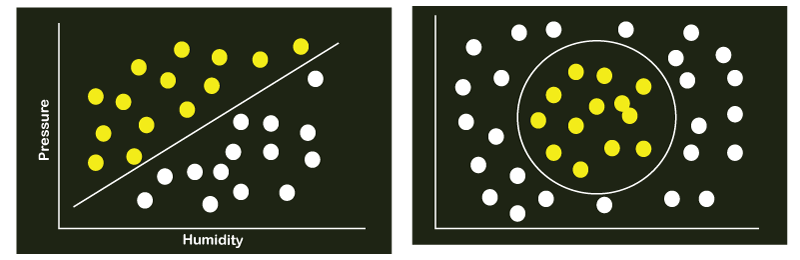

The limitation of this approach is that these methods only work if the data is linearly separable. Each output is defined by a linear combination of the inputs compared against a threshold value, so the decision boundary is a straight line.

For example, consider a binary classification problem where white data points represent '0' and yellow data points represent '1'. In the left figure, the white and yellow dots can easily be separated by a straight line. In the right figure, the decision boundary is more complex, and the points cannot be separated by a straight line. Much of the data we deal with in the real world is not linearly separable. In these cases, multilayer neural networks are used to model the data non-linearly.
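The difference can be checked on a tiny made-up example, not the data in the figure: the AND and XOR functions of two binary inputs. XOR outputs 1 when exactly one input is 1. The sketch tries every weight and threshold combination from a small grid for a single threshold unit. That unit outputs 1 when `w1*x1 + w2*x2 + b > 0`. A grid search can only show that a separating line exists, not prove that none does. XOR is known not to be linearly separable, so the search for it comes back empty.

```python
import itertools

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = [0, 0, 0, 1]
XOR = [0, 1, 1, 0]

def threshold_unit(w1, w2, b, x1, x2):
    """A single linear threshold unit: its decision boundary is a straight line."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0

grid = [v / 2 for v in range(-4, 5)]  # -2.0, -1.5, ..., 2.0

def find_line(targets):
    """Return the first (w1, w2, b) in the grid that reproduces targets, else None."""
    for w1, w2, b in itertools.product(grid, repeat=3):
        outputs = [threshold_unit(w1, w2, b, x1, x2) for x1, x2 in inputs]
        if outputs == targets:
            return w1, w2, b
    return None  # no line in this grid separates the classes

print("AND:", find_line(AND))
print("XOR:", find_line(XOR))
```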

Multilayer Neural Network

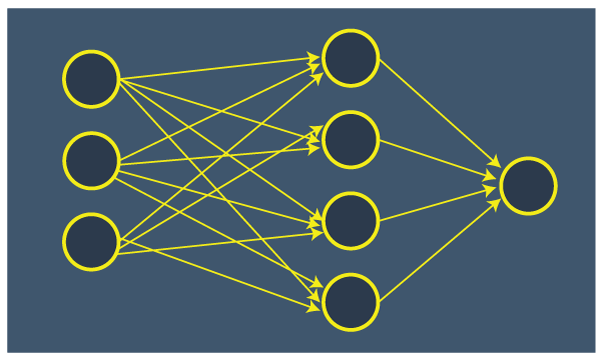

A multilayer neural network is an artificial neural network that has an input layer, an output layer, and at least one hidden layer in between. The figure shows what a multilayer neural network looks like.

The number of hidden layers and the number of nodes in each hidden layer can be chosen to suit the problem.

Each node in the hidden layer calculates its output value from a weighted (linear) combination of the inputs, passed through an activation function such as a threshold. The final output is then calculated from the output values of the hidden-layer nodes in the same way. Each hidden node's output value is multiplied by its respective weight, and the results are combined to give the final output.

This allows the network to compute more complex functions. Instead of having a single linear decision boundary, each node in the hidden layer can learn a different decision boundary, and these boundaries can be combined to produce the final output. In other words, each node in the hidden layer learns some useful feature of the input, and all of those features are combined to calculate the final output.
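As an illustration, consider a made-up example: XOR of two binary inputs, which outputs 1 when exactly one input is 1 and which no single straight line can separate. A network with one hidden layer can represent it. In this sketch the weights are chosen by hand rather than learned, and the threshold activation is an assumption. The two hidden nodes each use a different straight-line boundary:

- one hidden node acts as OR;
- the other hidden node acts as NAND.

The output node then combines them with AND.

```python
def step(z):
    """Threshold activation: 1 if the weighted sum is positive, else 0."""
    return 1 if z > 0 else 0

def xor_network(x1, x2):
    # Hidden layer: each node has its own weights, i.e. its own decision boundary.
    h_or = step(1.0 * x1 + 1.0 * x2 - 0.5)     # fires if at least one input is 1
    h_nand = step(-1.0 * x1 - 1.0 * x2 + 1.5)  # fires unless both inputs are 1
    # Output layer: weight the hidden outputs and combine them.
    return step(1.0 * h_or + 1.0 * h_nand - 1.5)  # fires only if both hidden nodes fire

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "->", xor_network(x1, x2))
```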

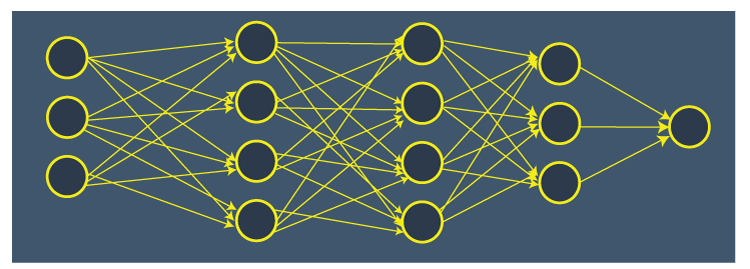

Now the question arises: how do we train this model? The training data gives us the values of the inputs and the outputs, but not the values of the nodes in the hidden layer. So we cannot directly tell how much each hidden node is responsible for the error. The algorithm used to solve this problem is called Backpropagation.

Backpropagation

Backpropagation is the algorithm used to train neural networks with hidden layers.

While training a multilayer neural network, we have the input and output values. To update the weights properly, we also need to know how much each node in each hidden layer contributed to the error. The Backpropagation algorithm calculates this by propagating the error backwards:

1. It first calculates the overall loss, i.e. how incorrect the outputs given by the hypothesis function are for the given input and output values.
2. It then moves backwards through the network and calculates the error of each node in the hidden layers. Each node's error is based on the error of the layer after it and the values of the weights connecting them.
3. These errors tell us how to update every weight in the network.
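For one concrete case, assume a network with one hidden layer, sigmoid activations $\sigma$, a single output $\hat{y}$, and the squared-error loss $L = \tfrac{1}{2}(\hat{y} - y)^2$. These choices are not in the text. Write $h_j = \sigma(z_j)$ with $z_j = \sum_i w_{ji} x_i + b_j$ for hidden node $j$, and $\hat{y} = \sigma(z_o)$ with $z_o = \sum_j v_j h_j + c$. Using $\sigma'(z) = \sigma(z)(1 - \sigma(z))$, the chain rule gives the output error first, and then each hidden node's error from it:

$$
\delta_o = (\hat{y} - y)\,\hat{y}(1 - \hat{y}), \qquad
\frac{\partial L}{\partial v_j} = \delta_o h_j, \qquad
\frac{\partial L}{\partial c} = \delta_o
$$

$$
\delta_j = h_j(1 - h_j)\, v_j\, \delta_o, \qquad
\frac{\partial L}{\partial w_{ji}} = \delta_j x_i, \qquad
\frac{\partial L}{\partial b_j} = \delta_j
$$

The hidden error $\delta_j$ is the output error sent backwards through the weight $v_j$. This is the "propagating backwards" step in concrete form.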

Steps followed are:

- Start with random values of weights.

- Repeat:

  - Calculate the error in the output layer.

  - For each layer, starting from the output layer and moving backwards towards the first hidden layer:

    - Calculate the error in the previous layer based on the values of the weights.

    - Update the weights.
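The loop can be sketched in plain Python. The sketch assumes one hidden layer, sigmoid activations, squared-error loss, and a made-up XOR training set. The names `W`, `b`, `v`, `c`, `forward`, `total_loss` and `backprop` are hypothetical. The backward pass computes two kinds of error:

- the output error, `(y_hat - y) * y_hat * (1 - y_hat)`;
- each hidden node's error, which is that output error multiplied by the connecting weight and by `h * (1 - h)`.

To check that the backward pass is correct, the sketch compares one analytic gradient with a finite-difference estimate. It then runs a few thousand gradient descent updates.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Made-up training data: XOR of two binary inputs.
data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
n_hidden = 3

random.seed(1)
# Random starting weights: W[j][i] input i -> hidden j, b[j] hidden bias,
# v[j] hidden j -> output, c output bias.
W = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
b = [random.uniform(-1, 1) for _ in range(n_hidden)]
v = [random.uniform(-1, 1) for _ in range(n_hidden)]
c = random.uniform(-1, 1)

def forward(x):
    h = [sigmoid(W[j][0] * x[0] + W[j][1] * x[1] + b[j]) for j in range(n_hidden)]
    y_hat = sigmoid(sum(v[j] * h[j] for j in range(n_hidden)) + c)
    return h, y_hat

def total_loss():
    return sum(0.5 * (forward(x)[1] - y) ** 2 for x, y in data)

def backprop():
    """Gradients of the total loss with respect to every weight."""
    gW = [[0.0, 0.0] for _ in range(n_hidden)]
    gb, gv, gc = [0.0] * n_hidden, [0.0] * n_hidden, 0.0
    for x, y in data:
        h, y_hat = forward(x)
        delta_o = (y_hat - y) * y_hat * (1 - y_hat)  # error in the output layer
        gc += delta_o
        for j in range(n_hidden):
            gv[j] += delta_o * h[j]
            delta_j = h[j] * (1 - h[j]) * v[j] * delta_o  # error sent back to hidden node j
            gb[j] += delta_j
            for i in range(2):
                gW[j][i] += delta_j * x[i]
    return gW, gb, gv, gc

# Gradient check: compare dL/dW[0][0] with a central finite difference.
eps = 1e-6
analytic = backprop()[0][0][0]
W[0][0] += eps
loss_plus = total_loss()
W[0][0] -= 2 * eps
loss_minus = total_loss()
W[0][0] += eps  # restore the original weight
numeric = (loss_plus - loss_minus) / (2 * eps)
print("analytic:", analytic, "numeric:", numeric)

# Training: repeat backpropagation followed by a gradient descent update.
learning_rate = 0.5  # assumed step size
loss_before = total_loss()
for step in range(5000):
    gW, gb, gv, gc = backprop()  # all gradients are computed before any weight changes
    for j in range(n_hidden):
        for i in range(2):
            W[j][i] -= learning_rate * gW[j][i]
        b[j] -= learning_rate * gb[j]
        v[j] -= learning_rate * gv[j]
    c -= learning_rate * gc
loss_after = total_loss()
print("loss before:", loss_before, "after:", loss_after)
```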

Backpropagation is the key algorithm for training multilayer neural networks: it determines how each weight should change so that the loss is minimized.

This algorithm also works for networks with many hidden layers; a network with multiple hidden layers is called a Deep Neural Network.