Node.js Streams

An Introduction to Streams

Streams are one of the fundamental concepts that power Node.js applications. They are a way of handling data: reading input or writing output sequentially, one piece at a time.

Streams are used to handle reading and writing files, network communications, or any kind of end-to-end data exchange in an efficient, well-organized manner.

So, what makes streams so unique? In the traditional approach, a program reads a whole file into memory at once before processing it. A stream, instead, reads the data in chunks and processes each chunk as it arrives, without keeping all of the data in memory.

This makes streams especially powerful when handling large amounts of data. For example, if a file is larger than the free memory available, it is impossible to read the complete file into memory in order to process it. That's where streams come into action!
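To make the memory argument concrete, here is a small sketch that counts a file's bytes with fs.createReadStream() instead of loading the whole file. The helper name countBytes, the 64 KiB highWaterMark, and the scratch file path are illustrative assumptions; only the fs, os, and path calls are real Node.js APIs.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Count a file's bytes chunk by chunk. The handler keeps only running totals,
// so memory use stays roughly bounded by the chunk size (highWaterMark),
// no matter how large the file is.
function countBytes(filePath, callback) {
  let bytes = 0;
  let chunks = 0;
  fs.createReadStream(filePath, { highWaterMark: 64 * 1024 }) // 64 KiB chunks
    .on('data', (chunk) => {
      bytes += chunk.length;
      chunks += 1;
    })
    .on('end', () => callback(null, { bytes, chunks }))
    .on('error', (err) => callback(err));
}

// Demo: a hypothetical 1 MiB scratch file in the OS temp directory.
const demoPath = path.join(os.tmpdir(), 'streams-count-demo.bin');
fs.writeFileSync(demoPath, Buffer.alloc(1024 * 1024, 'a'));

countBytes(demoPath, (err, result) => {
  if (err) throw err;
  console.log(`${result.bytes} bytes read in ${result.chunks} chunks`);
});
```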

Let's understand how streams work with an everyday example. Most of us are familiar with 'streaming' services such as YouTube, Amazon Prime, or Netflix. Instead of making us download the whole video and audio feed at once, these services let the browser receive the video as a continuous flow of chunks, so viewers can start watching or listening almost instantly.

However, that doesn't mean streams only work with big data or media. They also give our code 'composability'. Designing with composability in mind means building a program by combining several small components that share the same interface, so that the output of one can become the input of the next. With streams, Node.js makes it feasible to write powerful programs by piping data to and from other, smaller pieces of code.
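As a sketch of composability, assuming Node.js 12 or later (for Readable.from()), the example below builds a small pipeline from three independent pieces with stream.pipeline(). The helpers upperCase, collectInto, and shout are hypothetical names chosen for illustration.

```javascript
const { pipeline, Readable, Transform, Writable } = require('stream');

// Small, reusable pieces. Each one knows nothing about the others.
function upperCase() {
  return new Transform({
    transform(chunk, encoding, callback) {
      callback(null, chunk.toString().toUpperCase());
    },
  });
}

function collectInto(parts) {
  return new Writable({
    write(chunk, encoding, callback) {
      parts.push(chunk.toString());
      callback();
    },
  });
}

// Compose them: source -> transform -> destination.
// pipeline() connects the streams, forwards errors, and calls the final
// callback exactly once when everything has finished (or failed).
function shout(words, done) {
  const parts = [];
  pipeline(Readable.from(words), upperCase(), collectInto(parts), (err) => {
    done(err, parts.join(''));
  });
}

shout(['streams ', 'are ', 'composable'], (err, text) => {
  if (err) throw err;
  console.log(text); // STREAMS ARE COMPOSABLE
});
```

Because each piece only relies on the stream interface, upperCase() could be swapped for any other Transform without touching the source or the destination.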

Major Advantages of Streams

Compared with other data-handling methods, streams provide two major advantages:

- Time Efficiency: Processing can begin as soon as the first chunk of data arrives, instead of waiting until the complete payload has been transmitted, so it takes considerably less time to start working on the data.

- Memory Efficiency: We don't need to load large amounts of data into memory before processing it.

Node.js APIs Powered by Streams

Because of these advantages, many built-in Node.js modules offer native stream-handling capabilities. Some of them are listed below:

- The fs.createReadStream() method is used to create a readable stream from a file.

- The fs.createWriteStream() method is used to create a writable stream to a file.

- The http.request() method returns an instance of the http.ClientRequest class, which is a writable stream.

- The net.connect() method initiates a stream-based connection and returns a net.Socket, which is a duplex stream.

- The process.stdin property is a stream connected to stdin.

- The process.stdout property is a stream connected to stdout.

- The process.stderr property is a stream connected to stderr.

- The zlib.createGzip() method creates a stream that compresses data using the gzip algorithm.

- The zlib.createGunzip() method creates a stream that decompresses gzip data.

- The zlib.createDeflate() method creates a stream that compresses data using the deflate algorithm.

- The zlib.createInflate() method creates a stream that decompresses deflate data.
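Several of the APIs above can be combined. The sketch below, assuming Node.js 10 or later, gzips a file with fs.createReadStream(), zlib.createGzip(), and fs.createWriteStream(), then restores it with zlib.createGunzip(). The gzipRoundTrip helper and the temp-file names are hypothetical.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const zlib = require('zlib');
const { pipeline } = require('stream');
const { promisify } = require('util');

const pipelineAsync = promisify(pipeline);

// Compress `src` to `src + '.gz'` with zlib.createGzip(), then decompress that
// file into `restored` with zlib.createGunzip(). Data flows chunk by chunk from
// file stream to zlib stream to file stream.
async function gzipRoundTrip(src, restored) {
  const gzPath = `${src}.gz`;
  await pipelineAsync(fs.createReadStream(src), zlib.createGzip(), fs.createWriteStream(gzPath));
  await pipelineAsync(fs.createReadStream(gzPath), zlib.createGunzip(), fs.createWriteStream(restored));
  return gzPath;
}

// Demo with hypothetical scratch files in the OS temp directory.
const original = path.join(os.tmpdir(), 'streams-zlib-demo.txt');
const restoredCopy = path.join(os.tmpdir(), 'streams-zlib-demo.restored.txt');
fs.writeFileSync(original, 'Streams are fun!\n'.repeat(10000));

gzipRoundTrip(original, restoredCopy)
  .then((gzPath) => {
    console.log(`original:   ${fs.statSync(original).size} bytes`);
    console.log(`compressed: ${fs.statSync(gzPath).size} bytes`);
    // Reading whole files here is only for the demo's final comparison.
    const same = fs.readFileSync(restoredCopy).equals(fs.readFileSync(original));
    console.log(`round trip matches: ${same}`);
  })
  .catch((err) => {
    console.error(err);
    process.exitCode = 1;
  });
```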

Types of Streams

Node.js streams are classified into four categories:

- Readable Streams: Streams from which data can be read are known as Readable Streams. For example, to read a file's content, we can use the fs.createReadStream() method.

- Writable Streams: Streams to which data can be written are known as Writable Streams. For example, we can use the fs.createWriteStream() method to write data to a file using streams.

- Duplex Streams: Streams that are both readable and writable are known as Duplex Streams. For example, net.Socket.

- Transform Streams: Duplex streams that modify or transform the data as it is written and read are known as Transform Streams. For example, in file decompression, we can write compressed data into the stream returned by zlib.createGunzip() and read the decompressed data out of it.
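One way to see these four categories in practice is to test some built-in streams with instanceof. This is a sketch: the streamKind helper and the scratch file names are assumptions for illustration, while the classes come from the stream module. The order of the checks matters, because a Transform is also a Duplex, and a Duplex also counts as both Readable and Writable.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const net = require('net');
const zlib = require('zlib');
const { Readable, Writable, Duplex, Transform } = require('stream');

// Check the most specific class first: every Transform is also a Duplex,
// and a Duplex passes instanceof checks for both Readable and Writable.
function streamKind(s) {
  if (s instanceof Transform) return 'Transform';
  if (s instanceof Duplex) return 'Duplex';
  if (s instanceof Readable) return 'Readable';
  if (s instanceof Writable) return 'Writable';
  return 'not a stream';
}

// Hypothetical scratch files in the OS temp directory.
const readPath = path.join(os.tmpdir(), 'streams-kinds-in.txt');
const writePath = path.join(os.tmpdir(), 'streams-kinds-out.txt');
fs.writeFileSync(readPath, 'demo');

const examples = {
  'fs.createReadStream()': fs.createReadStream(readPath),
  'fs.createWriteStream()': fs.createWriteStream(writePath),
  'new net.Socket()': new net.Socket(), // created but never connected
  'zlib.createGzip()': zlib.createGzip(),
};

for (const [name, s] of Object.entries(examples)) {
  console.log(`${name} -> ${streamKind(s)}`);
  s.destroy(); // we only inspect the objects, so release them right away
}
```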

If we have worked with Node.js before, we have probably come across streams many times. For example, when we create a web server in Node.js, the request is a readable stream and the response is a writable stream. We have also used the fs module, which allows us to work with readable and writable file streams. Express also uses streams to communicate with the client, since its request and response objects build on those of Node's http module. Database connection drivers rely on streams as well, because the TCP sockets, the TLS stack, and the other connections they use are based on Node.js streams.
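The web server case can be sketched as follows, assuming only the built-in http module: the server pipes each request (a readable stream) straight into its response (a writable stream), and the client writes its body into the writable ClientRequest returned by http.request(). The echoThroughServer helper is a hypothetical name, and the server listens on a random free port on 127.0.0.1.

```javascript
const http = require('http');

// Start a throwaway echo server, POST `text` to it, and resolve with the reply.
// Server side: `req` is a Readable stream and `res` a Writable stream, so the
// request body can be piped straight back. Client side: http.request() returns
// an http.ClientRequest, which is a Writable stream we write the body into.
function echoThroughServer(text) {
  const server = http.createServer((req, res) => {
    res.writeHead(200, { 'Content-Type': 'text/plain' });
    req.pipe(res); // pipe() ends the response when the request body ends
  });

  return new Promise((resolve, reject) => {
    server.listen(0, '127.0.0.1', () => {
      const { port } = server.address(); // port 0 means "any free port"
      const clientReq = http.request(
        { host: '127.0.0.1', port, method: 'POST', agent: false },
        (clientRes) => {
          let body = '';
          clientRes.setEncoding('utf8');
          clientRes.on('data', (chunk) => { body += chunk; });
          clientRes.on('end', () => {
            server.close();
            resolve(body);
          });
        }
      );
      clientReq.on('error', (err) => {
        server.close();
        reject(err);
      });
      clientReq.end(text); // write the body and finish the request
    });
  });
}

echoThroughServer('Hello from a stream!').then((reply) => console.log(reply));
```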

Creating a Readable Stream

To create a readable stream, we import the stream module and initialize the base ‘Readable’ object:

const Stream = require('stream');
const readableStream = new Stream.Readable();

Now, we have to implement the _read() method:

readableStream._read = () => {};

Alternatively, we can supply the _read() implementation through the read option of the constructor:

const readableStream = new Stream.Readable({
  read() {}
});

Now that the stream is set up, we can push data into it:

readableStream.push('Hello!');
readableStream.push('Greetings!');
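In the snippets above, read() is empty and data is pushed from outside the stream. A common alternative is to generate the data inside read() itself, which Node.js calls whenever the consumer wants more, and to push null once there is nothing left. The createCounterStream helper below is a hypothetical example of that pattern.

```javascript
const { Readable } = require('stream');

// A Readable whose read() method produces data on demand: Node.js calls read()
// whenever it wants more, and each call answers with push().
// Pushing null tells consumers that no more data will come.
function createCounterStream(max) {
  let current = 1;
  return new Readable({
    read() {
      if (current > max) {
        this.push(null); // end of stream
      } else {
        this.push(`${current}\n`);
        current += 1;
      }
    },
  });
}

createCounterStream(3).pipe(process.stdout); // prints 1, 2 and 3 on separate lines
```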

Creating a Writable Stream

To create a writable stream, we initialize the base ‘Writable’ object:

const Stream = require('stream');
const writableStream = new Stream.Writable();

Now, we have to implement the _write() method. It receives each chunk, and calling next() signals that the chunk has been handled:

writableStream._write = (chunk, encoding, next) => {
  console.log(chunk.toString());
  next();
};

Now, we can pipe a readable stream into it:

process.stdin.pipe(writableStream);
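Just as with readable streams, the _write() implementation can also be passed to the constructor as a write option. The sketch below, using a hypothetical createCollector helper, gathers every chunk and reports the combined text on the 'finish' event, which is emitted after end() has been called and all data has been processed.

```javascript
const { Writable } = require('stream');

// Hypothetical helper: a Writable that collects every chunk it receives
// and hands the combined text to onDone once the writer calls end().
function createCollector(onDone) {
  const chunks = [];
  const writable = new Writable({
    write(chunk, encoding, next) {
      chunks.push(chunk.toString()); // chunk arrives as a Buffer by default
      next(); // chunk handled; pass an Error to next() to signal a failure
    },
  });
  writable.on('finish', () => onDone(chunks.join('')));
  return writable;
}

const collector = createCollector((text) => console.log(`Received: ${text}`));
collector.write('Hello, ');
collector.end('streams!'); // Received: Hello, streams!
```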

Getting data from a Readable Stream

To read data from a readable stream, we can pipe it into a writable stream, as shown below:

const Stream = require('stream');

const readableStream = new Stream.Readable({
  read() {}
});

const writableStream = new Stream.Writable();

writableStream._write = (chunk, encoding, next) => {
  console.log(chunk.toString());
  next();
};

readableStream.pipe(writableStream);

readableStream.push('Hello!');
readableStream.push('Greetings!');

The above code produces the following output:

Hello!
Greetings!

There is also a more direct way to consume a readable stream: listening for the 'readable' event, as shown below:

const fs = require('fs');

const readableStream = fs.createReadStream('newfile.txt');

readableStream.on('readable', () => {
  console.log(`Readable: ${readableStream.read()}`);
});

readableStream.on('end', () => {
  console.log('End');
});

Assuming newfile.txt contains the text "Welcome to TUTORIALANDEXAMPLE.com", the above code produces the following output:

Readable: Welcome to TUTORIALANDEXAMPLE.com
Readable: null
End

The 'readable' event fires once more when the end of the file is reached; at that point read() returns null, and then the 'end' event is emitted.
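Readable streams are also async iterables (Node.js 10 and later), so they can be consumed with for await...of instead of event handlers. In the sketch below, readWithIteration and the temp-file name are hypothetical; the file content simply mirrors the example above.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Readable streams are async iterables, so chunks can be consumed with
// for await...of instead of 'readable' or 'data' event handlers.
async function readWithIteration(filePath) {
  const chunks = [];
  for await (const chunk of fs.createReadStream(filePath, { encoding: 'utf8' })) {
    chunks.push(chunk); // strings, because an encoding was given
  }
  return chunks.join('');
}

// Demo with a throwaway file (hypothetical name) in the OS temp directory.
const demoFile = path.join(os.tmpdir(), 'newfile-demo.txt');
fs.writeFileSync(demoFile, 'Welcome to TUTORIALANDEXAMPLE.com');
readWithIteration(demoFile).then((text) => console.log(`Read: ${text}`));
```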

Sending data to a Writable Stream

We can use the write() method to write data to a writable stream:

writableStream.write('Welcome to tutorialandexample.com\n');

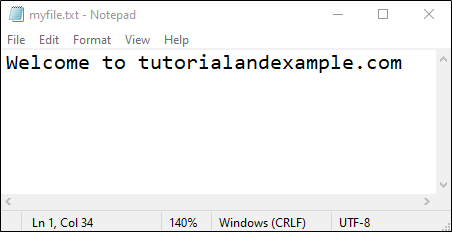
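write() also returns a boolean: false means the stream's internal buffer has reached its highWaterMark, and the writer should wait for the 'drain' event before writing more (this is called backpressure). The sketch below shows one way to respect that; writeMany and the deliberately slow slowSink destination are hypothetical names used for illustration.

```javascript
const { Writable } = require('stream');

// Write every chunk while respecting backpressure. write() returns false once
// the stream's internal buffer reaches highWaterMark; we then stop and resume
// on the 'drain' event instead of buffering without limit.
function writeMany(writable, chunks, done) {
  let i = 0;
  function writeNext() {
    while (i < chunks.length) {
      const ok = writable.write(chunks[i]);
      i += 1;
      if (!ok) {
        writable.once('drain', writeNext); // buffer is full: wait
        return;
      }
    }
    writable.end(done); // all chunks handed over; done runs once finished
  }
  writeNext();
}

// Demo: a deliberately slow destination with a tiny buffer, so write()
// returns false almost immediately.
let drainCount = 0;
const received = [];
const slowSink = new Writable({
  highWaterMark: 4, // bytes
  write(chunk, encoding, next) {
    received.push(chunk.toString());
    setImmediate(next); // pretend the destination is slow
  },
});
slowSink.on('drain', () => { drainCount += 1; });

writeMany(slowSink, ['alpha', 'beta', 'gamma', 'delta'], () => {
  console.log(`${received.join(' ')} (waited for 'drain' ${drainCount} times)`);
});
```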

Sending a signal to a Writable Stream to end writing

We can signal to a writable stream that we have finished writing by calling the end() method:

// Write 'Welcome to ' and then end with 'tutorialandexample.com'.
const fs = require('fs');
const file = fs.createWriteStream('myfile.txt');
file.write('Welcome to ');
file.end('tutorialandexample.com');
// Now, no more writing is allowed!

The above code creates a new file named ‘myfile.txt’ and writes the text "Welcome to tutorialandexample.com" into it. The result will look as given below: