Deep learning Tutorial

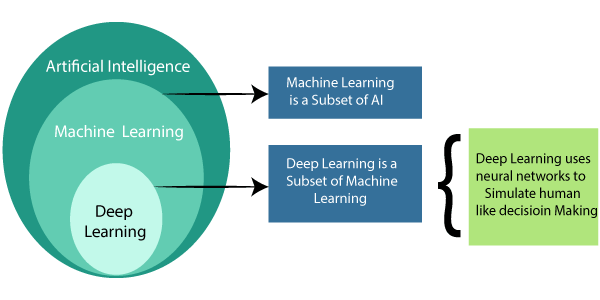

Deep learning is a part of the machine learning methods based on artificial neural networks. It is a key technology behind driverless cars, enabling them to recognize a stop sign. Deep learning is achieving results that were not possible before. With the help of deep learning, a computer model learns to perform classification tasks directly from images, text, and sound.

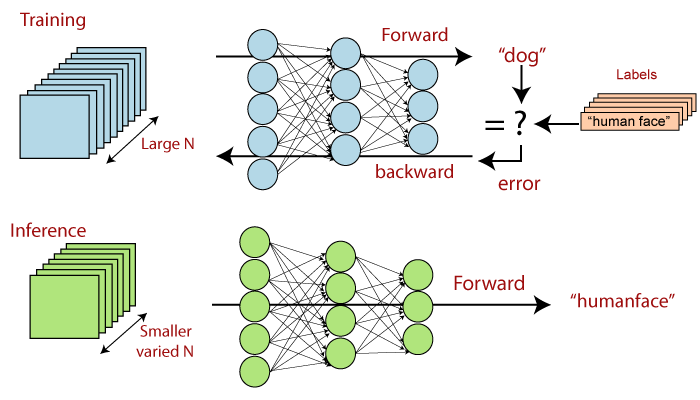

Figure: Diagram of deep learning.

Deep learning models can achieve state-of-the-art accuracy, sometimes exceeding human-level performance. These models are trained using large sets of labeled data and neural network architectures that contain many layers.

There are different types of algorithms in deep learning. These algorithms run data through several "layers" of a neural network. Deep learning requires a large amount of data to learn. Deep learning is used in many fields, such as automated driving, aerospace and defense, medical research, industrial automation, and electronics. Deep learning is also called deep neural learning or deep neural networks.
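To make the idea of data passing through several layers concrete, here is a minimal sketch in plain Python (no framework). Each layer multiplies its input by a weight matrix, adds a bias, and applies a nonlinearity (ReLU here). The layer sizes and weight values are made-up numbers for illustration, not a trained model; in practice the weights are learned from data.

```python
def relu(values):
    """Apply the ReLU nonlinearity element-wise: max(0, v)."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: out[j] = sum_i inputs[i] * weights[j][i] + biases[j]."""
    return [
        sum(x * w for x, w in zip(inputs, row)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Run the input through each (weights, biases) layer in turn.

    ReLU is applied after every layer except the last, which stays linear.
    """
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations


# Hypothetical network: 2 inputs -> 3 hidden units -> 2 hidden units -> 1 output.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, 0.0]),
    ([[0.2, 0.3, -0.5], [0.7, -0.1, 0.2]], [0.0, 0.0]),
    ([[1.0, -1.0]], [0.5]),
]

print(forward([1.0, 2.0], layers))
```

Adding more `(weights, biases)` pairs to `layers` makes the network "deeper"; nothing else in the code has to change.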

Deep learning is the structured or hierarchical learning element of machine learning. This learning can be supervised, unsupervised, or semi-supervised. Deep learning is a special approach to building and training neural networks.

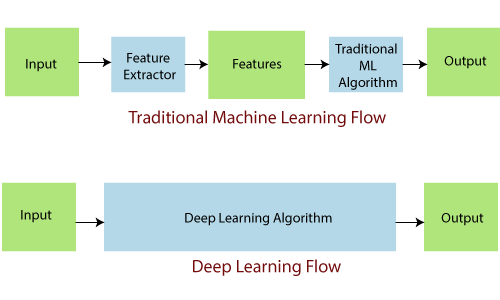

Feature extraction is another aspect of deep learning. A deep learning algorithm automatically creates meaningful “features” from the data, and these features support the training, learning, and understanding of the model. In traditional machine learning, data scientists or programmers are responsible for extracting features by hand; in deep learning, the model learns them itself.

History

In 1943, Walter Pitts and Warren McCulloch created a computer model based on the neural networks of the human brain. They used a combination of algorithms and mathematics in that model, and its developers called the thought process of that artificial model “threshold logic”.
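The idea of threshold logic can be illustrated with a minimal sketch of a threshold unit in Python: the unit outputs 1 ("fires") when the weighted sum of its binary inputs reaches a threshold, and 0 otherwise. The weights and thresholds chosen for AND and OR below are illustrative choices, not values taken from the 1943 model.

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be 1 for the sum (at most 2) to reach the threshold of 2.
    return threshold_unit([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach the threshold of 1.
    return threshold_unit([a, b], [1, 1], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

Only the threshold differs between the two gates; the same unit computes different logic depending on that single parameter.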

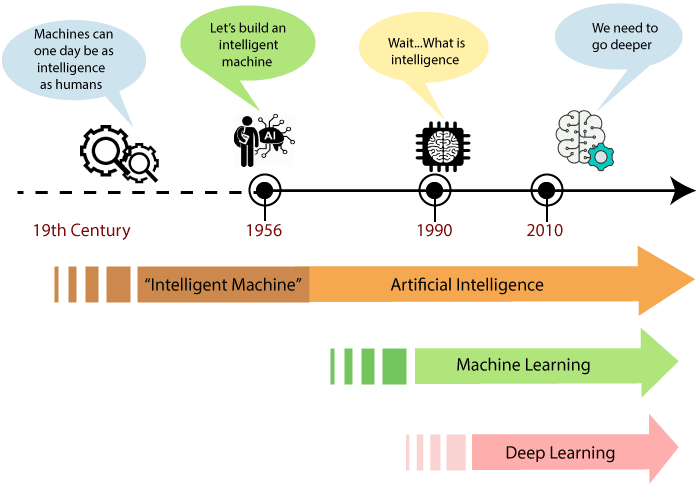

Figure: The history of Deep learning.

The first “convolutional neural network” was used by Kunihiko Fukushima. He designed a neural network with multiple pooling and convolutional layers. In 1979, he developed an artificial neural network known as the Neocognitron. It used a hierarchical, multi-layered design, which allowed the computer to learn and recognize visual patterns.

The framework of Deep learning

A deep learning framework is an interface, library, or tool that allows users to build deep learning models. With a framework, we can create models easily and quickly without going into the details of the underlying algorithms. It provides a clear and concise way to define a model using a collection of prebuilt, optimized components. Popular deep learning frameworks include TensorFlow, PyTorch, and Keras, and they are used by companies and products such as Gmail, Uber, Airbnb, and Nvidia.

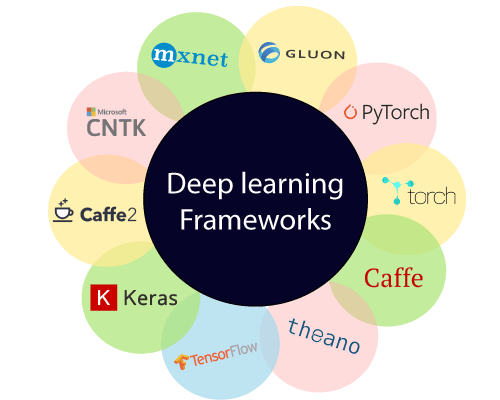

Figure: Framework of Deep learning.

With a suitable framework, we can build any type of model quickly instead of writing hundreds of lines of code. Some key features of a good deep learning framework are given below:

- Optimized performance.

- Code that is easy to understand and trouble-free to write.

- High-quality community support for working with the framework.

- Parallelized processes to reduce computation time.

- Automatic gradient calculation.
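Frameworks usually implement automatic gradient calculation with reverse-mode automatic differentiation. The following is a minimal scalar sketch of that idea in plain Python; it is not the API of TensorFlow, PyTorch, or any other framework, and the `Value` class and its methods are hypothetical names. Each `Value` records which values it was computed from, and `backward()` walks that graph in reverse to apply the chain rule.

```python
class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # Values this one was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def backward(self):
        """Apply the chain rule from this node back to every input."""
        # Topological order: every node appears after the nodes it was built from.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Reverse order: a node's gradient is complete before it is passed to its parents.
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad


# f(x, y) = x * y + x, so df/dx = y + 1 and df/dy = x.
x = Value(3.0)
y = Value(4.0)
f = x * y + x
f.backward()
print(f.data, x.grad, y.grad)
```

Because `x` is used twice, its gradient is accumulated with `+=` from both paths; this is exactly the bookkeeping a framework does for you across millions of parameters.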

A deep learning framework offers building blocks for designing, training, and validating deep neural networks through a high-level programming interface. Several frameworks are used in deep learning; some of them are given below:

TensorFlow

TensorFlow is an open-source software library for numerical computation using data flow graphs. It was developed by researchers and engineers from the Google Brain team. TensorFlow is popular because it supports multiple languages for creating deep learning models. It has thorough documentation and walkthroughs to guide the user.

PyTorch

PyTorch is an open-source machine learning library used for applications such as computer vision and natural language processing. It was developed by Facebook's artificial intelligence research group. PyTorch is released under the Modified BSD license. It is similar to NumPy in the way it handles numerical computation, and it has strong GPU (Graphics Processing Unit) support.

Keras

Keras is a deep learning framework and an open-source neural network library written in Python. It was developed by Francois Chollet and first released on March 27, 2015. It is cross-platform software and supports multiple back-end neural network computation engines. Keras focuses on being user-friendly, modular, and extensible, providing a clean and convenient way to create a range of deep learning models.

Caffe

Caffe is an open-source deep learning framework. It was developed at the University of California, Berkeley, and is written in C++ with a Python interface. It was designed with expression, speed, and modularity in mind. Its expressive architecture means that models and optimization are defined by configuration rather than by code. Its speed makes Caffe well suited for research experiments and industrial deployment.

Sonnet

Sonnet is a deep learning framework for implementing neural networks using TensorFlow. It was developed by DeepMind and designed for creating neural networks with complex architectures. Sonnet is a high-level, object-oriented library that provides abstraction when developing neural networks or other machine learning algorithms. It is mainly used to create Python objects, each corresponding to a specific part of a neural network. These objects are then separately connected to the TensorFlow computational graph.

MXNet

MXNet is an open-source, highly scalable deep learning framework. It is used to train and deploy deep neural networks and was developed under the Apache Software Foundation. The MXNet library is portable and scales to multiple GPUs and multiple machines. It is supported by public cloud providers, including Amazon Web Services and Microsoft Azure.

It is a multi-language machine learning library that eases the development of machine learning algorithms and is especially designed for deep neural networks. MXNet is lean, flexible, and ultra-scalable. It solves problems quickly and has easily maintainable code.

Gluon

Gluon is an open-source deep learning library developed by Amazon Web Services and Microsoft. It is used to train and deploy machine learning models in the cloud.

Gluon provides built-in neural network components as well as a user-friendly programming interface. This makes deep learning projects easier for programmers and developers who are not familiar with the technology.

Gluon can decrease the time and complexity of the training process by tightly integrating the neural network model with the training algorithm. It also supports programming loops and batch processing to execute tasks efficiently.

Gluon can be used to create simple as well as sophisticated deep learning models. Like PyTorch, it supports working with dynamic graphs.

Deeplearning4j

Eclipse Deeplearning4j is a deep learning programming library written for Java and the Java virtual machine. It was developed by a machine learning group headquartered in San Francisco and Tokyo, led by Adam Gibson.

DL4J is also a computing framework with wide support for deep learning algorithms. In Deeplearning4j, neural network training is carried out in parallel through iterations across clusters. It is a good deep learning platform for Java.

Advantages

Deep learning does not require manual feature extraction; it takes images directly as input. It does, however, require high-performance GPUs and lots of data for processing. Both feature extraction and classification are carried out by the deep learning algorithm; for images, this is typically done by a convolutional neural network.
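The feature-extraction stage of a convolutional neural network can be sketched in plain Python: a small kernel slides over the image to produce a feature map, ReLU keeps the positive responses, and max pooling shrinks the map. The 5x5 image and the vertical-edge kernel below are hypothetical examples; in a real CNN the kernel values are learned during training rather than written by hand. Like most deep learning libraries, `conv2d` here computes cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D convolution, computed as cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses, set negative ones to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window (leftover edges dropped)."""
    return [
        [
            max(
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# Hypothetical image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

features = max_pool(relu(conv2d(image, kernel)))
print(features)
```

The pooled map keeps the strong edge response in its left column while discarding the exact pixel position, which is the kind of compact feature a classifier layer can then use.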

The performance of deep learning algorithms improves as the amount of data increases. Some advantages of deep learning are given below:

- The architecture of deep learning is flexible and can be adapted to new problems in the future.

- Robustness to natural variations in the data is learned automatically.

- The neural network-based approach can be applied to many different applications and data types.

Disadvantages

Deep learning has several disadvantages, which are given below:

- Deep learning requires a very large amount of data to perform better than other techniques.

- There is no standard theory to guide you in selecting the right deep learning tools. This technology requires knowledge of topology, training methods, and other parameters. As a result, it is not easy for less skilled people to implement.

- Training complex deep learning models is extremely expensive.

- It can require expensive GPUs and hundreds of machines, which increases the cost for the user.

Prerequisites

1. Artificial Intelligence

Artificial Intelligence is accomplished by studying how the human brain thinks, and how humans learn, decide, and work while trying to solve a problem. The outcome of this study is used as a basis for developing intelligent software and systems.

In short, Artificial Intelligence is the ability of a machine to imitate intelligent human behavior.

Applications of Artificial Intelligence

Artificial Intelligence is used in a vast number of applications, but here we explain three basic ones:

- Speech Recognition.

- Understanding Natural Language.

- Image Recognition.

Now let us see how Artificial Intelligence can be achieved. The technologies used to achieve it are given below:

- Machine Learning

- Deep Learning

The concept of Artificial Intelligence is not new. The term was first coined in 1956, but at that time it was just a theoretical concept. In the 80s and 90s, people started talking about neural networks, but there was not enough computational power to use them properly.

In the late 90s and 2000s, neural networks started to be used for machine learning. The term deep learning became widely used from 2006, and deep learning has also been used commercially since 2010.

2. Machine Learning

Machine learning is a type of artificial intelligence. In other words, it is a subset of artificial intelligence that gives computers the ability to learn without being explicitly programmed.

Types of Machine learning

The main types of machine learning are given below:

A. Supervised Learning

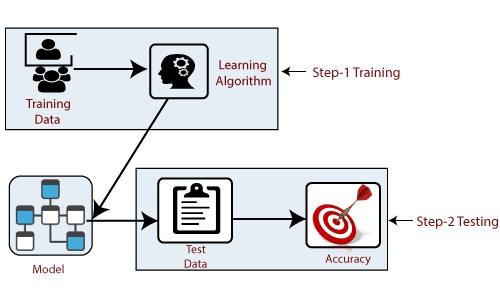

In supervised learning, we have an input variable (x) and an output variable (y), and we use an algorithm to learn the mapping function from the input to the output. In supervised learning, the correct outputs (labels) for the data set are already known.
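As a minimal sketch of learning a mapping function from x to y, the following plain-Python code fits a straight line y = w * x + b to a few labeled (x, y) pairs using gradient descent on the mean squared error. The data points, learning rate, and number of steps are hypothetical choices; a deep learning model learns a far more complex mapping, but it is trained with the same gradient-based idea.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Learn w and b so that y is approximately w * x + b (mean squared error)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step both parameters against their gradients.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical labeled data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```

The learned `w` and `b` are the "mapping function"; once trained, predicting y for a new x is just `w * x + b`.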

We have a data set that is split into training data and test data. We train our machine on the training data to create a model. To check the model's accuracy, we pass the test data through the model and compare its predictions with the known outputs. We can say that:

Accuracy = (number of test examples whose predicted output matches the actual output) / (total number of test examples)
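The train-then-test workflow and the accuracy formula can be sketched in plain Python. Here a very simple classifier, nearest centroid (predict the class whose average training point is closest), stands in for a real model; both the classifier choice and the tiny data set are hypothetical, chosen only to show how accuracy is computed on held-out test data.

```python
def train_nearest_centroid(points, labels):
    """'Training' here means computing the mean point (centroid) of each class."""
    sums, counts = {}, {}
    for (x, y), label in zip(points, labels):
        sx, sy = sums.get(label, (0.0, 0.0))
        sums[label] = (sx + x, sy + y)
        counts[label] = counts.get(label, 0) + 1
    return {label: (sx / counts[label], sy / counts[label])
            for label, (sx, sy) in sums.items()}


def predict(model, point):
    """Return the label of the closest centroid (squared Euclidean distance)."""
    x, y = point
    return min(model, key=lambda label: (model[label][0] - x) ** 2 + (model[label][1] - y) ** 2)


def accuracy(model, points, labels):
    """Accuracy = correct predictions / total number of test examples."""
    correct = sum(1 for p, label in zip(points, labels) if predict(model, p) == label)
    return correct / len(labels)


# Hypothetical training and test data for two classes, "A" and "B".
train_points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (7.0, 5.5), (6.5, 7.0)]
train_labels = ["A", "A", "A", "B", "B", "B"]
test_points = [(1.2, 1.4), (6.8, 6.1), (4.5, 4.0), (2.5, 2.0)]
test_labels = ["A", "B", "A", "A"]

model = train_nearest_centroid(train_points, train_labels)
print(accuracy(model, test_points, test_labels))
```

The point (4.5, 4.0) lies closer to the "B" centroid even though its label is "A", so three of the four test predictions are correct and the accuracy is 0.75. The test data is never used during training, which is what makes this an honest estimate.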

B. Unsupervised Learning

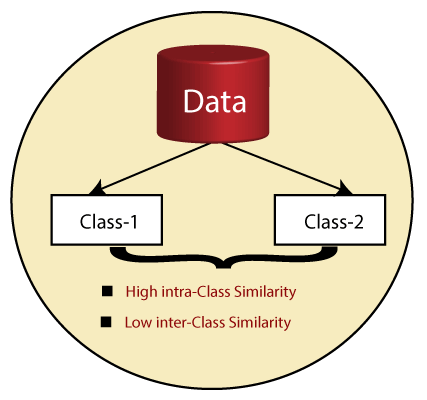

In unsupervised learning, we have data, and based on that data, we try to create our own classes. We try to make sure that every class we create has high intra-class similarity and low inter-class similarity. For example, if I create two classes, class 1 and class 2, the elements of class 1 should be highly similar to each other but should have low similarity with the elements of class 2.

Unsupervised learning is the training of a model using information that is neither classified nor labeled. The model is used to cluster the input data into classes based on their statistical properties.

This learning allows the algorithm to act on the given data without guidance. Here the task of the machine or model is to group unsorted data according to similarities, patterns, and differences without any prior training on labeled data.

No teacher is provided in unsupervised learning, which means the machine or model is not trained with labeled examples as in supervised learning. Unsupervised learning algorithms are classified into two categories:

Clustering

Clustering is the process of grouping similar entities together. The purpose of this unsupervised machine learning technique is to find similarities between data points and group similar data points together.

Clustering is used to simplify the data when we are dealing with a large number of variables. Many algorithms have been developed to implement this technique; two main algorithms are given below:

- K-means Clustering

- Hierarchical Clustering
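A minimal sketch of K-means clustering in plain Python on 2D points: it alternates between assigning each point to its nearest centre and moving each centre to the mean of the points assigned to it, until the centres stop moving. The data, the value of k, and the "use the first k points as starting centres" initialization are assumptions made to keep the example short and deterministic; practical implementations usually use random or k-means++ initialization.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def k_means(points, k, max_iterations=100):
    """Cluster points into k groups; returns (centres, assignments)."""
    centres = list(points[:k])  # simple deterministic initialization
    assignments = []
    for _ in range(max_iterations):
        # Assignment step: index of the nearest centre for every point.
        assignments = [
            min(range(k), key=lambda c: squared_distance(p, centres[c]))
            for p in points
        ]
        # Update step: move each centre to the mean of its points (keep it if empty).
        new_centres = []
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            new_centres.append(mean(members) if members else centres[c])
        if new_centres == centres:
            break  # converged: centres stopped moving
        centres = new_centres
    return centres, assignments


# Hypothetical 2D data with two visible groups.
points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (8.5, 9.0), (1.0, 1.5), (9.0, 8.0)]
centres, assignments = k_means(points, k=2)
print(centres)
print(assignments)
```

No labels are given to the algorithm; the two groups emerge only from the distances between points, which is what makes this unsupervised.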

Association

Association rule learning is a rule-based machine learning method for discovering interesting relationships between variables in large databases.
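Association rules are commonly scored with two measures: support (how often the items appear together) and confidence (how often the rule holds when its left-hand side appears). A minimal plain-Python sketch of both for a rule such as {bread} => {butter}, using a hypothetical list of shopping transactions:

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item in itemset."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= set(t)) / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Estimated P(consequent | antecedent) = support(A and C) / support(A)."""
    both = set(antecedent) | set(consequent)
    return support(transactions, both) / support(transactions, antecedent)


# Hypothetical shopping baskets.
transactions = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "jam"},
    {"bread", "butter", "jam"},
]

print(support(transactions, {"bread", "butter"}))
print(confidence(transactions, {"bread"}, {"butter"}))
```

Bread and butter appear together in 3 of 5 baskets (support 0.6), and 3 of the 4 baskets with bread also contain butter (confidence about 0.75). Algorithms such as Apriori search large databases for all rules whose support and confidence exceed chosen thresholds.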

C. Reinforcement Learning

Reinforcement learning is a field of machine learning and one of the three basic machine learning paradigms. It is all about making decisions sequentially: the output depends on the state of the current input, and the next input depends on the output of the previous input.
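Sequential decision-making can be illustrated with tabular Q-learning, one common reinforcement learning algorithm (the text above does not name a specific one). In this minimal sketch, a hypothetical agent walks along a five-cell corridor and receives a reward of 1 only when it reaches the right-hand end. The environment, rewards, and hyperparameters (`alpha`, `gamma`, `epsilon`, number of episodes) are all assumptions chosen for illustration.

```python
import random

N_STATES = 5        # cells 0..4; cell 4 is the goal (terminal)
ACTIONS = (-1, 1)   # move left or move right


def step(state, action):
    """Hypothetical environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: sometimes explore, otherwise take the best-known action
            # (ties broken randomly so the agent does not get stuck early on).
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # The value of this decision depends on the state it leads to next.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q


q = q_learning()
# Greedy action in each non-terminal cell after learning.
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

The reward arrives only at the end of a sequence of moves, and the discount factor `gamma` propagates it backwards so that earlier decisions learn their value from later ones.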

3. Limitations of Machine Learning

Machine learning has several limitations, which are given below:

- Machine learning requires a large amount of handcrafted, structured training data.

- In machine learning, every narrow application needs to be specially trained.

- Learning must generally be supervised, and the training data must be tagged.

- Machine learning requires lengthy offline/batch training.

- It does not learn incrementally or interactively in real time.

Deep learning applications

Deep learning has been applied to hundreds of problems, ranging from computer vision to natural language processing. It is heavily used both in academia, to study intelligence, and in industry, to build intelligent systems that assist humans in various tasks or jobs. Some applications of deep learning are given below:

1. Virtual assistants

Virtual assistants and online service providers use deep learning to help understand our speech and language when we interact with them.

2. Translations

Similarly, deep learning algorithms can automatically translate between languages. This can be powerful for travelers, business people, and those in government.

3. Vision for driverless delivery trucks, drones, and autonomous cars

Through deep learning algorithms, an autonomous vehicle understands the realities of the road and how to respond to them, whether it is a stop sign, a ball in the street, or another vehicle.

4. Chatbots and service bots

Chatbots and service bots provide customer service for many companies and are able to respond in an intelligent and helpful way.

5. Image colorization

Transforming black-and-white images into color was formerly done by hand. Nowadays, deep learning algorithms can use the context and objects in an image to color it, recreating the black-and-white image in color. The results produced by deep learning algorithms are impressive and accurate.

6. Facial recognition

Deep learning is also used in face recognition, not only for security purposes but also for tagging people in Facebook posts. Face recognition is the problem of identifying and verifying people in a photograph by their faces. It is a process comprising detection, alignment, and feature extraction.
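The verification part of face recognition is often done by comparing the feature vectors (embeddings) produced by the feature-extraction step. A minimal sketch in plain Python, assuming the embeddings have already been computed by some deep learning model: the vectors and the 0.8 similarity threshold below are hypothetical values for illustration, not output from a real face model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def same_person(embedding_a, embedding_b, threshold=0.8):
    """Hypothetical verification rule: accept if similarity reaches the threshold."""
    return cosine_similarity(embedding_a, embedding_b) >= threshold


# Hypothetical 4-dimensional embeddings (real models use much longer vectors).
enrolled = [0.9, 0.1, 0.3, 0.2]
new_photo_same = [0.85, 0.15, 0.35, 0.1]
new_photo_other = [0.1, 0.9, 0.2, 0.6]

print(same_person(enrolled, new_photo_same))
print(same_person(enrolled, new_photo_other))
```

The threshold trades off false accepts against false rejects; in practice it is tuned on labeled pairs of photos.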

Conclusion

Deep learning is a subset of machine learning in which artificial neural networks, algorithms inspired by the human brain, learn from large amounts of data. Artificial intelligence, in essence, is when machines can do tasks that typically require human intelligence. A deep learning algorithm performs a task repeatedly in order to learn it. We call it deep learning because the neural networks have several layers that enable learning.