ETL Tutorial

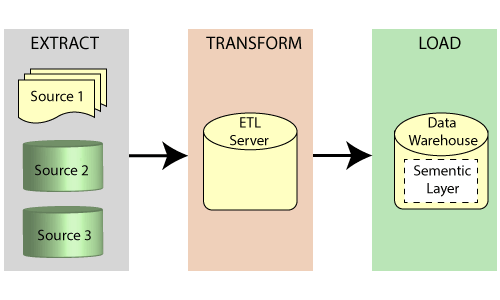

ETL is a process that extracts data from a source (a database, an XML file, text files, etc.), transforms the data, and then loads it into a data warehouse.

- What is ETL?

- Working of ETL

- ETL Architecture

- ETL Testing

- ETL Tools

- Installation of Talend

- ETL Pipeline

- ETL Files

- ETL Listed mark

What is ETL?

ETL stands for Extract, Transform, Load. ETL extracts data from different sources (an Oracle database, an XML file, a text file, etc.), transforms the data (by applying aggregate functions, keys, joins, etc.) using an ETL tool, and finally loads the data into the data warehouse for analytics.

ETL has three main processes:

- Extraction

- Transform

- Load

Extraction - Extraction is the process of collecting data from multiple sources, such as social sites and e-commerce sites. The data is collected in raw form, which is not yet useful.

Transform - In the transform phase, the raw data collected from multiple sources is cleansed and turned into useful information.

Load - In this phase, data is loaded into the data warehouse.
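The three phases can be illustrated with a minimal sketch. It assumes a hypothetical CSV export of sales rows as the source and uses an in-memory SQLite database to stand in for the data warehouse. The names `RAW_CSV` and `sales_by_region` are made up for illustration.

```python
import csv
import io
import sqlite3
from collections import defaultdict

# Hypothetical raw source: a CSV flat file with one sales row per line.
RAW_CSV = """region,amount
north,100
south,250
North ,50
"""

def extract(text: str) -> list[dict]:
    """Extract: read the raw rows from the CSV source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple[str, int]]:
    """Transform: standardize the region name, cast types, aggregate per region."""
    totals: dict[str, int] = defaultdict(int)
    for row in rows:
        totals[row["region"].strip().lower()] += int(row["amount"])
    return sorted(totals.items())

def load(conn: sqlite3.Connection, facts: list[tuple[str, int]]) -> None:
    """Load: write the aggregated rows into a warehouse table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales_by_region (region TEXT PRIMARY KEY, total INTEGER)")
    with conn:  # commit the whole load as one transaction
        conn.executemany("INSERT INTO sales_by_region VALUES (?, ?)", facts)

warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract(RAW_CSV)))
print(warehouse.execute("SELECT * FROM sales_by_region ORDER BY region").fetchall())
# [('north', 150), ('south', 250)]
```

The transform step is where inconsistent values such as `"North "` and `"north"` are merged before they reach the warehouse.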

Data warehouse - A data warehouse is a system for collecting and managing data from multiple external sources for business intelligence.

Working of ETL

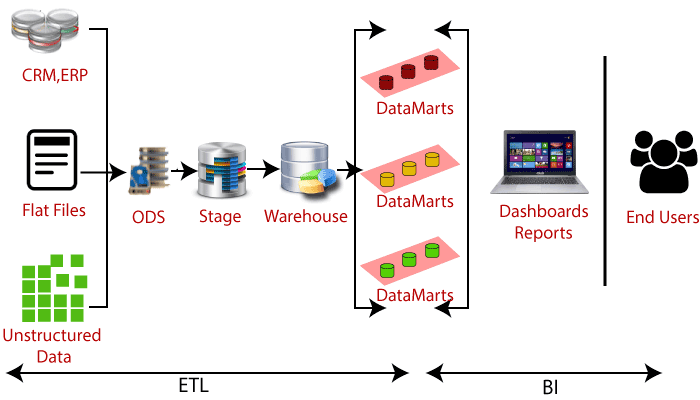

Data is extracted from multiple different sources. The data in OLTP systems is not consistent, so you need to standardize all the incoming data and then load it into the data warehouse. Many companies, particularly in the banking and insurance sectors, use mainframe systems. These are old legacy systems, and reporting from them is very difficult, so these companies are migrating to data warehouse systems. In a typical production environment, the data is extracted from the mainframes as files and sent to a UNIX or Windows server. Each file has a specific standard size, so multiple files can be sent, depending on the requirement.

Example: Suppose a file is received at 3 a.m. We process it with an ETL tool (for example, Informatica or Talend) to cleanse the data. Consider a website with a login form. Many people do not enter their last name or email address, or they enter them incorrectly, and the age field is often left blank. All of this data needs to be cleansed. Names in particular often contain special characters. ETL tools can remove these unwanted spaces and characters. The data is then loaded into an area called the staging area, where all the business rules are applied. For example, a business rule might say that every incoming record must also be present in the master table, and that a record that is not present must not be moved further. To apply this rule, we look up the master table to check whether the record is available.
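The cleansing described above could look like the following minimal sketch for one login-form record. The field names (`first_name`, `last_name`, `age`, `email`) and the exact character rules are assumptions for illustration. A real ETL tool would apply rules defined by the business.

```python
import re

def cleanse_record(record: dict) -> dict:
    """Return a cleansed copy of one (hypothetical) login-form record."""
    cleaned = dict(record)

    for field in ("first_name", "last_name"):
        value = cleaned.get(field) or ""
        # Drop special characters: keep word characters, apostrophes, spaces and
        # hyphens, but also remove digits and underscores.
        value = re.sub(r"[^\w' -]|[\d_]", "", value)
        # Collapse repeated whitespace and trim; an empty name becomes None.
        cleaned[field] = re.sub(r"\s+", " ", value).strip() or None

    # A blank or non-numeric age is treated as missing rather than loaded as-is.
    age = cleaned.get("age")
    age_text = "" if age is None else str(age).strip()
    cleaned["age"] = int(age_text) if age_text.isdecimal() else None

    # Very loose e-mail shape check; real rules would come from the business.
    email = (cleaned.get("email") or "").strip().lower()
    cleaned["email"] = email if re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", email) else None
    return cleaned

raw = {"first_name": "  Jane**  ", "last_name": "O'Brien#", "age": " ", "email": "JANE@EXAMPLE.COM "}
print(cleanse_record(raw))
# {'first_name': 'Jane', 'last_name': "O'Brien", 'age': None, 'email': 'jane@example.com'}
```

Because `\w` is Unicode-aware in Python, accented names such as "José" survive the cleansing.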

If the record is not present in the master table, the data remains in the staging area. Otherwise, it moves forward to the next level and is then loaded into the dimension tables. Schedulers can run the jobs at exactly 3 a.m., or when the files arrive, so a job can have a time dependency as well as a file dependency. Very little manual effort is needed to run the jobs. At the end of the job runs, we check whether the jobs have run successfully and whether the data has been loaded successfully.
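The master-table rule can be sketched in SQL. This example assumes hypothetical tables `master_customer`, `stg_orders` (staging), and `dw_orders` (the next level), all in an in-memory SQLite database. It moves only the staged rows whose customer exists in the master table, and it leaves the other rows in staging.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE master_customer (customer_id TEXT PRIMARY KEY);
CREATE TABLE stg_orders (order_id INTEGER PRIMARY KEY, customer_id TEXT, amount INTEGER);
CREATE TABLE dw_orders  (order_id INTEGER PRIMARY KEY, customer_id TEXT, amount INTEGER);
INSERT INTO master_customer VALUES ('C1'), ('C2');
INSERT INTO stg_orders VALUES (1, 'C1', 100), (2, 'C9', 75), (3, 'C2', 20);
""")

def promote_from_staging(conn: sqlite3.Connection) -> None:
    """Move staged rows that pass the master-table rule; keep the rest in staging."""
    with conn:  # one transaction: the copy and the delete succeed or fail together
        conn.execute("""
            INSERT INTO dw_orders
            SELECT s.* FROM stg_orders s
            WHERE EXISTS (SELECT 1 FROM master_customer m WHERE m.customer_id = s.customer_id)
        """)
        conn.execute("""
            DELETE FROM stg_orders
            WHERE customer_id IN (SELECT customer_id FROM master_customer)
        """)

promote_from_staging(conn)
print(conn.execute("SELECT order_id FROM dw_orders ORDER BY order_id").fetchall())  # [(1,), (3,)]
print(conn.execute("SELECT order_id FROM stg_orders").fetchall())  # [(2,)]
```

Order 2 stays in staging until customer `C9` appears in the master table. A later run would then promote it.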

Need of ETL

- ETL is a process that extracts raw data, transforms it, and loads it as data that users can work with.

- ETL helps firms examine their business data to make critical business decisions.

- It provides a method for transferring data from multiple sources to a data warehouse.

- Transactional databases cannot answer complicated business questions, but data loaded through ETL can help answer them.

- ETL is a predefined process for accessing source data and refining it into useful data.

- When the data source changes, the data warehouse is updated.

- Properly designed and validated ETL software is essential for successful data warehouse management.

- It can improve productivity, because ETL tools simplify the process and can often be used without deep technical skills.

ETL architecture

In today's data warehousing world, this term has been extended to E-MPAC-TL: Extract, Monitor, Profile, Analyze, Cleanse, Transform, and Load. In other words, the extended process also takes care of data quality and metadata.

Extract - In this phase, data is collected from multiple external sources. The collected data is in raw form and arrives as flat files, JSON, Oracle database tables, etc. This data is collected into the staging area. The staging area is used so that the performance of the source system does not degrade.

The staging area filters the extracted data and then moves it into the data warehouse.

There are three types of data extraction methods:

- Full extraction

- Partial extraction without update notification

- Partial extraction with update notification
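The three extraction methods are contrasted in the following minimal sketch. It assumes that the source rows carry an `updated_at` column. Without notifications, this column lets changed rows be identified against the time of the previous run. With notifications, the source supplies the changed IDs, for example from a change feed. Both the column and the notification list are hypothetical.

```python
from datetime import datetime

# Hypothetical source rows; "updated_at" is an assumed change-tracking column.
SOURCE = [
    {"id": 1, "name": "Ann", "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "name": "Bob", "updated_at": datetime(2024, 1, 5)},
    {"id": 3, "name": "Cid", "updated_at": datetime(2024, 1, 9)},
]

def full_extraction(source):
    """Full extraction: take every row on every run."""
    return list(source)

def partial_extraction_without_notification(source, last_run):
    """No notification from the source: find changed rows ourselves by
    comparing the change timestamp with the previous run (a watermark)."""
    return [row for row in source if row["updated_at"] > last_run]

def partial_extraction_with_notification(source, changed_ids):
    """The source tells us which records changed, so only those are read."""
    wanted = set(changed_ids)
    return [row for row in source if row["id"] in wanted]

print([r["id"] for r in full_extraction(SOURCE)])  # [1, 2, 3]
print([r["id"] for r in partial_extraction_without_notification(SOURCE, datetime(2024, 1, 4))])  # [2, 3]
print([r["id"] for r in partial_extraction_with_notification(SOURCE, [3])])  # [3]
```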

Transform - In this phase, we apply operations to the extracted data to modify it. The main focus should be on the operations offered by the ETL tool. In a medium- to large-scale data warehouse environment, it is necessary to standardize the data rather than customize it. ETL cuts down the throughput time of source-to-target development activities, which make up most of the traditional ETL effort.

Load - This is the last phase of the ETL process, in which data is loaded into the data warehouse. A large amount of data is loaded into the data warehouse in a relatively short period of time.

In case of a load failure, recovery mechanisms must be designed to restart from the point of failure without loss of data integrity. The data warehouse admin has to monitor, resume, or cancel loads according to the current server performance.
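Restarting from the point of failure can be sketched with a checkpoint that is committed in the same transaction as each batch. This example assumes an in-memory SQLite target and a hypothetical `load_checkpoint` table. The `fail_at_batch` parameter only simulates a crash. If a batch fails, it rolls back together with its checkpoint, so a rerun resumes exactly where the last committed batch ended.

```python
import sqlite3

def restartable_load(conn, rows, job="demo", batch_size=2, fail_at_batch=None):
    """Load rows in batches; each batch commits together with its checkpoint."""
    conn.execute("CREATE TABLE IF NOT EXISTS target (id INTEGER PRIMARY KEY, value TEXT)")
    conn.execute("CREATE TABLE IF NOT EXISTS load_checkpoint (job TEXT PRIMARY KEY, next_offset INTEGER)")
    found = conn.execute("SELECT next_offset FROM load_checkpoint WHERE job = ?", (job,)).fetchone()
    offset = found[0] if found else 0  # restart point; 0 on the very first run
    while offset < len(rows):
        batch = rows[offset:offset + batch_size]
        with conn:  # commits on success, rolls back the whole batch on any exception
            conn.executemany("INSERT INTO target VALUES (?, ?)", batch)
            if fail_at_batch is not None and offset // batch_size == fail_at_batch:
                raise RuntimeError(f"simulated failure at offset {offset}")
            conn.execute("INSERT OR REPLACE INTO load_checkpoint VALUES (?, ?)",
                         (job, offset + len(batch)))
        offset += len(batch)

conn = sqlite3.connect(":memory:")
rows = [(i, f"row{i}") for i in range(5)]
try:
    restartable_load(conn, rows, fail_at_batch=1)  # the first run dies in the second batch
except RuntimeError:
    pass
loaded_after_failure = conn.execute("SELECT COUNT(*) FROM target").fetchone()[0]  # 2
restartable_load(conn, rows)  # the rerun resumes at offset 2: no duplicates, nothing lost
loaded_after_restart = conn.execute("SELECT COUNT(*) FROM target").fetchone()[0]  # 5
```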

There are three types of loading methods:

- Initial Load

- Incremental Load

- Full Refresh
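The three loading methods can be contrasted against one table in the following minimal sketch. It assumes a hypothetical SQLite table `target` keyed on `id`. Note that SQLite's `INSERT OR REPLACE` handles a key conflict by deleting the old row and inserting the new one. Other databases typically use `MERGE` or an upsert statement for incremental loads.

```python
import sqlite3

def initial_load(conn, rows):
    """Initial load: create and populate the target table for the first time."""
    conn.execute("CREATE TABLE target (id INTEGER PRIMARY KEY, value TEXT)")
    with conn:
        conn.executemany("INSERT INTO target VALUES (?, ?)", rows)

def incremental_load(conn, changed_rows):
    """Incremental load: apply only new or changed rows, matched on the primary key."""
    with conn:
        conn.executemany("INSERT OR REPLACE INTO target VALUES (?, ?)", changed_rows)

def full_refresh(conn, rows):
    """Full refresh: erase the target's contents and reload everything."""
    with conn:
        conn.execute("DELETE FROM target")
        conn.executemany("INSERT INTO target VALUES (?, ?)", rows)

conn = sqlite3.connect(":memory:")
initial_load(conn, [(1, "a"), (2, "b")])
incremental_load(conn, [(2, "B"), (3, "c")])
print(conn.execute("SELECT * FROM target ORDER BY id").fetchall())  # [(1, 'a'), (2, 'B'), (3, 'c')]
full_refresh(conn, [(9, "z")])
print(conn.execute("SELECT * FROM target").fetchall())  # [(9, 'z')]
```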

Monitoring - In the monitoring phase, the data moving through the whole ETL process is monitored so that it can be verified. Monitoring has two main objectives. First, the data must be screened. A proper balance is needed between screening the incoming data as much as possible and not slowing down the overall ETL process with too much checking. An inside-out approach, as defined in Ralph Kimball's screening technique, should be used.

This method captures all errors consistently, based on a predefined set of metadata business rules, permits reporting on them through a simple star schema, and verifies the quality of the data over time. Second, the performance of the ETL process must be closely monitored. The raw data for this includes the start and end times of ETL operations in the different layers.

You should also capture information about the processed records (submitted, inserted, updated, discarded, or failed records). This metadata answers questions about data integrity and ETL performance. It can be linked to all dimension and fact tables as a so-called audit dimension and can therefore be referenced like any other dimension.
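The monitoring metadata described above could be captured per step in a small record like the one in this sketch. The class name `EtlStepAudit`, its fields, and the screening rule in the usage loop are hypothetical. The class stores the start and end times together with the submitted, inserted, updated, discarded, and failed counts.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass
class EtlStepAudit:
    """Hypothetical audit record for one ETL step: start/end time plus record counts."""
    step: str
    started_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    ended_at: Optional[datetime] = None
    submitted: int = 0
    inserted: int = 0
    updated: int = 0
    discarded: int = 0
    failed: int = 0

    def finish(self) -> None:
        """Stamp the end time when the step completes."""
        self.ended_at = datetime.now(timezone.utc)

    @property
    def duration_seconds(self) -> Optional[float]:
        """Elapsed time of the step, or None while it is still running."""
        if self.ended_at is None:
            return None
        return (self.ended_at - self.started_at).total_seconds()

audit = EtlStepAudit(step="load_customers")
for record in [{"id": 1}, {"id": 2}, {"id": None}]:
    audit.submitted += 1
    if record["id"] is None:
        audit.discarded += 1  # fails a screening rule, so it is not loaded
    else:
        audit.inserted += 1
audit.finish()
print(audit.step, audit.submitted, audit.inserted, audit.discarded)  # load_customers 3 2 1
```

Each finished record could then be written as one row to an audit table and joined to the fact tables it describes.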

Quality assurance - These processes verify that the data is complete: do we still have the same number of records, or the same totals for the defined metrics, between the different ETL phases? This information must be captured as metadata. Finally, data lineage must be provided throughout the ETL process, including for error records.
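A completeness check between two phases can be sketched as a reconciliation of record counts and one control total. The sketch assumes that every extracted record ends up either loaded or rejected, and that `amount_total` is the sum of a hypothetical amount column. The names `PhaseStats` and `reconcile` are illustrative only.

```python
from dataclasses import dataclass

@dataclass
class PhaseStats:
    """Record count and a control total (sum of an amount column) for one ETL phase."""
    records: int
    amount_total: float

def reconcile(extracted: PhaseStats, loaded: PhaseStats, rejected: PhaseStats) -> list[str]:
    """Return a list of problems; an empty list means the phases balance."""
    problems = []
    if extracted.records != loaded.records + rejected.records:
        problems.append(f"record count mismatch: {extracted.records} extracted vs "
                        f"{loaded.records} loaded + {rejected.records} rejected")
    # Small tolerance because the control totals are floats.
    if abs(extracted.amount_total - (loaded.amount_total + rejected.amount_total)) > 1e-6:
        problems.append("amount control total mismatch")
    return problems

print(reconcile(PhaseStats(100, 5000.0), PhaseStats(97, 4850.0), PhaseStats(3, 150.0)))  # []
print(reconcile(PhaseStats(100, 5000.0), PhaseStats(96, 4800.0), PhaseStats(3, 150.0)))
```

The second call reports both a count mismatch and a total mismatch, because one record disappeared between the phases.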

Data profiling - Data profiling is used to generate statistics about the sources. Its goal is to understand the sources. It uses analytical techniques to discover the actual content, quality, and structure of the data by decoding and validating data patterns and formats. Because the amount and variety of data are large, the right tool should be used to automate this process.
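Profiling one source column can be sketched as follows. The statistics (nulls, distinct values, min/max, and the most common value patterns) are a common basic set, not the output of any particular profiling tool. The phone-number sample data is made up.

```python
import re
from collections import Counter

def value_pattern(value: str) -> str:
    """Reduce a value to its shape: every digit becomes 9, every ASCII letter becomes A."""
    return re.sub(r"[A-Za-z]", "A", re.sub(r"\d", "9", value))

def profile_column(values: list) -> dict:
    """Compute simple profile statistics for one column of raw (string) source values."""
    present = [v for v in values if v not in (None, "")]
    patterns = Counter(value_pattern(str(v)) for v in present)
    return {
        "count": len(values),
        "nulls": len(values) - len(present),  # None or empty string
        "distinct": len(set(present)),
        "min": min(present, default=None),
        "max": max(present, default=None),
        "top_patterns": patterns.most_common(3),
    }

phone_numbers = ["555-1234", "5551234", "", None, "555-1234", "N/A"]
print(profile_column(phone_numbers))
```

Here, the three different patterns reveal inconsistent phone formats and a placeholder value (`N/A`). These are the kinds of findings that feed the data analysis step.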

Data analysis - Data analysis is used to analyze the results of the profiled data. This makes it easier to identify data quality problems, such as missing, invalid, inconsistent, or redundant data. The results of this assessment must be captured correctly. They become the means of communication between the source and data warehouse teams for addressing all outstanding issues. The later transformation activities also benefit from this analysis, because the perceived data quality issues can be addressed proactively. Source-to-target mapping activities depend largely on the quality of the source analysis.

Source analysis - In source analysis, the approach should focus not only on the sources "as they are" but also on their environment: obtaining appropriate source documentation, the future roadmap for the source applications, an idea of current source (data) problems, and the corresponding data models (E/R schemas). It is essential to meet frequently with the source owners to discover early any changes that may affect the data warehouse and its associated ETL processes.

Cleansing - In the cleansing phase, you correct the errors found, based on a predefined set of metadata rules. Here you must distinguish between complete and partial rejection of a record. You must also allow manual correction of the problem or fixing of the data, for example, correcting inaccurate data fields or adjusting the data format.
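Rule-driven cleansing with complete and partial rejection might look like the following sketch. The rule list stands in for the "predefined set of metadata rules". Its fields, checks, and the action names `reject`, `fix`, and `nullify` are all hypothetical. A `reject` rule drops the whole record. A `fix` rule applies an automatic correction. Any other failure blanks only the bad field and flags it for manual correction.

```python
import re

# Hypothetical metadata rules: field, check, action on failure, optional fix.
RULES = [
    {"field": "customer_id", "check": lambda v: bool(v), "action": "reject"},
    {"field": "zip", "check": lambda v: bool(re.fullmatch(r"\d{5}", v or "")),
     "action": "fix", "fix": lambda v: re.sub(r"\D", "", v or "")},
    {"field": "country", "check": lambda v: v in {"US", "DE", "IN"}, "action": "nullify"},
]

def cleanse(record, rules):
    """Apply the rules; return (clean record or None, issues for manual follow-up)."""
    clean, issues = dict(record), []
    for rule in rules:
        name = rule["field"]
        value = clean.get(name)
        if rule["check"](value):
            continue
        if rule["action"] == "reject":  # complete rejection of the record
            issues.append((name, value, "record rejected"))
            return None, issues
        if rule["action"] == "fix":
            fixed = rule["fix"](value)
            if rule["check"](fixed):  # only accept a fix that passes the rule
                clean[name] = fixed
                issues.append((name, value, f"corrected to {fixed!r}"))
                continue
        # Partial rejection: blank only the bad field and flag it for manual correction.
        clean[name] = None
        issues.append((name, value, "field rejected"))
    return clean, issues

print(cleanse({"customer_id": "C1", "zip": "12-345", "country": "XX"}, RULES))
print(cleanse({"customer_id": "", "zip": "12345", "country": "US"}, RULES))
```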

E-MPAC-TL is an extended ETL concept that tries to balance the requirements correctly with the reality of the systems, tools, metadata, problems, technical limitations, and, above all, the data (quality) itself.

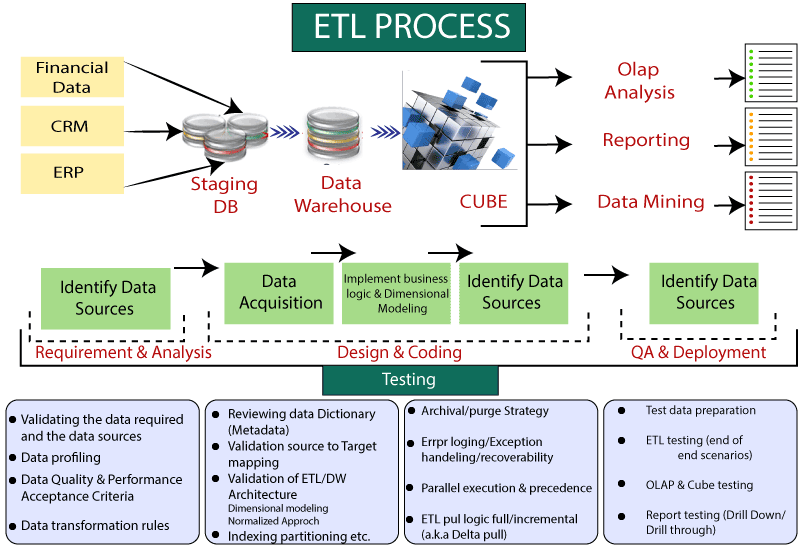

ETL Testing

What is ETL Testing?

ETL testing is used to ensure that the data loaded from source to target after business transformation is accurate. ETL testing also includes verifying the data at the various stages between the source and the target.
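A basic source-to-target check can be sketched by fingerprinting each row on both sides and comparing the fingerprints by key. This sketch assumes that the compared columns are expected to pass through unchanged. For transformed columns, you would compare against the expected result of the transformation rule instead. The table names are hypothetical. They are interpolated into the SQL, so they must come from trusted configuration.

```python
import hashlib
import sqlite3

def row_fingerprints(conn, table, key, columns):
    """Map each key value to a SHA-256 digest of the compared columns."""
    sql = f"SELECT {key}, {', '.join(columns)} FROM {table}"
    fingerprints = {}
    for row in conn.execute(sql):
        payload = "|".join("" if v is None else str(v) for v in row[1:])
        fingerprints[row[0]] = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return fingerprints

def compare_source_target(conn, source, target, key, columns):
    """Report keys missing from the target, unexpected extra keys, and changed rows."""
    src = row_fingerprints(conn, source, key, columns)
    tgt = row_fingerprints(conn, target, key, columns)
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "extra_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE src_customer (id INTEGER, name TEXT, city TEXT);
CREATE TABLE dw_customer  (id INTEGER, name TEXT, city TEXT);
INSERT INTO src_customer VALUES (1, 'Ann', 'NY'), (2, 'Bob', 'LA'), (3, 'Cid', 'SF');
INSERT INTO dw_customer  VALUES (1, 'Ann', 'NY'), (2, 'Bob', 'SEA'), (4, 'Dee', 'TX');
""")
report = compare_source_target(conn, "src_customer", "dw_customer", "id", ["name", "city"])
print(report)  # {'missing_in_target': [3], 'extra_in_target': [4], 'mismatched': [2]}
```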

ETL Testing process

As with other testing processes, ETL testing also goes through different phases. ETL testing has five stages:

- Identify data sources and requirements.

- Data acquisition.

- Implementation of business logic and dimensional modeling.

- Build and populate data.

- Build reports.

Advantages of ETL Testing

1. ETL extracts or receives data from different data sources at the same time.

2. ETL can store data from various sources in a single generalized target or in separate targets at the same time.

3. ETL can load multiple types of targets at the same time.

4. ETL can extract the required business data from various sources and load it into different targets in the desired form.

5. ETL can perform any data transformation that the business requires.

Disadvantages of ETL Testing

1. Only data-oriented developers or database analysts are able to do ETL testing.

2. ETL testing is not suited to real-time or on-demand access because it does not provide a fast response.

3. An ETL project can take months to complete.

4. ETL testing can take a long time to produce results.

ETL tools

ETL tools are software used to perform the ETL processes, i.e., Extract, Transform, and Load. Today, a large amount of data is generated from multiple sources, such as organizations, social sites, and e-commerce sites.

With the help of ETL tools, we can implement all three ETL processes: the data collected from multiple sources is extracted, transformed, and finally loaded into the data warehouse. This refined data is used for business intelligence.

Types of ETL Tools

- Talend Open Studio for Data Integration

- RightData

- Informatica Data Validation

- QuerySurge

- iCEDQ

- Datagaps ETL Validator

- QualiDI

- Codoid's ETL Testing Services

- Data Centric Testing

- SSISTester

- TestBench

- GTL QAceGen

- Zuzena Automated Testing Service

- DbFit

- AnyDbTest

- 99 Percentage ETL Testing

Talend Data Integration

Talend Data Integration is an open-source tool that facilitates ETL testing. It includes all ETL testing features and an additional continuous delivery mechanism. With the Talend Data Integration tool, the user can run ETL tasks on remote servers with different operating systems.

ETL testing makes sure that data is transferred from the source system to the target system without any loss of data and in compliance with the conversion rules.

Right Data

RightData is an ETL testing and self-service data integration tool. It is designed to help business and technical teams ensure data quality and automate data quality control processes.

Its interface allows users to validate and reconcile data between datasets, regardless of the data model or the type of data source.

RightData is designed to work efficiently with complex, large-scale databases.

Informatica

Informatica Data Validation is a GUI-based ETL testing tool used to test Extract, Transform, and Load (ETL) processes. The testing compares tables before and after data migration. This type of test ensures data integrity, meaning that the full volume of data is loaded correctly and in the format expected by the target system.

QuerySurge

The QuerySurge tool is specifically designed for testing big data and data warehouses. It ensures that the data extracted from the source system and loaded into the target system is correct and matches the expected format. QuerySurge quickly identifies any issues or differences.

iCEDQ

iCEDQ is an automated ETL testing tool designed to address the problems in data-driven projects such as data warehousing and data migration. iCEDQ performs verification and reconciliation between the source and target systems. This ensures data integrity after migration and avoids loading invalid data into the target system.

Datagaps ETL Validator

The ETL Validator tool is designed for ETL testing and big data testing. It is a solution for data integration projects. Testing such projects involves a wide variety of data, large volumes, and many sources. ETL Validator helps overcome these challenges through automation, which reduces cost and effort.

QualiDI

QualiDI is an automated testing platform that provides end-to-end and ETL testing. It automates ETL testing and improves its effectiveness, which shortens the test cycle and enhances data quality. QualiDI identifies bad and non-compliant data, and it reduces the regression cycle and the data validation effort.

Codoid's ETL Testing Services

Codoid's ETL testing and data warehouse services facilitate data migration and data validation from the source to the target. ETL testing helps remove bad data, data errors, and data loss while data is transferred from the source to the target system. It quickly identifies data errors and other common errors that occur during the ETL process.

Data-Centric Testing

The Data-Centric Testing tool performs robust data verification to prevent failures such as data loss or data inconsistency during data conversion. It compares the data between systems and ensures that the data loaded into the target system matches the source system in terms of data volume, data type, and format.

SSISTester

SSISTester is a framework that facilitates unit testing and integration testing of SSIS packages. It helps create ETL processes in a test-driven environment and helps identify errors during development. Implementing ETL processes produces many packages, each of which must be tested during unit testing. An integration test is a "direct test."

Benefits of ETL Tools

Using ETL tools is more effective than the traditional method of moving data from a source database to a destination data repository.

Easy to use - The main advantage of ETL tools is that they are easy to use. The tool itself identifies the data sources and the rules for extracting and processing the data, then performs the process and loads the data. ETL tools eliminate the need for hand coding, where we would otherwise have to write the processes and code ourselves.

Operational Flexibility - Many data warehouses are fragile and cause operational problems. ETL tools have built-in error-handling functionality, which helps data engineers develop resilient and well-instrumented ETL processes.

Visual Flow - ETL tools rely on a GUI (Graphical User Interface) and provide a visual flow of the system logic. The graphical interface lets us define rules with drag and drop to describe the flow of data in the process.

Performance - The structure of an ETL platform simplifies the process of building a high-quality data warehousing system. Many ETL tools come with performance optimization techniques such as block recognition and symmetric multiprocessing.

Enhances Business Intelligence - ETL tools improve data access and simplify extraction, transformation, and loading. They improve access to information that directly affects strategic and operational decisions based on data-driven facts. ETL also enables business leaders to retrieve data based on their specific needs and make decisions accordingly.

How to make Test Cases in ETL Testing

The primary objective of ETL testing is to determine whether the extracted and transformed data is loaded correctly from source to destination. ETL testing relies on two documents:

ETL Mapping Sheets: This document contains all the information about the source and destination tables, including every column and its lookups in reference tables. It helps in writing the large SQL queries needed during ETL testing.

Database schema for the source and destination tables: It must be kept up to date in the mapping sheet so that data validation can be performed.
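A mapping sheet can drive the generation of the SQL queries used in ETL testing. This sketch assumes a hypothetical mapping sheet held as a list of dicts. Each entry gives a source expression (the transformation rule) and a target column. The code builds a "source minus target" query, which uses `EXCEPT` in SQLite and standard SQL, and `MINUS` in Oracle. Any row that the query returns was not loaded as the mapping specifies.

```python
import sqlite3

# Hypothetical mapping-sheet rows: source expression (with its rule) -> target column.
MAPPING = [
    {"src_table": "src_customer", "src_expr": "cust_id", "tgt_table": "dw_customer", "tgt_col": "customer_key"},
    {"src_table": "src_customer", "src_expr": "UPPER(TRIM(name))", "tgt_table": "dw_customer", "tgt_col": "customer_name"},
]

def build_minus_query(mapping):
    """Build a source-minus-target query from one table's mapping rows."""
    src_table = mapping[0]["src_table"]
    tgt_table = mapping[0]["tgt_table"]
    src_cols = ", ".join(m["src_expr"] for m in mapping)
    tgt_cols = ", ".join(m["tgt_col"] for m in mapping)
    return f"SELECT {src_cols} FROM {src_table} EXCEPT SELECT {tgt_cols} FROM {tgt_table}"

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE src_customer (cust_id INTEGER, name TEXT);
CREATE TABLE dw_customer (customer_key INTEGER, customer_name TEXT);
INSERT INTO src_customer VALUES (1, ' ann '), (2, 'bob');
INSERT INTO dw_customer VALUES (1, 'ANN'), (2, 'bob');
""")
query = build_minus_query(MAPPING)
print(query)
print(conn.execute(query).fetchall())  # [(2, 'BOB')]: customer 2 was loaded without UPPER()
```

In practice, the reverse query (target minus source) is usually run as well, to catch unexpected extra rows in the target.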

How to install Talend

Talend is an ETL tool with a free version that you can download to start building your project. Let's begin.

Pre-requisite

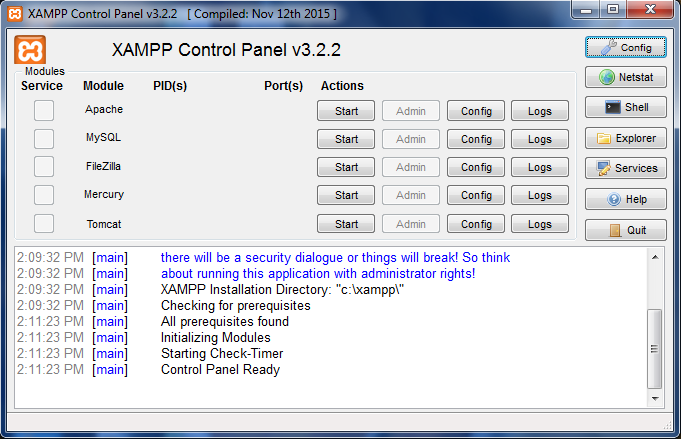

The prerequisite for this Talend setup is XAMPP, so let us install XAMPP first.

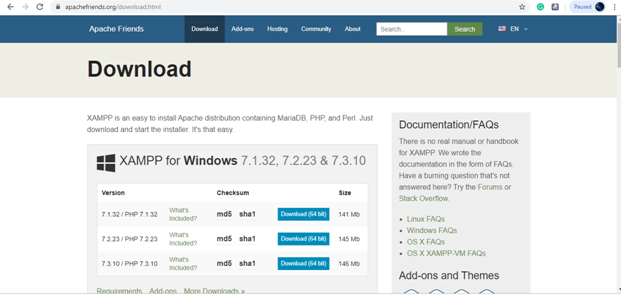

Search Google for XAMPP and click the download link. Make sure you select the right download for your operating system (Windows, Linux, or macOS) and its architecture (32-bit or 64-bit).

Steps for downloading XAMPP

Link for the XAMPP download:

https://www.apachefriends.org/download.html

1. Click on the download button.

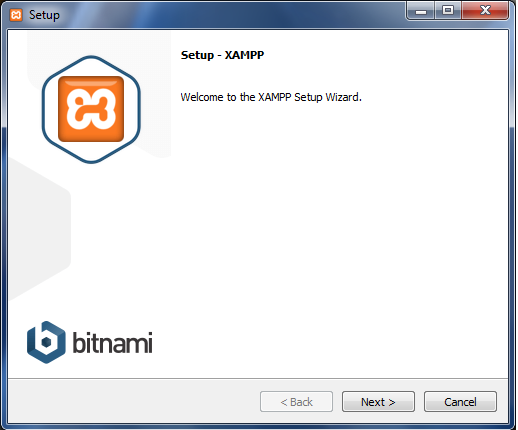

2. First, you will see a warning like this. Click Yes.

3. Click Next.

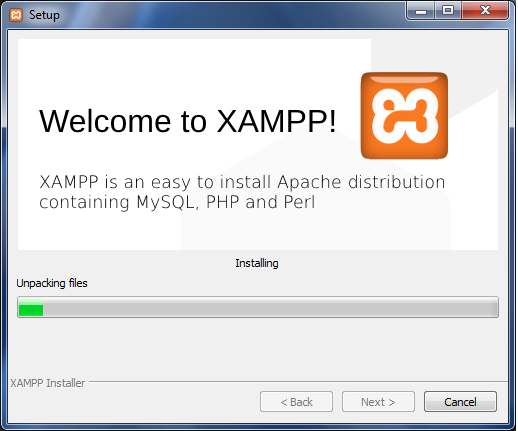

4. The XAMPP installation now starts. Wait for it to complete.

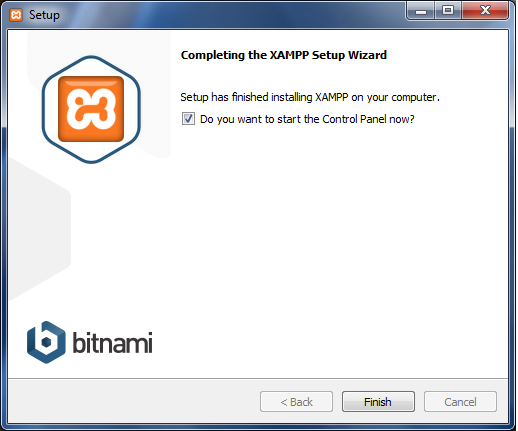

5. The installation of the XAMPP web server is complete.

6. Click the Finish button.

7. The XAMPP control panel then starts.

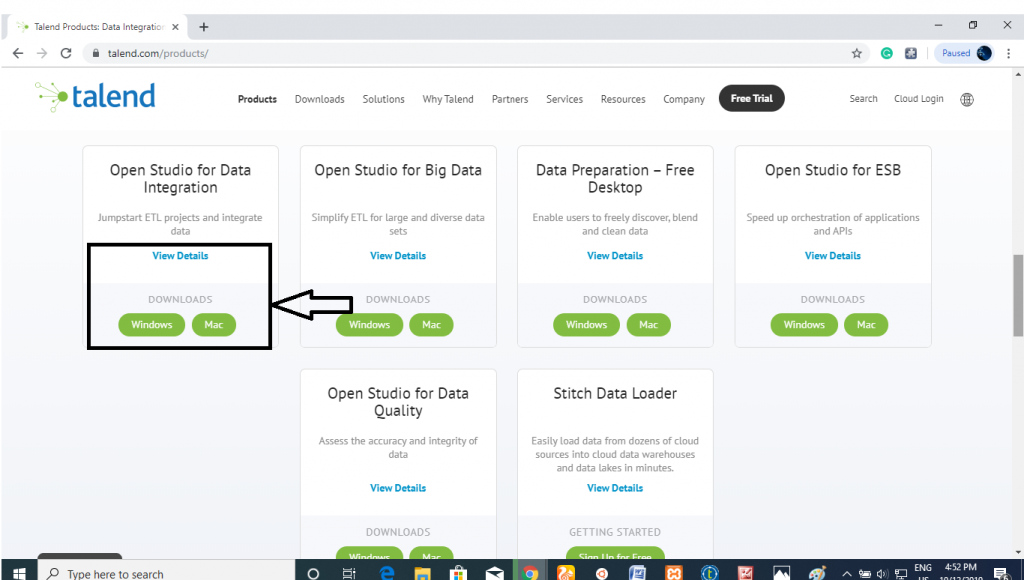

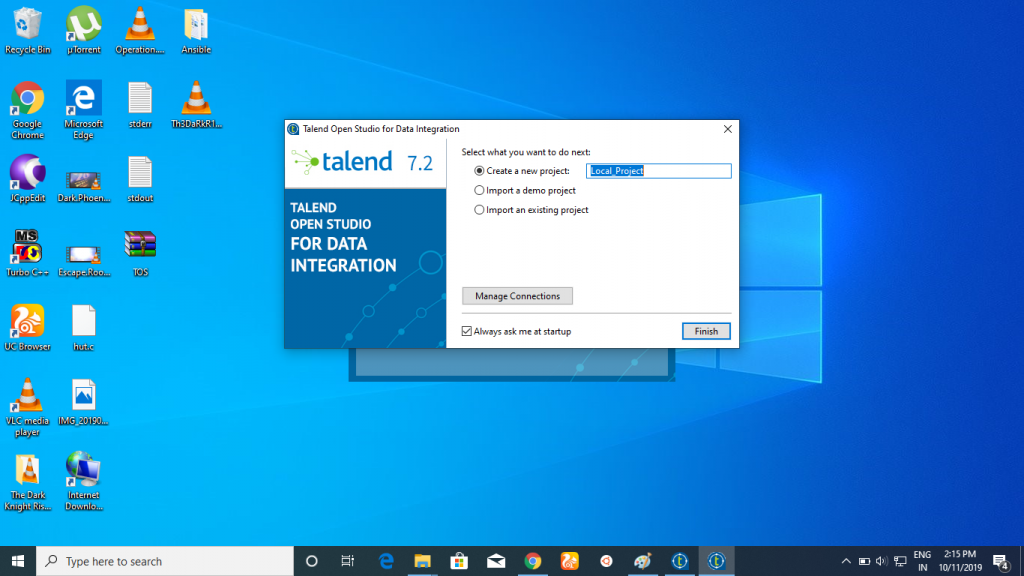

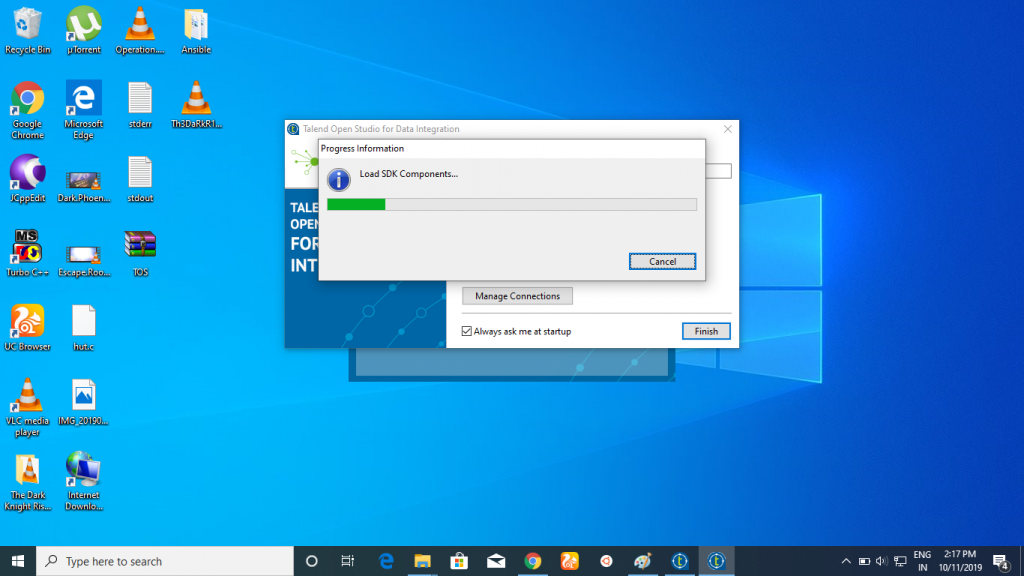

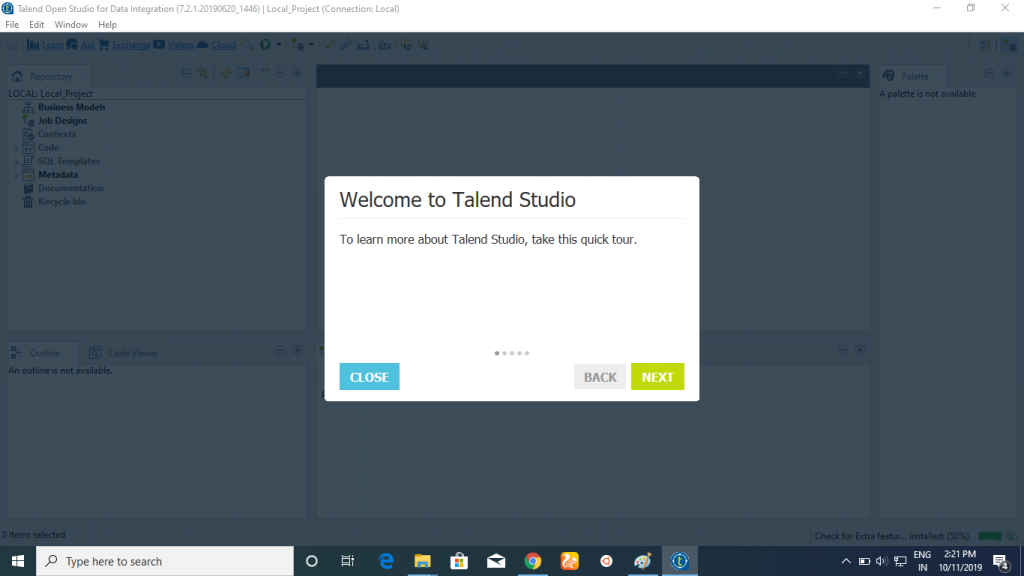

Steps for downloading Talend for Data Integration

Link for the Talend download:

https://www.talend.com/products/data-integration/data-integration-open-studio/

1. Click the Windows download.

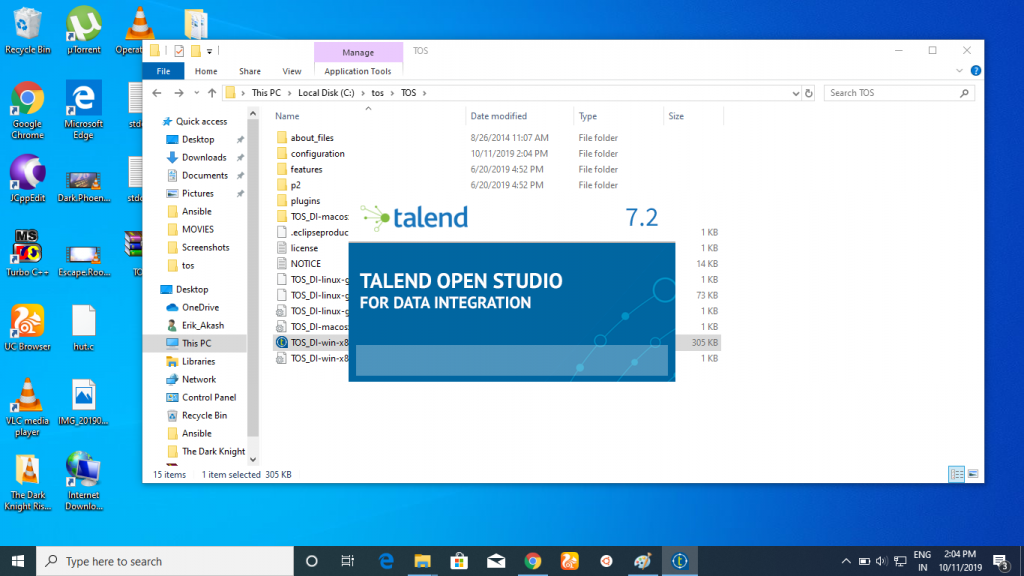

2. Talend opens quickly. Make sure you have an active internet connection when you launch Talend.

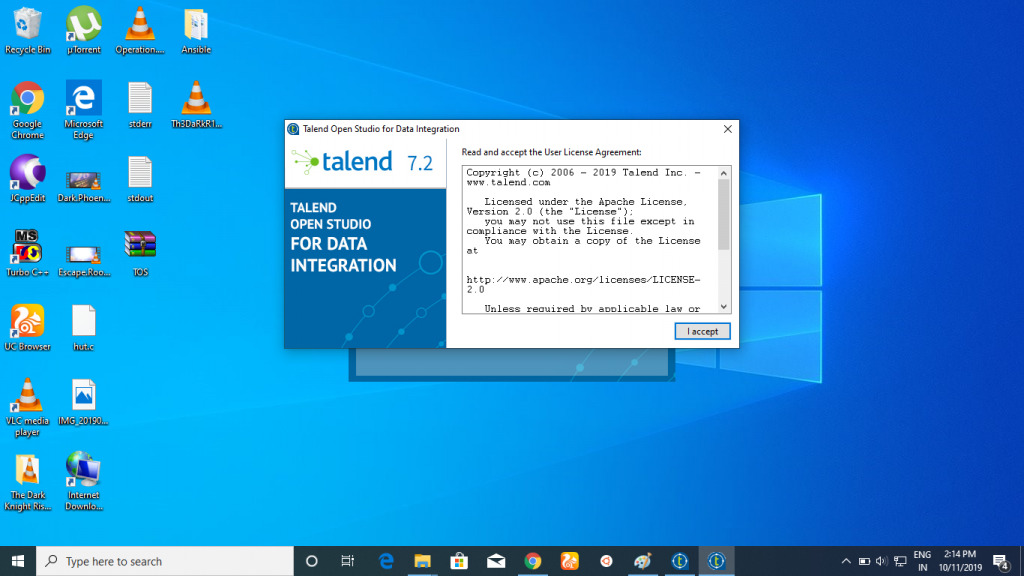

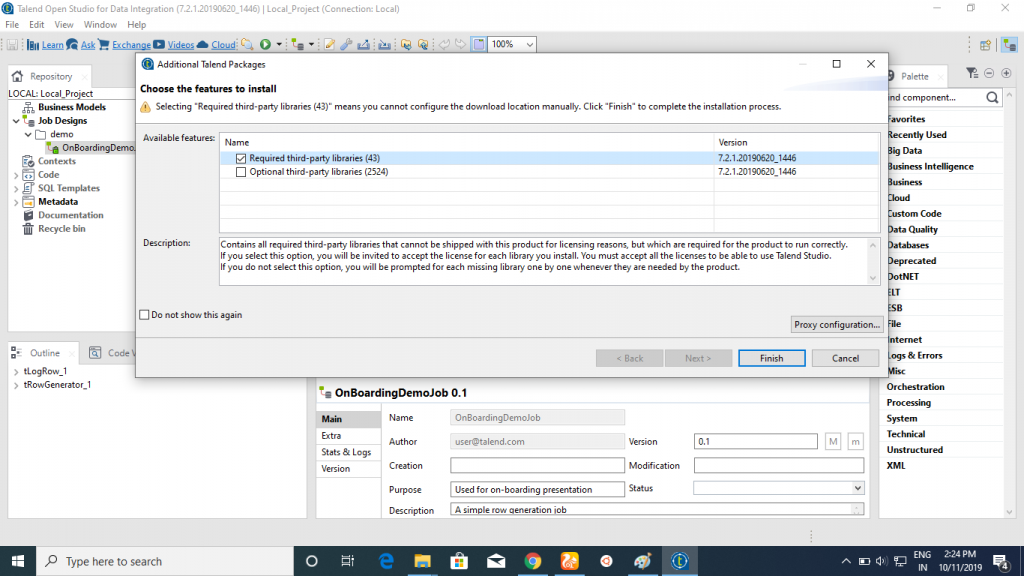

3. Click on, I accept.

4. Click on Finish.

5. Click Next on each of the following screens.

6. Click on Close.

7. Click on Finish.

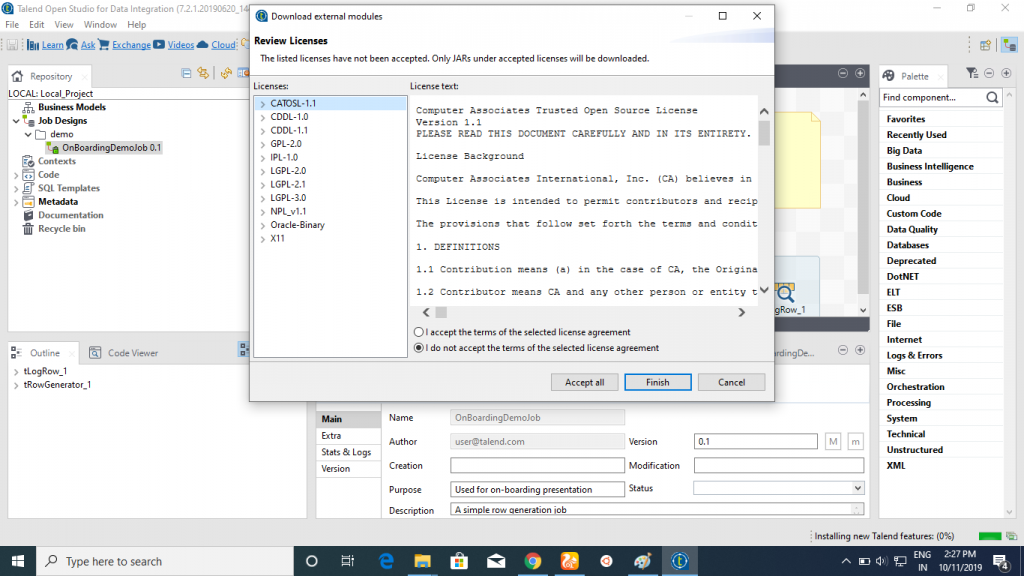

8. Click on Accept all and then Finish.

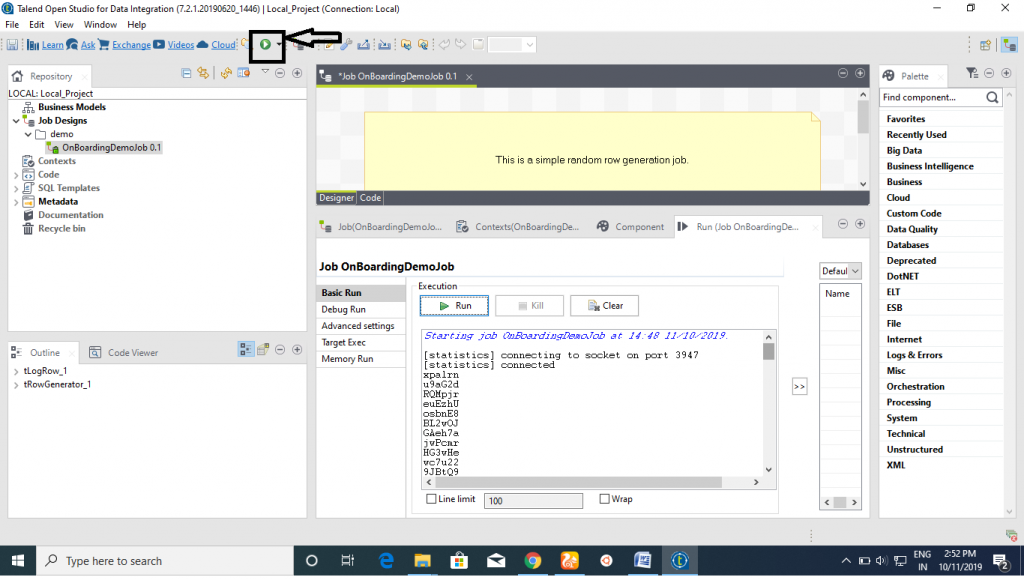

9. Click Run to check whether Talend was installed properly.

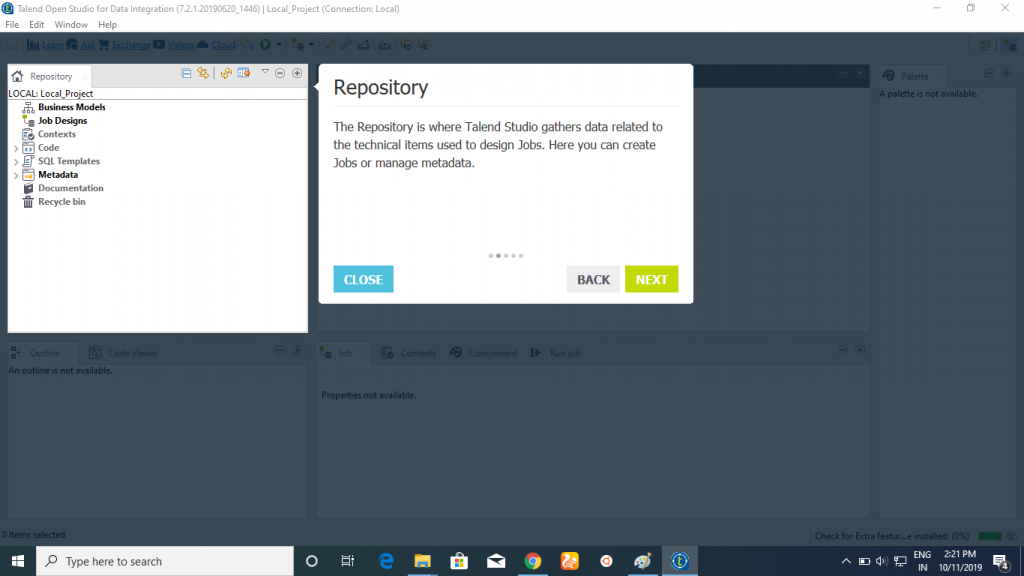

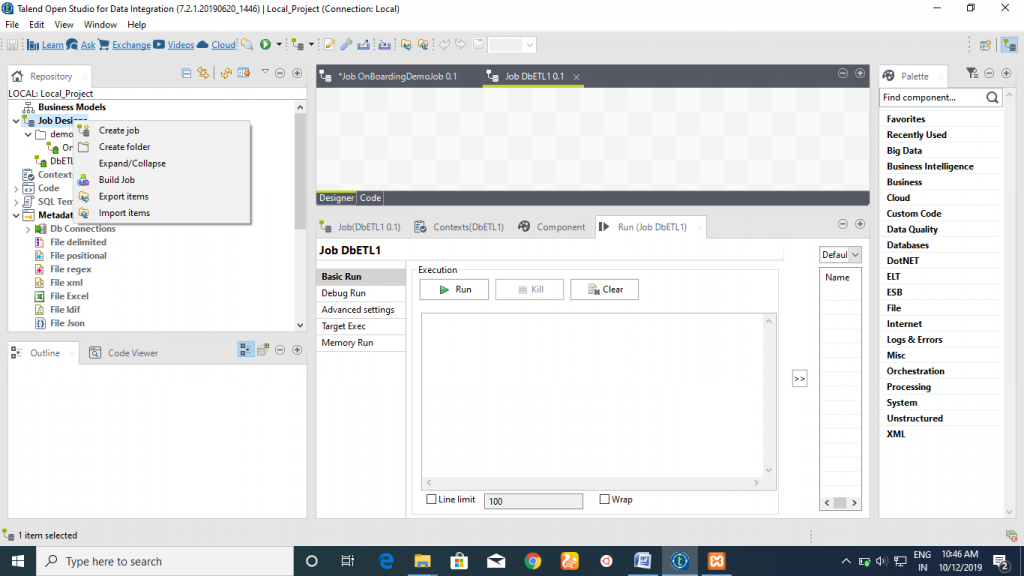

Steps for connecting Talend with XAMPP Server:

1. Start the XAMPP server.

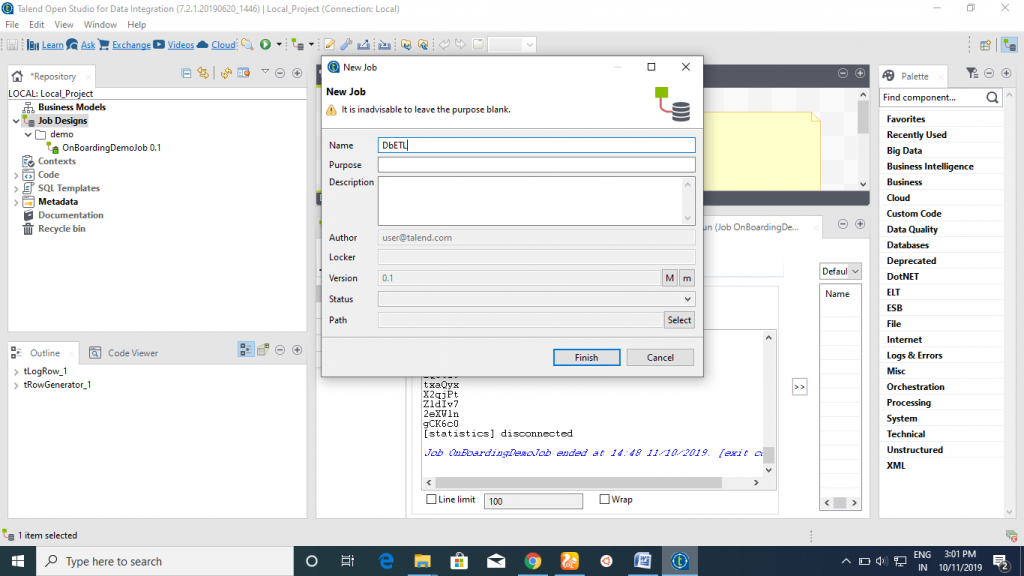

2. Click on the Job Design. Then click on the Create Job.

3. Fill in the Name field. Click Finish.

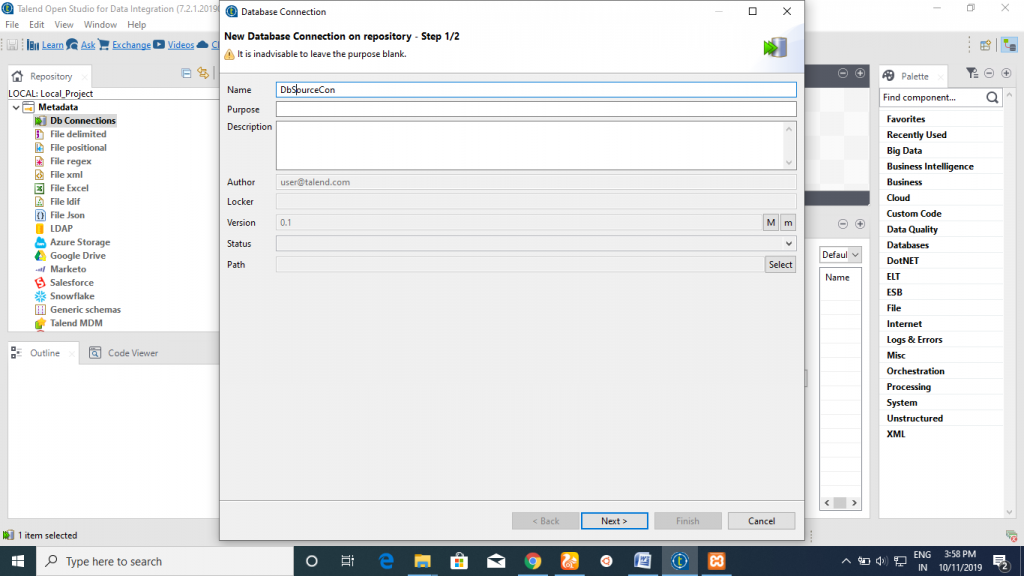

4. Then click Metadata. Under it, you will find DbConnection. Right-click DbConnection, click Create Connection, and a page opens. Click Next.

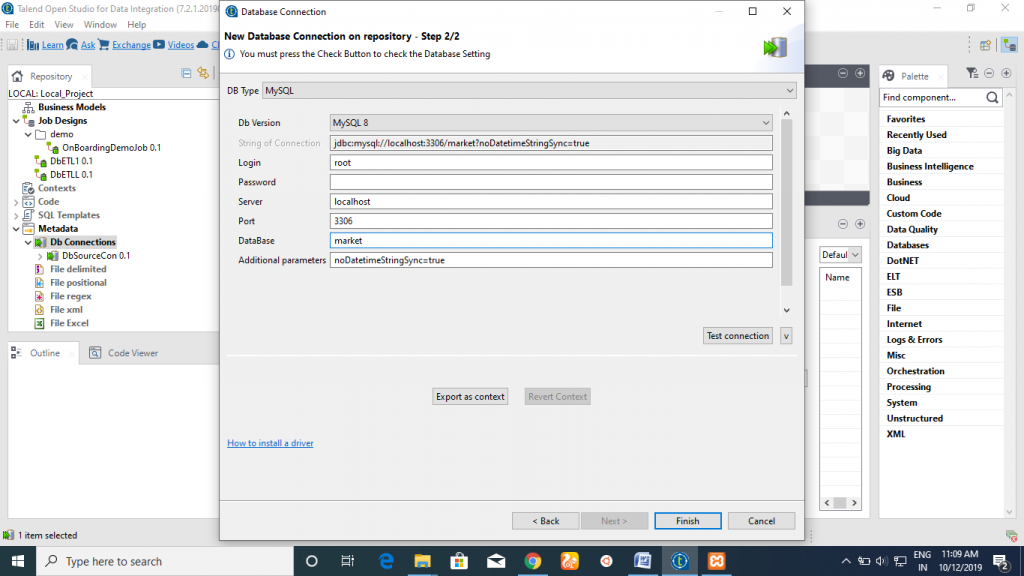

5. Fill in the required fields. Click Test Connection.

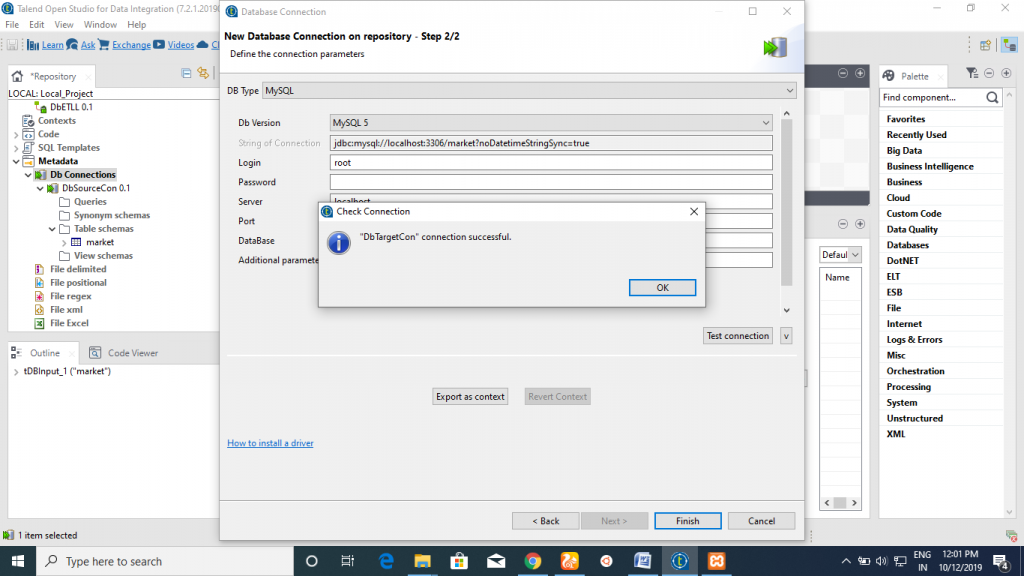

6. When the connection succeeds, click Finish.

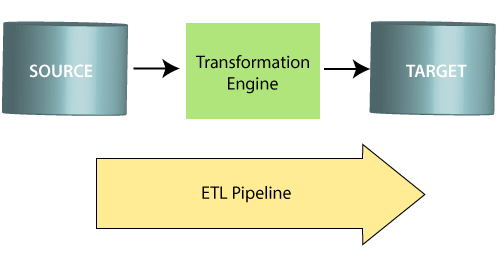

ETL Pipeline

An ETL pipeline refers to a collection of processes that extract data from an input source, transform it, and load it into a destination, such as a database or data warehouse, for analysis, reporting, and data synchronization.

Extract - Data must be extracted from various sources, such as business systems, APIs, marketing tools, sensor data, and transaction databases. Some of these data types are structured outputs of widely used systems, while others are semi-structured, such as JSON server logs.

Transform - In the second step, the data is transformed into the format used by the different applications: it is converted from the form in which it is stored into the form that the target application requires.

Load - The data is now in a consistent format and ready to load into the data warehouse. From then on, you can retrieve any particular data and compare it against any other part of the data.

Data warehouses can be updated automatically or manually.
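A small pipeline over semi-structured input can be sketched with chained generators. This example assumes hypothetical JSON-lines server logs with `ts`, `path`, `status`, and `ms` keys, plus an in-memory SQLite table `web_requests` as the destination. Malformed lines are skipped, and missing keys become NULL in the fixed output format.

```python
import json
import sqlite3
from datetime import datetime, timezone

# Hypothetical semi-structured source: one JSON object per line, like server logs.
RAW_LOG_LINES = [
    '{"ts": 1700000000, "path": "/checkout", "status": 200, "ms": 120}',
    '{"ts": 1700000005, "path": "/cart", "status": 500}',
    'not json at all',
]

def extract(lines):
    """Extract: parse each line, skipping records that are not valid JSON."""
    for line in lines:
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            continue

def transform(events):
    """Transform: map each event onto the fixed shape that the target table expects."""
    for e in events:
        yield (
            datetime.fromtimestamp(e["ts"], tz=timezone.utc).isoformat(),
            e.get("path", ""),
            int(e.get("status", 0)),
            e.get("ms"),  # absent in some events, stored as NULL
        )

def load(conn, rows):
    """Load: append the fixed-format rows to the destination table."""
    conn.execute("CREATE TABLE IF NOT EXISTS web_requests (ts TEXT, path TEXT, status INTEGER, ms INTEGER)")
    with conn:
        conn.executemany("INSERT INTO web_requests VALUES (?, ?, ?, ?)", rows)

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_LOG_LINES)))
print(conn.execute("SELECT COUNT(*), SUM(status = 500) FROM web_requests").fetchone())  # (2, 1)
```

Because each stage is a generator, records stream through the pipeline one at a time instead of being held in memory between stages.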

ETL Files

ETL files are log files created by the Microsoft Tracelog software application. Microsoft stores these event logs in a binary file format, and in Microsoft operating systems the kernel creates the records. ETL logs contain information about disk access and page faults, Microsoft operating system performance, and high-frequency events.

The Eclipse Open Development Platform also uses the .etl file extension for files that it creates.

ETL files are stored on disk, and the data they contain can change over time. When a tracing session is first configured, its settings determine how the log files are stored and what data is recorded. Some logs are circular: when the file reaches its maximum size, the oldest data is overwritten with new data. Windows writes information to ETL files in several situations, such as when the system shuts down or when updates are installed while another user is logged in.

ETL vs. Database Testing

Both ETL testing and database testing perform data validation. ETL testing works on the data in a data warehouse, whereas database testing works on transactional systems, where the data comes from multiple sources.

There are some significant differences between ETL testing and database testing:

Database type - Database testing is used on OLTP systems, and ETL testing is used on OLAP systems.

Modeling - Database testing uses the ER method, whereas ETL testing uses the multidimensional approach.

Data Type - Database testing uses normalized data with joins, whereas ETL testing uses denormalized data with fewer joins, more indexes, and aggregations.

Business Need - Database testing is used to integrate data from different sources, whereas ETL testing is used for analytical reporting and forecasting.

Primary Goal - Database testing focuses on data validation and integration, whereas ETL testing focuses on extraction, transformation, and loading for business intelligence.

ETL LISTED MARK

Intertek's ETL certification program is designed to help manufacturers test, certify, and bring products to market faster. ETL grew out of a culture of innovation: the ETL program began in Thomas Edison's lab.

UL and ETL are both Nationally Recognized Testing Laboratories (NRTLs). An NRTL provides independent product certification and quality assurance. Electrical equipment requires certification, so before buying electronics it is important to check for the ETL or UL mark.

UL develops testing standards and tests products against them. ETL testing is done according to UL standards.

The ETL Listed Mark indicates that a product has been independently tested and meets the published standard.

Products that carry the ETL Listed Mark include:

- Household electrical products.

- Medical equipment.

- Building materials.

- Telephone and communication equipment.

- Industrial equipment.

ETL verification

ETL verification provides a product certification mark that confirms the product meets specific design and performance standards. ETL certification assures consumers that a product has reached a high standard of quality and reliability.