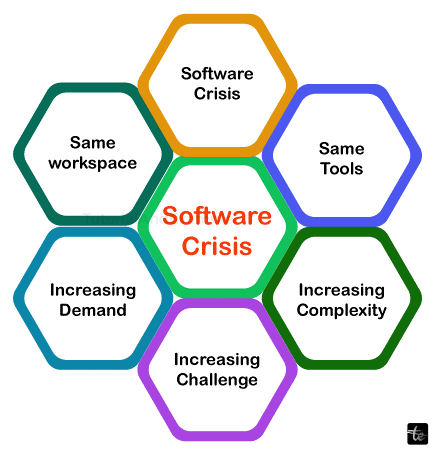

What is a Software Crisis?

In the early days of computing, the term "software crisis" described the difficulty of writing useful and efficient computer programs in the required time. The crisis was caused by the rapid increase in computer power and by the complexity of the problems that could now be attempted but not yet solved. As software grew more complex, many problems arose because the methods available at the time were inadequate.

History

The term "software crisis" was coined by some attendees of the 1968 NATO Software Engineering Conference in Garmisch, Germany. Edsger Dijkstra referred to the same problem in his 1972 Turing Award lecture.

In that lecture, Dijkstra argued that the main cause of the software crisis is that machines have become several orders of magnitude more powerful. Put bluntly: as long as there were no machines, programming was no problem at all; when we had a few weak computers, programming became a mild problem; and now that we have gigantic computers, programming has become an equally gigantic problem.

Reasons

The causes of the software crisis were linked to the overall complexity of the hardware and of the software development process.

The problem showed up in a number of ways:

- Projects ran over budget.

- Projects ran over schedule.

- Software was very inefficient.

- Software was of low quality.

- Software frequently failed to meet its requirements.

- Projects were unmanageable and code was hard to maintain.

- Software was never delivered.

The underlying reason is that improvements in computing power outpaced programmers' ability to use that power effectively. Over the last several decades, various processes and methodologies have been developed to improve software quality management, procedural and object-oriented programming being only two examples. Nevertheless, projects that are large, complicated, poorly specified, or that involve unfamiliar aspects remain vulnerable to large, unanticipated problems.

Optimization of Programs

In computer science, program optimization, code optimization, or software optimization is the process of modifying a software system so that some aspect of it works more efficiently or uses fewer resources. In general, a computer program may be optimized to run faster, to need less memory or other resources, or to draw less power.

Overview

Although the words "optimization" and "optimal" share the same root, the process of optimization rarely produces a truly optimal system. A system can generally be made optimal only with respect to a given quality metric, which may conflict with other possible metrics, rather than in absolute terms. As a result, an optimized system is typically optimal for only one application or one audience. For example, a program can be made to complete a task faster at the expense of increased memory use.

Conversely, in an application where memory is scarce, one might deliberately choose a slower algorithm in order to use less memory. There is rarely a "one size fits all" design that works well in every situation, so engineers make trade-offs to optimize the attributes that matter most. Furthermore, the effort required to make a piece of software completely optimal, incapable of any further improvement, is almost always more than the resulting benefit would justify. The optimization process is therefore usually halted before a fully optimal solution is reached. Fortunately, the biggest improvements often come early in the process.

Even for a given quality metric (such as execution speed), most optimization techniques only improve the result; they make no claim to produce optimal output. Finding genuinely optimal output is the province of superoptimization.
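The time-versus-memory trade-off described above can be sketched in a few lines. The example below is an illustration only: the function names and the choice of bit counting as the task are assumptions, not taken from the text. One version uses no extra memory but does more work per call; the other spends 256 table entries to do less work per call.

```python
def popcount_slow(x: int) -> int:
    """Count set bits one bit at a time: no extra memory, more steps."""
    count = 0
    while x:
        count += x & 1
        x >>= 1
    return count


# Precomputed answers for every possible byte value (extra memory).
BYTE_POPCOUNT = [popcount_slow(b) for b in range(256)]


def popcount_table(x: int) -> int:
    """Count set bits one byte at a time using the lookup table."""
    count = 0
    while x:
        count += BYTE_POPCOUNT[x & 0xFF]
        x >>= 8
    return count
```

Both functions assume a non-negative integer. Which one is preferable depends on the metric being optimized, and any real speed difference should be measured rather than assumed.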

Optimization Levels

Optimization can take place at several levels. Higher levels usually have a greater impact and are harder to change later in a project; changing them may require significant modifications or a complete rewrite. Optimization can therefore typically proceed by refinement from higher to lower levels, with early gains being larger and achieved with less effort, and later gains being smaller and requiring more effort. In some cases, however, overall performance depends on the performance of very low-level parts of a program, and small changes at a late stage, or early attention to low-level details, can have an outsized impact.

Efficiency is usually considered to some degree throughout a project (though this varies greatly), but major optimization is often treated as a refinement to be done late, if at all. Longer-running projects typically go through cycles of optimization, in which improving one area reveals limitations in another. These cycles usually end when performance is acceptable or when further gains become too small or too costly.

Performance is part of a program's specification: a program that is unusably slow is not fit for purpose. For example, a video game running at 60 Hz (frames per second) is acceptable, but 6 frames per second is unacceptably choppy. Performance is therefore a consideration from the start, to ensure that the architecture can deliver adequate performance, and early prototypes need roughly acceptable performance to give confidence that the finished product will, with optimization, achieve it.

This step is sometimes skipped in the belief that optimization can always be done later. The result can be prototype systems that are far too slow, often by an order of magnitude or more; systems that ultimately fail because they cannot architecturally reach their performance goals, such as the Intel 432 (1981); or systems that take years of work to reach acceptable performance, such as Java (1995), which only did so with HotSpot (1999). The degree to which performance changes between prototype and production system, and how amenable it is to optimization, can be a significant source of risk and uncertainty.

Level of Design

At the highest level, the design may be optimized to make the best use of the available resources, given the goals, constraints, and expected load. The architectural design of a system has a major effect on its performance. A system that is bound by network latency, for instance, would be optimized to minimize network trips, ideally making a single request (or none at all, in the case of a push protocol) rather than several round trips.

The choice of design depends on the goals. For example, if fast compilation is the primary goal, a one-pass compiler is faster than a multi-pass compiler (assuming the same amount of work). If, on the other hand, the speed of the output code is the primary goal, a slower multi-pass compiler serves that goal better, even though compilation itself takes longer.

The platform and programming language are also chosen at this level, and changing them later typically requires a complete rewrite. A modular system may allow only part of the system to be rewritten; for instance, performance-critical portions of a Python program may be rewritten in C. In a distributed system, the architecture (client-server, peer-to-peer, etc.) is chosen at the design stage and can be difficult to change, especially if some components (old clients, for example) cannot all be replaced at the same time.
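The effect of reducing round trips can be illustrated with a small simulation. Everything here is hypothetical: `FakeServer`, `get`, and `get_many` stand in for a remote service and are not a real library API. The sketch only counts how many requests each strategy would send.

```python
class FakeServer:
    """Hypothetical stand-in for a remote service; counts round trips."""

    def __init__(self, data):
        self._data = dict(data)
        self.round_trips = 0

    def get(self, key):
        """Fetch one value: one round trip per call."""
        self.round_trips += 1
        return self._data[key]

    def get_many(self, keys):
        """Fetch several values in a single round trip."""
        self.round_trips += 1
        return {key: self._data[key] for key in keys}


def fetch_one_by_one(server, keys):
    """Latency-heavy design: one request per key."""
    return {key: server.get(key) for key in keys}


def fetch_batched(server, keys):
    """Latency-friendly design: one request for all keys."""
    return server.get_many(keys)
```

With three keys, the first strategy makes three round trips and the second makes one. On a latency-bound link the total waiting time would scale roughly with that count, assuming the batched response is not itself much slower to produce.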

Data Structures and Algorithms

Once the overall design is settled, the next step is to choose good algorithms and data structures and to implement them efficiently. After design, the choice of algorithms and data structures affects efficiency more than any other aspect of the program. Data structures are generally harder to change than algorithms, because a data structure and the assumptions about its performance tend to be used throughout the program. This can be mitigated by using abstract data types in function definitions and confining the concrete data structure definitions to a small number of places.

For algorithms, the main task is to ensure that they are constant O(1), logarithmic O(log n), linear O(n), or in some cases log-linear O(n log n) in the size of the input, in both space and time. Algorithms with quadratic complexity O(n^2) fail to scale, and even linear algorithms cause problems when called repeatedly, so they are typically replaced with constant or logarithmic algorithms where possible.

Beyond the asymptotic order of growth, constant factors matter. An asymptotically slower algorithm may be faster or smaller (because it is simpler) than an asymptotically faster one when both are given small inputs, which may well be the case in practice. Because this trade-off changes with input size, a hybrid algorithm often gives the best performance.

A general technique for improving performance is to avoid work. A good example is using a fast path for common cases, which improves performance by skipping unnecessary work. For instance, one may use a simple text layout algorithm for Latin text and switch to a more complex layout algorithm only for complex scripts such as Devanagari. Another important technique is caching, particularly memoization, which avoids redundant computation. Because caching is so important, systems often have many levels of cache, which can cause problems with memory use and correctness issues from stale caches.
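One way to keep concrete data structures out of function definitions is to write functions against abstract interfaces. The sketch below uses the abstract base classes in Python's `collections.abc`; the function name `total_stock` and the inventory scenario are invented for illustration.

```python
from collections.abc import Iterable, Mapping


def total_stock(inventory: Mapping[str, int], wanted: Iterable[str]) -> int:
    """Sum the stock of the wanted items.

    The function relies only on the abstract Mapping and Iterable
    interfaces, so callers may later swap a dict for another mapping
    type, or a list for a set, without changing this code.
    """
    return sum(inventory.get(name, 0) for name in wanted)
```

A call such as `total_stock({"bolt": 2, "nut": 3}, ["bolt", "nut", "gear"])` works the same way whether the inventory is a plain `dict` or any other `Mapping` implementation.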
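As an illustration of replacing a quadratic algorithm with a linear one, consider checking a list for duplicates. The task and function names are assumptions chosen for the example. The linear version relies on Python's `set`, whose membership test is constant time on average and requires hashable items.

```python
def has_duplicates_quadratic(items):
    """Compare every pair of items: O(n^2) comparisons in the worst case."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_linear(items):
    """Remember items already seen in a set: O(n) on average, O(n) extra space."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```

The linear version trades extra memory for time, so it is also a small instance of the space-time trade-off discussed earlier.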
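A hybrid algorithm of this kind can be sketched as a merge sort that hands small sublists to insertion sort. This is a minimal illustration, not a tuned implementation: the cutoff value of 16 is an assumption, and the right threshold would have to be found by measurement on the target system.

```python
SMALL_CUTOFF = 16  # assumed threshold; tune by measurement


def insertion_sort(items):
    """O(n^2) in general, but simple and quick on very small inputs."""
    result = list(items)
    for i in range(1, len(result)):
        current = result[i]
        j = i - 1
        # Shift larger elements right to make room for `current`.
        while j >= 0 and result[j] > current:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = current
    return result


def hybrid_merge_sort(items):
    """O(n log n) merge sort that switches to insertion sort for small lists."""
    if len(items) <= SMALL_CUTOFF:
        return insertion_sort(items)
    mid = len(items) // 2
    left = hybrid_merge_sort(items[:mid])
    right = hybrid_merge_sort(items[mid:])
    # Merge the two sorted halves.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```

Both functions return a new sorted list and leave their input unchanged.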

Level of Source Code

Beyond general algorithms and their implementation on an abstract machine, concrete choices at the source code level can make a significant difference. For example, on early C compilers, while(1) was slower than for(;;) for an unconditional loop, because while(1) evaluated 1 and then performed a conditional jump that tested whether it was true, whereas for(;;) compiled to an unconditional jump.

Some optimizations, including this one, can now be performed by optimizing compilers. This is an important area where knowledge of compilers and machine code can improve performance, but it depends on the source language, the target machine language, and the compiler settings, and it can be difficult to understand or predict and changes over time. Loop-invariant code motion and return value optimization are examples of optimizations that reduce the need for auxiliary variables and can even result in faster performance by avoiding round-about optimizations.
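Loop-invariant code motion can also be applied by hand when the language implementation is not expected to do it automatically. The sketch below shows the transformation in Python; the function names and the scaling task are assumptions made for illustration.

```python
import math


def scale_naive(values, factor):
    """Recomputes math.sqrt(factor) on every iteration."""
    out = []
    for v in values:
        # The square root does not depend on v, so it is loop-invariant.
        out.append(v * math.sqrt(factor))
    return out


def scale_hoisted(values, factor):
    """Computes the invariant once, before the loop."""
    root = math.sqrt(factor)
    return [v * root for v in values]
```

Both functions return the same results; the second simply does less repeated work. Whether the difference is measurable depends on the cost of the hoisted expression and on the implementation running the code.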