Transaction Processing in DBMS

Introduction to Transaction Processing

Database Management Systems (DBMS) can effectively manage data within a computer system by providing reliable and consistent management of transaction processing. This concept is critical in the world of data management, as it ensures that operations carried out on different databases are always genuine and accurate.

A database transaction is, simply another name for a logical unit of work most transactions involve multiple steps or operations that must either succeed fully and, therefore, be seen as one atomic command (‘all-or-nothing’). The output of transaction processing should observe the ACID properties of Atomicity, Consistency, Isolation, and Durability in order to ensure the reliability and integrity of database transactions.

Atomicity means that a transaction is considered an atomic unit, so all its operations are performed simultaneously or not at all. This ensures that situations do not occur where only a portion of operations within a transaction is accomplished, thus leaving the database in an inconsistent state.

Key Concepts of Transactions

In database management systems (DBMS), a transaction refers to a set of operations that should be performed as an undividable mechanism. Transactions are defined and governed by several key concepts that ensure reliable, consistent data management. Understanding this idea is critical for developing strong and reliable database systems.

Atomicity

The idea of atomicity is fundamental, and it means that the transaction cannot be parted. In case any part of the transaction fails, all parts of this particular trial are rolled back to preserve integrity within a database.

Consistency

Consistency ensures that a transaction takes the database from one valid state to another. During the transaction, the database must be in a consistent state. If a database is consistent before the execution of a transaction, it should be so after the completion of this process according to previously defined integrity constraints.

Isolation

Isolation deals with the concurrent execution of transactions. It ensures that the completion of any one transaction does not depend on others to avoid interference. Isolation levels such as Read Uncommitted, Read Committed, Repeatable, and Serializable dictate the separation between transactions to a certain degree in an attempt to strike that balance of performance versus consistency.

Durability

Firstly, durability ensures the permanence of committed transactions. Once a transaction is committed, it endures regardless of system failures or crashes. This is done through mechanisms such as transaction logs and recovery protocols, which allow the restoration of a database to a consistent state after failure.

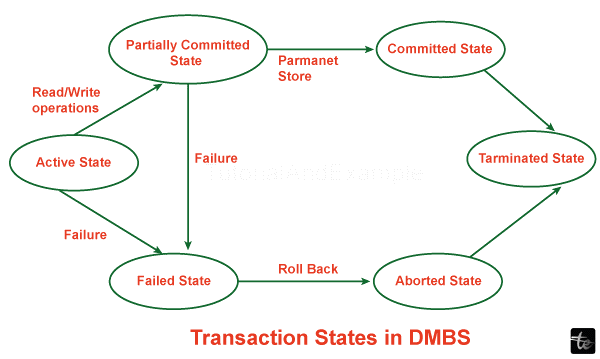

Transaction States

Transactions go through different states throughout their life cycle. These states are Active, in which a transaction is under execution, Partially Committed, when it has been completed but not fully committed, and Failed if an error arises, causing a rollback. Additionally, there is the committed or successful completion of a tradeshow.

Two-Phase Commit (2PC)

One way to coordinate distributed transactions is the Two-Phase Commit protocol (2PC). 2PC ensures consistency in distributed environments by guaranteeing that either all nodes commit to a transaction or roll it back.

Savepoints

Savepoints enable a transaction to be broken down into smaller, workable segments. If a mistake occurs, the transaction can be rolled back from its last savepoint not necessarily the initial level- thus offering more precise control.

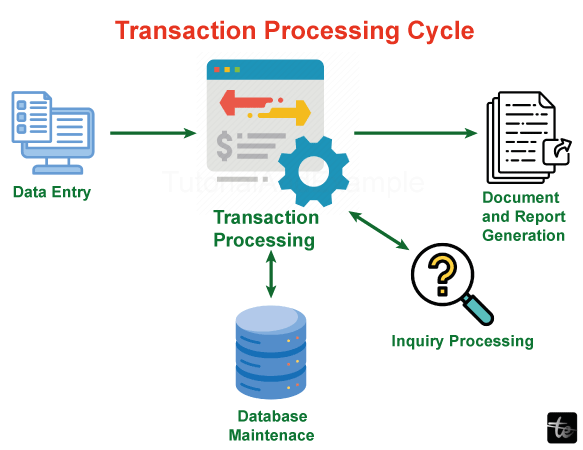

Transaction Management in DBMS

Database systems are also concerned with the management of transactions to ensure that operations can be processed reliably and consistently. It includes a group of principles and mechanisms through which data maintains integrity while creating a robust structure for database interactions.

Transaction Control Statements

These are BEGIN TRANSACTION, COMMIT, and ROLLBACK. The BEGIN TRANSACTION statement signifies the start of a transaction, while COMMIT completes the transaction if all operations are successful. On the contrary, ROLLBACK reverses the transaction if an error arises.

Concurrency Control

These mechanisms deal with concurrent execution of more than one transaction in order to maintain the integrity of data. Techniques such as locking, timestamp ordering, and optimistic concurrency control are methods that guarantee mutual independence of the transactions from one another, thus maintaining the integrity of the database.

Transaction Isolation Levels

Isolation levels determine the level of isolation between transactions. The DBMS provides various levels of isolation, including Read Uncommitted, Read Committed, RepeatableRead, and Serializable. These levels act to balance the tradeoff between transaction isolation and system performance.

Logging and Recovery

Transaction management is a vital aspect of logging. DBMS keeps logs for transactions where it records everything that was done during a transaction. These logs help recovery mechanisms restore the database to a consistent state in case of system failure, ensuring durability.

Savepoints and Nested Transactions

Savepoints allow transactions to be broken into smaller parts, thereby giving more specific control. A transaction can be rolled back from a specific state called savepoint in case an error occurs, then not to the start. Some DBMSs also allow nested transactions, which give the opportunity of a transaction within a transaction with its operations and rollbacks.

Distributed Transaction Management

Transactions become more complicated in distributed databases where data is partitioned across different places. 2PC is a protocol that guarantees the atomicity of distributed transactions. It ensures consistency within multiple nodes by coordinating transaction commits or rollbacks.

Deadlock Detection and Resolution

It should be noted that concurrent systems have built-in deadlocks where transactions cannot advance because of circular dependencies. DBMS utilizes deadlock detection and resolution algorithms that catch up on each other and break the stalemates so that transactions can proceed again.

Concurrency Control in DBMS

Database management systems (DBMS) implement concurrency control as an essential part of managing multiple transactions. It makes sure that running up transactions maintains the consistency and integrity of a database, avoiding conflicts per se and making data processing reliable.

Need for Concurrency Control

Handling numerous transactions simultaneously is critical for system performance and resource utilization. However, this creates the potential for conflicts, including lost updates, inconsistent reads, and data anomalies. Concurrency control mechanisms reduce these risks and help keep the database reliable.

Lock-Based Concurrency Control

Locking is a frequently implemented mechanism to control access data during transactions. It includes obtaining locks on data items to prevent other transactions from accessing the same items simultaneously. Some of the common types are shared locks for read operations and exclusive locks to write.

Two-Phase Locking (2PL)

Two-phase locking is one of the most popular concurrency control protocols. The protocol ensures that a transaction can’t release one lock before it acquires all the necessary locks, thus avoiding conflicts and holding on to consistency.

Optimistic Concurrency Control

Different from lock-based approaches, optimistic concurrency control assumes that conflicts are seldom. Transactions do not acquire locks, and only during the validation phase before committing, are conflicts detected. In case conflicts are identified, the system undoes the transactions and permits them to retry.

Multi-Version Concurrency Control (MVCC)

MVCC allows multiple transactions to observe different versions of data concurrently. Each deal operates on a database snapshot without the necessity for locks. This approach boosts concurrency by reducing contention for resources and increasing overall system performance.

Isolation Levels in Database Concurrency Control

The isolation levels in DBMS specify how well one transaction operation is isolated from the operations of other concurrent transactions. These levels ensure data consistency and integrity while allowing for concurrent execution of transactions. Different isolation levels provide different tradeoffs between data integrity and system performance.

Read Uncommitted

At the lowest isolation level, Read Uncommitted allows transactions to be able to read data changed by other yet incomplete transactions before they are committed. This level is also very risky in terms of dirty reads, non-repeatable reads, and phantom reads, so it’s good when data consistency is not really a major matter.

Read Committed

Read Committed makes it possible for a transaction to read only the data committed by other transactions. This level helps to avoid dirty reads but can still suffer from non-repeatable and phantom reads. It provides a good balance between data integrity and concurrency so that it can be used for most transactional applications.

Repeatable Read

Repeatable Read addresses the non-repeatable reads by ensuring a single transaction after reading an item of data four; no additional transactions can modify or delete that given read until before it is finished, but this could still experience phantom reads because other transactions are able to insert new records.

Serializable

Serializable is the isolation level that ensures complete isolation among transactions and it prevents dirty reads, non-repeatable reads, and phantom reads by locking the entire range of data accessed by a transaction until its completion while providing strong consistency for data. However, there are some drawbacks. If this high isolation strength is used, then contention increases, as sensitivity to long locks, leading to reduced productivity in concurrency levels.

Snapshot Isolation

Snapshot Isolation gives each transaction a snapshot of the database at its starting time. It results in very high concurrency when transactions read and write without blocking, even if there are conflicting requests. To lessen contention, however, may produce potential instances for violation screens caused by write-skew.

Distributed Transaction Processing in Database Management Systems

Distributed Transaction Processing (DTP) is a critical aspect of modern database management systems (DBMS) designed to handle transactions that span multiple interconnected databases or data stores.

Key Concepts of Distributed Transaction Processing

- Transaction Coordination: In a distributed environment, transactions can involve multiple databases, each having its transaction manager. Transaction coordination means that all participating databases will agree on the result of a transaction, whether it is committed or aborted.

- Two-Phase Commit (2PC): 2PC is a very popular protocol in DTP. It involves two phases: a ready phase, where all parties indicate readiness to commit, and a committed stage that involves the actual commitment or rollback in place. This guarantees atomicity for distributed components.

- Distributed Transaction Manager (DTM): DTM is one of the central parts responsible for coordinating and managing transactions across distributed resources. It manages the running of the 2PC protocol and maintains consistency in the distributed system.

- Global Transaction Identifier (GTID): Distributed transactions get assigned a unique identifier, GTID – this helps in tracking and coordinating the progress of the transaction across multiple nodes.

Challenges in Distributed Transaction Processing

- Consistency and Isolation: Problems with maintaining consistency and isolation in a distributed environment arise due to the possibility of conflicting updates across many databases. Ensuring that transactions meet ACID properties becomes more challenging.

- Network Failures: Network failures can cause a lack of communication between nodes in distributed systems. Managing transactions under network partitions requires strong protocols and robust error-handling mechanisms.

- Concurrency Control: One of the largest issues is coordinating parallel transactions across distributed nodes without sacrificing performance. Effective concurrency control mechanisms should be in place to avoid conflicts and ensure there are no issues with correctness.

- Scalability: Scalability is an important aspect as the number of distributed nodes increases. One persistent challenge is to ensure that the DTP system will efficiently manage a growing number of transactions while maintaining its performance level.

Database Transactions vs. Real-World Analogy

Conceptualizing Database Transactions

- Atomicity: In databases, atomicity makes sure a transaction is considered an isolated individual yet unsplittable unit. Similarly, imagine a financial transaction in which money moves between accounts. Atomicity ensures that either the whole transfer occurs, but it does not middle state.

- Consistency: This database consistency means that a system would move from one valid state to another after a transaction. When thinking about a librarian updating book inventory. The consistency requirement involves that the inventory count accurately accounts for new entries or cancellations of books.

- Isolation: Isolation in databases keeps transactions from affecting one another. This is akin to when a chef prepares several dishes at once in the kitchen. Each of such dishes (transactions) takes its course, one after another, without being influenced by the others.

- Durability: Durability means that when a transaction is committed, the effects of it prevail despite failures. If, say, you save a document – it is supposed to be permanent like any other database transaction.

Real-World Analogy: A Banking Transaction Scenario

- Atomicity: Suppose you transfer funds from one account to another in a banking operation. Atomicity provides that if any part of the transaction fails (for instance, a lack of funds), then the whole operation is rolled back, leaving both accounts unchanged.

- Consistency: Imagine refreshing personal details at a government office. The consistency requirement means that after the update, information is accurate and consistent with determined standards.

- Isolation: Imagine several people drawing their money out of ATMs at the same time. Isolation ensures that each person’s seclusion (transaction) can occur on its own without the knowledge of or interference from others.

- Durability: Durability in the realm of purchase means that once you settle payment, it is securely stored and marked whereby after any system failures they do not affect your ownership of the good.

Conclusion

In conclusion, the process of transaction processing in DBMS is an indispensable element for reliable and effective data management. It provides the integrity, consistency, and reliability of database transactions, which is crucial to many industries. As technology develops, the implementation of cutting-edge innovations such as in-memory databases and blockchain combined with serverless architectures is predicted to improve transactional efficiency. The future landscape will be a dynamic one marked by trends like continuous intelligence and quantum database processing. Organizations must emphasize efficient transaction management in order to contend with the intricacies of current data ecosystems.