Machine Learning Gesture Recognition

Strong attempts have recently been made to create user-computer interfaces that are intelligent, natural and based on human gestures.

Both human and computers may use gestures as an interactive design. Therefore, such gesture-based interfaces have the potential to not only replace existing interface devices but also to increase their usefulness.

What are Gestures?

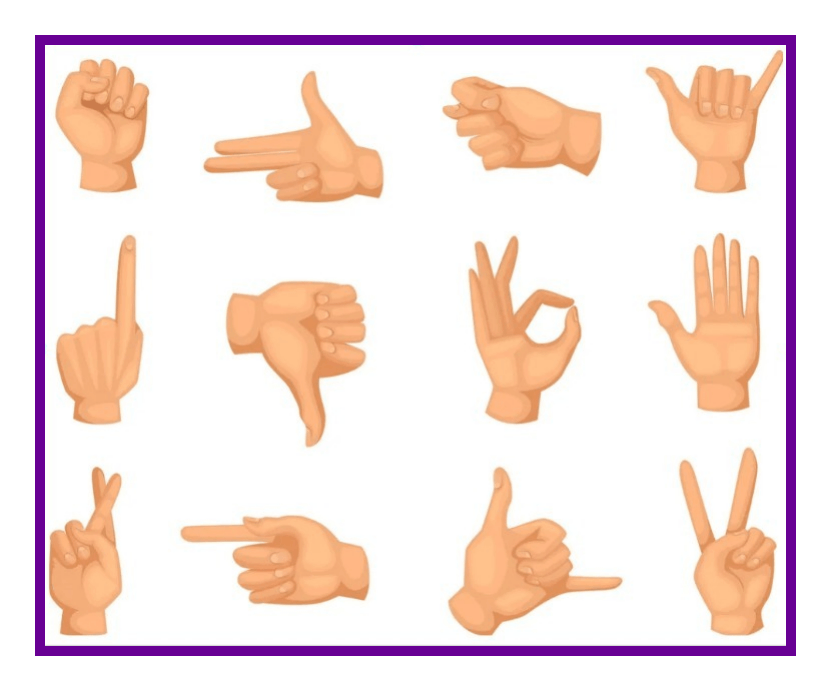

Gestures can come from any body movement or mood, although they usually start with the hand or the face. With the use of gesture recognition, a computer may be able to comprehend human body language. The demand for text interfaces and GUIs (Graphical User Interface) has decreased as a result. An action that must be observed by another person and must communicate some sort of message is known as a gesture.

A gesture is typically thought of as a movement of a body component, particularly a hand or the head, to convey a thought or message. In the modern world, gesture recognition technology is considerably more recent.

Gesture Recognition

There is a lot of ongoing research in this area right now, but there aren't many publicly accessible implementations. For the purpose of perceiving gestures and commanding robots, many methods have been devised. A common approach for identifying hand motions is the glove-based technique. It makes use of a glove-mounted sensor that records hand motions precisely.

Given that they are the most fundamental and expressive means of human communication, gestures are helpful in computer engagement. Gaming gesture interfaces based on hand- and body-gesture technologies must be planned to be both popular and profitable. Each gesture-recognition algorithm depends on the user's cultural background, the application area, and the surroundings, hence one approach for automatic hand gesture identification is not appropriate for all applications.

Structure of Gesture Recognition Technology

First, we need to classify the gesture into two types:

- Offline Gestures: When the user interacts with the item, processed motions. The motion used to activate a menu is one illustration.

- Online Gestures: Direct manipulation of hand movements. They may rotate or scale a physical item.

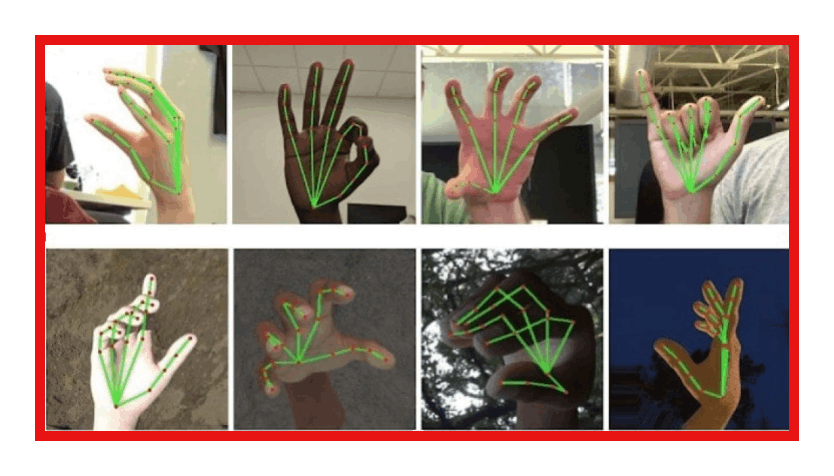

The ability to track a person’s movements and determine what gestures they may be performing can be achieved through various tools. Although there is a large amount of research done in image/video-based gesture recognition, there is some variation within the tools and environments used between implementations. Some of the tools may include wired gloves, Depth-aware cameras, Stereo cameras, Gesture-based controllers, and also Radar. These controllers act as an extension of the body so that when gestures are performed, some of their motion can be conveniently captured by the software. An example of emerging gesture-based motion capture is skeletal hand tracking, which is being developed for virtual reality and augmented reality applications.

The method for understanding a gesture might be done in several ways depending on the type of input data. The majority of the methods, however, rely on crucial pointers that are represented in a 3D coordinate system. Depending on the quality of the input and the algorithm's methodology, the gesture can be accurately recognized based on the relative motion of these.

Body motions must be categorized based on common characteristics and the message they may be conveyed to be understood. For instance, a word or phrase is represented by each gesture in sign language. In "Toward a Vision-Based Hand Gesture Interface," Quek suggested a taxonomy that appears to be particularly suitable for Human-Computer Interaction. He offers three interactive gesture systems—Manipulative, Semaphoric, and Conversational—to capture the full range of motions. A 3D model-based and an appearance-based method for gesture detection are distinguished in certain studies. The most common approach uses 3D data of major body parts' components to determine various crucial factors, such as joint angles or palm position. On the other hand, appearance-based systems interpret directly from photos or videos, which usually include:

- Gesture or Data Acquisition Stage Image Collection: During this step of data collection, hand, body, and facial motions are noted and categorized.

- Stage of gesture image processing: The primary gesture properties are recorded in this stage using methods including edge detection, filtering, and normalization. It incorporates the input gesture into the gesture recognition model.

- Stage of Image Tracking: When the sensors record the direction and location of the object making the gestures, picture tracking occurs after gesture image pre-processing. This can be done with the use of one or more trackers, such as magnetic, optical, acoustic, inertial, or mechanical trackers.

- Stage of the recognition: The recognition stage, which is sometimes seen as the last step in gesture control in VR systems, occurs last but certainly not least. The command or the gesture's meaning is stated after a successful feature extraction that occurs after image tracking and stores the gesture's recognized features in a system utilizing complicated neural networks or decision trees. Since the gesture has been detected, the classifier can associate every input from a test movement with the appropriate gesture class.

Gesture Recognition Applications

- Conversation with computer: Imagine a scenario where someone creating a presentation could add a quotation or change a picture with a flick of the wrist as opposed to a mouse click. Shortly, we will be able to interact in virtual reality just as effortlessly as we can in the real world, using our hands to do tiny, complex actions like picking up a tool, pressing a button, or squeezing a soft item in front of us. The technology of this nature is still being developed. However, the computer scientists and engineers working on these projects assert that they are close to making hand and gesture detection systems used for widespread use, much the way many people already use speech recognition to dictate texts or computer vision to identify faces in photographs.

- Medical Operation: Gestures can be used to manage resource allocation in healthcare facilities, communicate with medical equipment, operate visualization displays, and support disabled individuals throughout their rehabilitation. Some of these ideas have been applied to better medical practices and systems, such as a "come as you are" technology that lets surgeons operate a laparoscope by making the necessary facial motions without the use of vocal commands or hand or foot switches. Simply hand gestures into doctor-computer interfaces, detailing a computer-vision system that enables surgeons to do common mouse actions using hand gestures that meet the "intuitiveness" condition, such as pointer movement and button pressing.

- Control for games with gestures: Due to the enjoyable nature of the interaction, computer games are a particularly technologically viable and financially profitable field for novel interfaces. Users are excited to experiment with new interface paradigms since they are probably involved in a difficult gaming experience. Control is given through the user's fingertips on a multi-touch device. The location of the touch and the number of fingers utilized are more crucial than whatever finger makes contact with the screen. The "fast-response" criterion applies to computer vision-based, hand-gesture-controlled games where the system must react swiftly to user motions. In contrast to applications (like inspection systems) without a real-time requirement, where recognition performance is given top attention, games require computer-vision algorithms to be reliable and effective. Therefore, tracking and gesture/posture identification with high-frame-rate image processing should be the main areas of research.

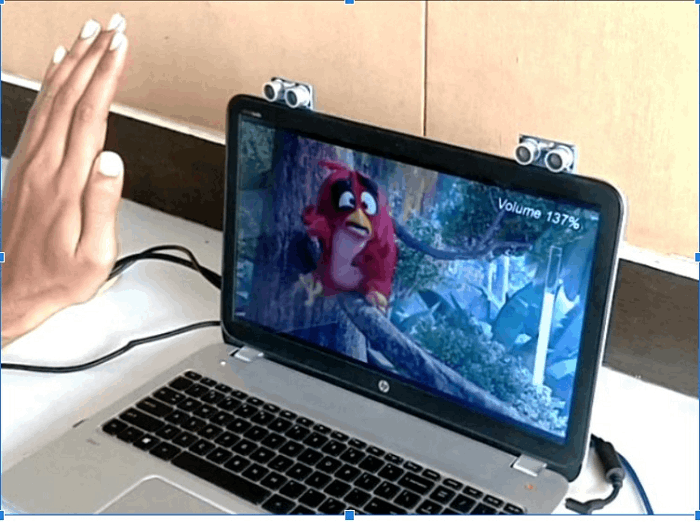

- Hand gestures are used to operate home electronics like the TV and MP3 player: Electronic device control through hand gestures is becoming more and more popular today. The hand gesture detection algorithm and the associated user interface are the main concerns of most electronic gadgets. A hand gesture-based remote can replace all other household remote controls and carry out all of their duties. Appliances like the TV, CD player, air conditioner, DVD player, and music systems are typically controlled via remote controls in houses. Using a remote control to turn on and off lights, open doors, etc. One Universal Remote may be used to operate all of these devices. Although the technology (infrared transmission and ON/OFF modulation in the range of 32–36 kHz) is synchronized for all remote controls, there is no established standard for the coding structure for data transfer. By adhering to a predetermined code, communication between the remote and the appliances is established.

- Gesture control car Driving: It's as easy as it sounds, you use your hands to operate the car's controls instead of turning your head to look at the road. Even yet, this achievement is undoubtedly made possible by several clever technologies that operate in the background. Modern eye-tracking cameras measure where the driver's eyes are focused in one of them, while 3D hand gesture recognition sensors track hand movement in the other. So, without taking their eyes off the road, a motorist might glance to select the radio with their eyes and then change the station with a hand motion. The hand motions utilized to operate the system are ones we naturally do every day, such as raising or lowering the hand, pointing, swiping left or right, turning clockwise or counterclockwise, and pinching or spreading. This would make it possible for you to carry out tasks like scrolling through a phone contact list, altering the Sat-location, Nav's going back to an earlier tune or raising the temperature in the car.

- Communication: Computer-generated settings that imitate a scenario or event, either inspired by reality or constructed out of imagination, are known as virtual reality and immersive reality systems. Due to their ability to stimulate the user's physical presence through movement and user interaction, these reality systems are frequently referred to as hybrid realities. The senses of sight, hearing, touch, and even smell may be included in this. Only certain gadgets or VR head-mounted displays, which frequently call for the usage of pointing devices, allow a user to interact with a VR world. However, with virtual reality, controlling tools that may be used covertly are highly desirable, such as voice commands, lip reading, facial expression interpretation, and hand gesture recognition.

Gesture Recognition Using Machine Learning

Here, we'll build a machine learning system that can identify several hand gestures in photos, including the fist, palm, thumb-showing, and others.

Dataset

This project makes use of the Hand Gesture Recognition Database from Kaggle (reference provided below). It includes 20,000 pictures of various hands and hand movements. The dataset contains a total of 10 hand motions made by 10 distinct individuals. There are 5 male and 5 female participants. The Leap Motion hand-tracking gadget was used to take pictures.

link:https://www.kaggle.com/datasets/gti-upm/leapgestrecog

| Hand Gesture | Label used |

| Thumb down | 0 |

| Palm (Horizontal) | 1 |

| L | 2 |

| Fist (Horizontal) | 3 |

| Fist (Vertical) | 4 |

| Thumbs up | 5 |

| Index | 6 |

| OK | 7 |

| Palm (Vertical) | 8 |

| C | 9 |

Note: This project was developed using the Google Colab environment.

Gathering and Exploring Data

#Importing the file and module that are required for the project

%matplotlib inline

from google.colab import files

import os

import tensorflow as tf

from tensorflow import keras

import numpy as np

import matplotlib.pyplot as plt

import cv2

import pandas as pd

from sklearn.model_selection import train_test_split # Helps with organizing data for training

from sklearn.metrics import confusion_matrix # Helps present results as a confusion-matrix

# you have to unzip the images

!unzip leapGestRecog.zip

# path for all the images

imagepaths = []

#traversing all the files and other sub-directories , so that we can save the path to images that are there.

for root, dirs, files in os.walk(".", topdown=False):

for name in files:

path = os.path.join(root, name)

if path.endswith("png"):

imagepaths.append(path)

print(len(imagepaths))

Output:

20000

#Creating functions so that we can use them for debugging.

#It plots the image also.

def plot_image(path):

img = cv2.imread(path)

img_cvt = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

print(img_cvt.shape)

plt.grid(False)

plt.imshow(img_cvt)

plt.xlabel("Width")

plt.ylabel("Height")

plt.title("Image " + path)

# Plotting the first image from our image paths array

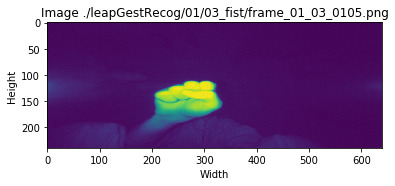

plot_image(imagepaths[0])

Output:

(240, 640)

After loading the images and making sure everything is as planned, we must now prepare the images for the algorithm training. Both the labels and the photos must be loaded into separate arrays, X and y, respectively.

X = [] # Image data

y = [] # Labels for it

# looping and loading the images from the path

for path in imagepaths:

img = cv2.imread(path) #reading image and assigning it as np.array

img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY) #Converting it into the correct color scheme

img = cv2.resize(img, (320, 120)) # Compressing or reducing the size to save time

X.append(img)

# Label processing

category = path.split("/")[3]

label = int(category.split("_")[0][1]) # We need to convert 10_down to 00_down, or else it crashes

y.append(label)

# Turn X and y into np.array

X = np.array(X, dtype="uint8")

X = X.reshape(len(imagepaths), 120, 320, 1) # Needed to reshape so CNN knows it's different images

y = np.array(y)

print("Images loaded: ", len(X))

print("Labels loaded: ", len(y))

print(y[0], imagepaths[0]) # Debugging the data

Output:

Images loaded: 20000

Labels loaded: 20000

3 ./leapGestRecog/01/03_fist/frame_01_03_0105.png

We can divide our data into a training set and a test set using Scipy's train test split function.

ts = 0.3 # percentage of the photos that we intend to evaluate. Training is done with the rest.

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=ts, random_state=42)

Creation of the Model

# Import of keras model and hidden layers for our convolutional network

from keras.models import Sequential

from keras.layers.convolutional import Conv2D, MaxPooling2D

from keras.layers import Dense, Flatten

# Using TensorFlow backend.

#model's construction

model = Sequential()

model.add(Conv2D(32, (5, 5), activation='relu', input_shape=(120, 320, 1)))

model.add(MaxPooling2D((2, 2)))

model.add(Conv2D(64, (3, 3), activation='relu'))

model.add(MaxPooling2D((2, 2)))

model.add(Conv2D(64, (3, 3), activation='relu'))

model.add(MaxPooling2D((2, 2)))

model.add(Flatten())

model.add(Dense(128, activation='relu'))

model.add(Dense(10, activation='softmax'))

#Configuration of the model for training

model.compile(optimizer='adam', # Optimization routine

loss='sparse_categorical_crossentropy', # Loss function

metrics=['accuracy']) # List of metrics that the model will consider while being trained and tested.

# validates the model after training it for a specified number of epochs (dataset iterations).

model.fit(X_train, y_train, epochs=5, batch_size=64, verbose=2, validation_data=(X_test, y_test))

Output:

Train on 14000 samples, validate on 6000 samples

Epoch 1/5

- 305s - loss: 0.5644 - acc: 0.9133 - val_loss: 0.0096 - val_acc: 0.9968

Epoch 2/5

- 302s - loss: 0.0169 - acc: 0.9961 - val_loss: 0.0145 - val_acc: 0.9958

Epoch 3/5

- 301s - loss: 0.0045 - acc: 0.9987 - val_loss: 0.0015 - val_acc: 0.9992

Epoch 4/5

- 301s - loss: 6.5339e-05 - acc: 1.0000 - val_loss: 4.1817e-04 - val_acc: 0.9998

Epoch 5/5

- 305s - loss: 1.6688e-05 - acc: 1.0000 - val_loss: 3.7710e-04 - val_acc: 0.9998

<keras.callbacks.History at 0x7f8c5fb09c18>

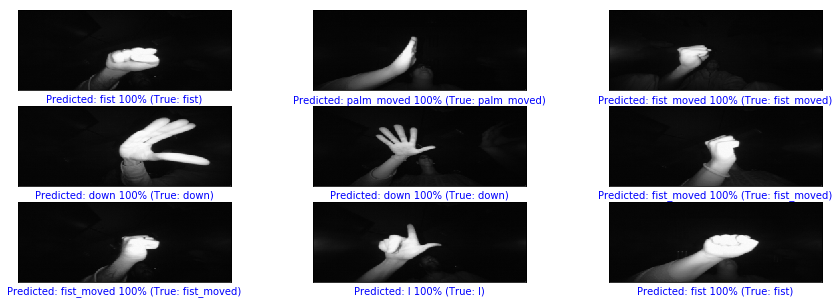

Testing The Model

We need to determine whether the model is robust now that it has been assembled and trained. We run the model first, to determine the correctness and review it. Then, in order to verify everything, we generate predictions and plot the photos while using both the expected and actual labels. This allows us to see how our algorithm is performing.

Later, we create a confusion matrix—a particular type of table arrangement that makes it possible to see how an algorithm performs.

test_loss, test_acc = model.evaluate(X_test, y_test)

print('Test accuracy: {:2.2f}%'.format(test_acc*100))

Output:

6000/6000 [==============================] - 39s 6ms/step

Test accuracy: 99.98%

predictions = model.predict(X_test) # Testing the model over the test set

np.argmax(predictions[0]), y_test[0]

Output:

(8, 8)

# Function for plotting labels and photos for validation.

def validate_9_images(predictions_array, true_label_array, img_array):

# array for attractive printing, followed by figure size

class_names = ["down", "palm", "l", "fist", "fist_moved", "thumb", "index", "ok", "palm_moved", "c"]

plt.figure(figsize=(15,5))

for i in range(1, 10):

# assigning variables

prediction = predictions_array[i]

true_label = true_label_array[i]

img = img_array[i]

img = cv2.cvtColor(img, cv2.COLOR_GRAY2RGB)

# Plot in a structred way

plt.subplot(3,3,i)

plt.grid(False)

plt.xticks([])

plt.yticks([])

plt.imshow(img, cmap=plt.cm.binary)

predicted_label = np.argmax(prediction) # to obtain the predicted label's index, use prediction

#Based on whether the forecast was accurate, change the title's colour.

if predicted_label == true_label:

color = 'blue'

else:

color = 'red'

plt.xlabel("Predicted: {} {:2.0f}% (True: {})".format(class_names[predicted_label],

100*np.max(prediction),

class_names[true_label]),

color=color)

plt.show()

validate_9_images(predictions, y_test, X_test)

Output:

# H for Horizontal

# V for Vertical

pd.DataFrame(confusion_matrix(y_test, y_pred),

columns=["Predicted Thumb Down", "Predicted Palm (H)", "Predicted L", "Predicted Fist (H)", "Predicted Fist (V)", "Predicted Thumbs up", "Predicted Index", "Predicted OK", "Predicted Palm (V)", "Predicted C"],

index=["Actual Thumb Down", "Actual Palm (H)", "Actual L", "Actual Fist (H)", "Actual Fist (V)", "Actual Thumbs up", "Actual Index", "Actual OK", "Actual Palm (V)", "Actual C"]

Output:

| Predicted Thumb Down | Predicted Palm (H) | Predicted L | Predicted Fist (H) | Predicted Fist (V) | Predicted Thumbs up | Predicted Index | Predicted OK | Predicted Palm (V) | Predicted C | |

| Actual Thumb Down | 604 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Actual Palm (H) | 0 | 617 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |

| Actual L | 0 | 0 | 621 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Actual Fist (H) | 0 | 0 | 0 | 605 | 0 | 0 | 0 | 0 | 0 | 0 |

| Actual Fist (V) | 0 | 0 | 0 | 0 | 596 | 0 | 0 | 0 | 0 | 0 |

| Actual Thumbs up | 0 | 0 | 0 | 0 | 0 | 600 | 0 | 0 | 0 | 0 |

| Actual Index | 0 | 0 | 0 | 0 | 0 | 0 | 568 | 0 | 0 | 0 |

| Actual OK | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 586 | 0 | 0 |

| Actual Palm (V) | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 591 | 0 |

| Actual C | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 611 |

Conclusion

We may draw the conclusion that our algorithm correctly categorises various hand gestures photos with enough confidence (>95%) based on the model from the findings reported in the previous section.

Several features of our challenge have a direct impact on how accurate our model is. The visuals are crisp and background-free, and the motions displayed are rather distinct. Additionally, there are a sufficient number of photos, which strengthens our model. The disadvantage is that we would likely require additional data for various challenges in order to properly influence the parameters of our model. A deep learning model is also particularly challenging to comprehend because of its abstractions.