Train and Test datasets in Machine Learning

In machine learning, it is standard practice to split the available data into two sets, known as the training set and the test set. The split is made mainly to examine the trained model's performance and generalization ability.

What is a Train Dataset?

The training set is the set used to train the model. During training, the model uses the input features and their accompanying labels (in supervised learning) or the patterns in the data (in unsupervised learning) to adjust its internal parameters and improve its performance.

A trained model's performance is determined by using the test set, which is a separate portion of the dataset. Although it is labeled data, the model hasn't come across this data during the training phase. It has the same format as the training set and comes from the same source, but it contains different examples.

The training dataset's goal is to help the machine learning algorithm discover trends and connections between the input data and the desired result.

The training dataset has to be an accurate representation of the data the model will use in the actual world. Every variable and degree of complexity that the model is likely to experience in the real world must be represented by a wide range of instances.

The size of the training dataset is an important consideration in machine learning. A larger training dataset often improves how well the machine learning model works. Trade-offs must also be considered, such as the computational resources required, while a training dataset that is too small increases the risk of overfitting the model to it.

What is a Test Dataset?

The test set is the set used to assess the model's performance after training. The model has never come across this data during training. One can assess how well the model performs on brand-new, unseen data by analyzing its predictions on the test set.

The test set's goal is to judge how well the model generalizes and performs with new data. By evaluating the model on the test set, we can determine its accuracy, precision, recall, F1 score, and any other pertinent metrics of its predictive ability.
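As a minimal sketch of how these metrics relate to one another, the following computes them for a binary classification task from a list of true test labels and a list of predictions. The function name `classification_metrics` and the sample labels are hypothetical and chosen only for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    tn = len(pairs) - tp - fp - fn                                    # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical test-set labels and model predictions, for illustration only.
y_test = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_test, y_pred)
print(metrics)
```

In this made-up example there are three true positives, three true negatives, one false positive, and one false negative, so all four metrics come out to 0.75.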

The size of the dataset as a whole and the complexity of the model both have an impact on the size of the test dataset. It is common practice to use 80% of the data for training and 20% for testing. The percentage may change, though, based on the particular problem and the data at hand.
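An 80/20 split can be sketched with the Python standard library alone. The helper name `split_train_test` and its parameters are assumptions made for this example; libraries such as scikit-learn provide a ready-made `train_test_split` function for the same purpose.

```python
import random


def split_train_test(samples, test_fraction=0.2, seed=0):
    """Shuffle a copy of `samples` and return (train, test) lists."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(samples)               # leave the caller's data untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


data = list(range(100))  # stand-in for 100 examples
train, test = split_train_test(data, test_fraction=0.2)
print(len(train), len(test))  # 80 20
```

Shuffling before cutting matters when the data is stored in some order (for example, sorted by class), because otherwise the test set would not resemble the training set.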

It's vital to remember that the test dataset should only be used for assessment and not for model selection or model optimization. Any adjustments to the model should be based on its performance on the training data or on a separate validation set, never on the test set.

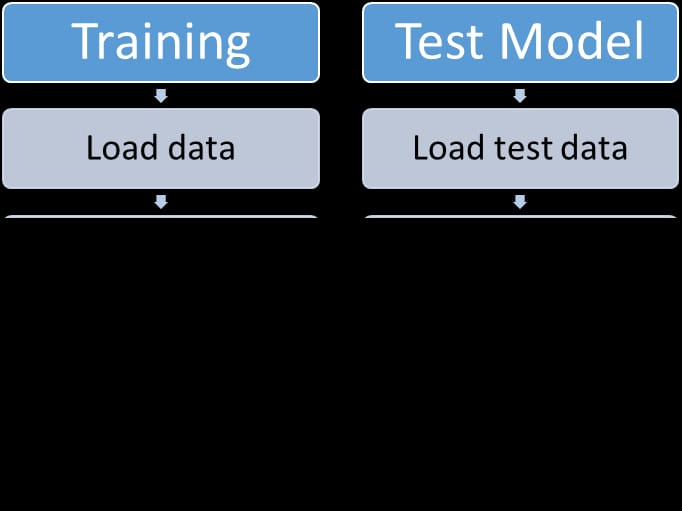

Steps for splitting of Datasets

The process of splitting data into a training set and a test set involves a few steps, which are listed below:

1. Preparation of data:

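Here the data is cleaned and preprocessed, for example by handling missing values, standardizing the features, and performing any other preprocessing steps that are required. Statistics used in these steps, such as the mean and standard deviation used for standardization, are best computed from the training portion only so that no information from the test set leaks into training.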

2. Data splitting:

The data is split into two sets: the training set and the test set. The split is usually random, and for classification problems it can be made on the basis of the class labels so that each set keeps the class proportions of the original dataset.

3. Training:

During this step, the training set is used to train the machine learning model. In supervised learning, we give the model the input features and the labels, and it discovers the trends and connections in the data.

4. Evaluation:

Once the model is trained, we move to the next step, which is to evaluate its performance using the test set. The input features from the test set are passed through the model to make predictions, and these predictions are compared with the original labels.
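The four steps above can be sketched end to end as follows. Everything in this example is an assumption made for illustration: the toy one-feature dataset, the 20% test share, and the nearest-centroid classifier, which simply stands in for whatever model is actually being trained.

```python
import random
import statistics

# 1. Preparation: a hypothetical toy dataset of (feature, label) pairs.
#    Rows with a missing feature (None) are dropped as a simple cleaning step.
raw = [(1.0 + 0.1 * i, 0) for i in range(20)]
raw += [(5.0 + 0.1 * i, 1) for i in range(20)]
raw.append((None, 1))
clean = [(x, y) for x, y in raw if x is not None]

# 2. Data splitting: shuffle, then hold out 20% of the rows as the test set.
rng = random.Random(42)
rng.shuffle(clean)
n_test = len(clean) // 5
test_rows, train_rows = clean[:n_test], clean[n_test:]

# Standardize using statistics computed from the training set only.
train_x = [x for x, _ in train_rows]
mean = statistics.mean(train_x)
std = statistics.pstdev(train_x)


def scale(x):
    return (x - mean) / std


# 3. Training: store the mean scaled feature (centroid) of each class.
centroids = {}
for label in {y for _, y in train_rows}:
    values = [scale(x) for x, y in train_rows if y == label]
    centroids[label] = statistics.mean(values)


def predict(x):
    """Return the label whose centroid is closest to the scaled feature."""
    z = scale(x)
    return min(centroids, key=lambda label: abs(z - centroids[label]))


# 4. Evaluation: compare predictions with the held-out test labels.
correct = sum(1 for x, y in test_rows if predict(x) == y)
test_accuracy = correct / len(test_rows)
print(f"test accuracy: {test_accuracy:.2f}")
```

The two classes in this toy dataset are deliberately well separated, so the model classifies every test row correctly; real data is rarely this clean.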

The Need for Datasets

There are various reasons why we need separate training and test datasets:

1. Model evaluation: the main purpose of the test set is to assess the performance of the trained model. Evaluating on held-out data shows how well the model generalizes and how reliable its predictions on new data are likely to be.

2. Overfitting detection: overfitting occurs when the model learns the noise and incidental details of the training data instead of the underlying patterns and their connections. If performance on the test set is noticeably worse than on the training set, this shows that the model has overfit the training data and does not generalize well.

3. Hyperparameter tuning: Machine learning models include hyperparameters that need to be adjusted to improve performance. Hyperparameters are settings chosen by the user prior to training rather than learned from the data. When dividing the data, one can put aside a separate validation set that is used to adjust the hyperparameters. The validation set helps in evaluating various hyperparameter setups and in choosing the one which produces the best results. Cross-validation is a closely related technique that repeats this procedure over several different splits of the data.

4. Unbiased performance estimate: You can get an unbiased assessment of the model's performance by using a separate test set that it has never seen before. Compared to evaluating the model on the training set or applying other ad hoc techniques, this estimate is more accurate. It offers a more reliable prediction of the model's performance on brand-new, unseen data.
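The points above about overfitting, hyperparameters, and unbiased estimates can be illustrated with a three-way split. This is only a sketch under stated assumptions: the noisy one-feature dataset, the 60/20/20 proportions, the candidate values of `k`, and the small k-nearest-neighbours classifier are all hypothetical choices made for the example.

```python
import random
from collections import Counter

rng = random.Random(0)

# Hypothetical dataset: the label is 1 when x > 5, and roughly 10% of the
# labels are flipped to simulate noise.
xs = [i * 0.05 for i in range(200)]
rng.shuffle(xs)
rows = []
for x in xs:
    y = int(x > 5)
    if rng.random() < 0.1:
        y = 1 - y
    rows.append((x, y))

# 60% train, 20% validation, 20% test (the rows are already shuffled).
train, val, test = rows[:120], rows[120:160], rows[160:]


def knn_predict(train_rows, x, k):
    """Majority vote among the k training points closest to x."""
    nearest = sorted(train_rows, key=lambda row: abs(row[0] - x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def accuracy(train_rows, eval_rows, k):
    hits = sum(1 for x, y in eval_rows if knn_predict(train_rows, x, k) == y)
    return hits / len(eval_rows)


# Overfitting check: with k=1 the model memorizes the training set, so its
# training accuracy is perfect even though some labels are pure noise.
train_acc_k1 = accuracy(train, train, 1)
val_acc_k1 = accuracy(train, val, 1)

# k is a hyperparameter: it is picked using the validation set only.
candidates = [1, 3, 5, 9, 15]
val_scores = {k: accuracy(train, val, k) for k in candidates}
best_k = max(val_scores, key=val_scores.get)

# The test set is used once, after the choice has been made.
test_accuracy = accuracy(train, test, best_k)
print("k=1 train vs validation accuracy:", train_acc_k1, val_acc_k1)
print("chosen k:", best_k, "test accuracy:", test_accuracy)
```

Because `best_k` is selected to maximize the validation score, that score is an optimistic estimate; the accuracy on the untouched test set is the figure to report.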

Difference between Train Set and Test Set

The differences between the two datasets are:

1. Data Usage:

The train set is used to train the model. The model learns from the data by iteratively adjusting its internal parameters. The test set, in contrast, is used to evaluate the trained model. Through this evaluation one can understand how well the model generalizes.

2. Data exposure:

The model is exposed to the training set during training, which means that the training process uses this data to set the model's internal parameters. The test set, in contrast, remains unseen until the evaluation stage.

3. Label Availability:

Both sets contain labeled data, which allows the model's performance to be evaluated. However, the test set labels are never used during training; they are only compared with the model's predictions during evaluation, guaranteeing that the model bases its predictions only on the patterns it has learned from the training data.

4. Evaluation Metrics:

Different assessment metrics are used for the training and test sets. During training, the effectiveness of the model is frequently tracked using measures like the loss function, accuracy, or validation metrics. To gauge how well the model works on unseen data in the test set, evaluation measures like accuracy, precision, recall, F1 score, and others are used.

Conclusion

It is essential to divide the dataset into a train set and a test set in order to accurately judge how well the model generalizes to new data. Evaluating on the held-out test set shows how the model is likely to perform when it makes predictions on new, unseen data. This procedure also aids in the discovery of overfitting, which occurs when a model performs well on the training set but much worse on the test set. The main contrast between training data and testing data is that the former is a subset of the original data that is used to train the machine learning model, whilst the latter is used to judge the accuracy of the model.