Unsupervised Machine Learning Algorithms

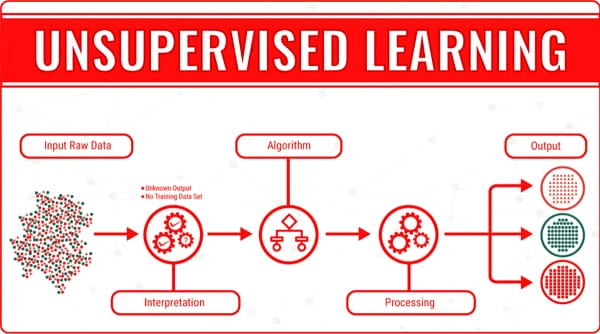

"Unsupervised machine learning" is a type of machine learning which scans dataset for relationships, structures, and patterns without the use of labels or expected outcomes. In other words, it is discovering underlying patterns or meaningfully arranging data without the use of knowledge or direction.

Unsupervised learning methods use unlabeled data, in contrast to supervised learning, which gives the algorithm examples that have been labeled for it to learn from. Based on essential similarities or differences between data points, these algorithms examine the data and find patterns or groups. An effective collection of techniques are available for examining and collecting useful information from unlabeled data through the use of unsupervised machine learning.

Numerous tasks, including customer segmentation, text and picture analysis, recommender systems, recognition of anomalies, and more, maybe accomplished via unsupervised learning. By assessing and studying the underlying structure of data, unsupervised learning enables us to gain knowledge, make predictions, or improve decision-making in a range of disciplines. Some of the common techniques used in unsupervised learning are:

i) Clustering: Based on their traits or characteristics, clustering algorithms group comparable data points together. The main objective is to increase the similarities between clusters and also the dissimilarity between them.

ii) Reducing the variable numbers or features in a dataset while maintaining its useful structure is referred to as "dimensionality reduction." This can help in eliminating noise or pointless elements from high-dimensional data visualization.

iii) Anomaly detection methods find data items that significantly depart from expected behavior or typical patterns. These methods are employed to find odd patterns, outliers, or illegal activity in a dataset.

Advantages of Unsupervised Machine Learning

Some of the advantages of unsupervised learning are:

1. Unsupervised learning algorithms are capable of finding hidden links, structures, and patterns in different types of datasets. These algorithms can uncover significant facts that might not be obvious through manual inspection by studying the data without using specified labels or outcomes. This may result in fresh insights and a better comprehension of the underlying facts.

2. No Labeling Necessary: Unsupervised learning functions with unlabeled data, compared to supervised learning, which requires labeled data for training. This removes the requirement for human labeling, which in certain cases may be time-consuming, costly, or even impractical. Unsupervised learning enables us to make use of huge amounts of potentially freely available unlabeled data.

3. Unsupervised learning techniques are adaptable to a range of data kinds and domains, which makes them flexible. They are helpful for a number of real-world problems since they can deal with a variety of data kinds, such as numerical, category, or text data. Before using supervised learning techniques, unsupervised learning may be used as a stage of preprocessing to extract important characteristics or decrease dimensionality.

4. Scalability: Algorithms for unsupervised learning frequently adapt well to big datasets. The computational complexity is often lower than supervised learning approaches since they do not rely on labeled instances. As a result, unsupervised learning can effectively manage big data scenarios, providing analysis and insights from massive volumes of data.

5. Anomaly Detection: For anomaly detection, unsupervised learning approaches are very helpful. These algorithms are able to identify odd or unusual data points that differ from the expected patterns by learning the typical patterns or behavior of a dataset. This is helpful in applications like fraud detection, network intrusion detection, quality control in manufacturing, and others where spotting abnormalities is essential.

6. Unsupervised learning offers a way to examine and comprehend the structure of a dataset. Data Exploration and Preprocessing. It helps in data preparation activities including dimensionality reduction, feature extraction, and data cleansing. Unsupervised learning algorithms can disclose critical characteristics or connections that might direct further analysis or decision-making.

Disadvantages of Unsupervised Machine Learning

Some of the disadvantages are:

1. Lack of Objective Evaluation: Because unsupervised learning works with unlabeled data, it might be difficult to assess the algorithm's performance in an impartial manner. It becomes challenging to evaluate the correctness or efficacy of the algorithm's output in the absence of predetermined outcomes or labels. Unsupervised learning evaluation measures are frequently random or domain-specific, making it challenging to quantify the algorithm's performance.

2. Interpretability and Comprehensibility: Unsupervised learning algorithms may provide outcomes that are challenging to understand or interpret. Although they can spot trends or clusters in the data, the underlying causes or justifications for those trends might not be immediately clear. The understanding acquired by unsupervised learning may be less helpful as a result of this lack of interpretability, and it may be necessary to do more research or consult experts in the relevant fields to understand the findings.

3. Dependency on Initial Parameters: Setting initial assumptions or parameters is frequently required for unsupervised learning algorithms. These factors may have a big influence on the final results. It can be difficult to choose the right beginning settings, and one might need to experiment or have domain knowledge. In addition, different beginning parameter sets can produce various results, making the procedure a bit random and perhaps affecting the outcomes.

4. Unsupervised learning methods are sometimes vulnerable to errors or noisy data points. Outliers can skew previously identified patterns or clusters, producing less precise findings. For outliers to have the least negative influence on the performance of the algorithm, it is critical to efficiently preprocess the data and handle them.

5. Results subjectivity: Since unsupervised learning relies on unsupervised data exploration and analysis, results bias may be introduced. Unsupervised algorithms can identify clusters or patterns, but the meaning of these patterns might be subjective because different researchers may see or interpret the data differently. Due to this subjectivity, different interpretations of the same dataset's findings may result.

Types of Unsupervised Machine Learning Algorithms

There are two methods that allow for pattern recognition, data exploration, and meaningful representation of unlabeled data and such are named as clustering and dimensionality reductions, and these provide the basis for unsupervised learning.

There are two different categories of machine learning algorithms:

Clustering algorithms: which are based on their similarities in nature or patterns in the data, clustering algorithms that try to group comparable data points together. While maximizing the dissimilarity between clusters, the objective is to maximize the similarity between clusters. The clusters that are present in the data are automatically recognized by clustering algorithms without the need for labels or previous experience.

Numerous techniques for clustering exist, including:

i) Using the mean distance between data points and cluster centroids, K-means clustering divides the data into K groups.

ii) By repeatedly merging or dividing clusters based on similarity measurements, hierarchical clustering builds a hierarchy of clusters.

iii) Density-Based Spatial Clustering of Applications with Noise, or DBSCAN It locates clusters where dense regions are divided by poor regions based on density connectivity.

iv) Customer segmentation, picture recognition, detection of anomalies, and recommendation systems all frequently use clustering.

Dimensionality Reduction Algorithms:

This algorithm’s approaches for reducing the number of variables or features in a dataset while maintaining its useful structure are known as "dimensionality reduction" approaches. As a result, the data representation is made simpler, unnecessary or duplicate characteristics are removed, and the problem of dimensionality is addressed. Dimensionality reduction can boost the effectiveness of following learning algorithms, make visualization easier, and increase processing efficiency.

Techniques for dimensionality reduction that are frequently used include:

i) By identifying orthogonal directions (principal components) that represent the majority of the variation in the data, principal component analysis (PCA) reduces the dimensions of the data.

ii) The main use of t-SNE (t-Distributed Stochastic Neighbor Embedding) is to visualize high-dimensional data in a lower-dimensional space while maintaining the local organization and its neighborhood links.

Autoencoders are neural network designs that may be trained to learn how to rebuild input data from a compact representation, therefore lowering dimensionality.

In disciplines like extraction of features, data visualization, and picture and text analysis, dimension reduction proves to be advantageous.

Conclusion

Numerous tasks, including customer segmentation, text and picture analysis, recommender systems, anomaly detection, and more, maybe accomplished through unsupervised learning. Unsupervised learning helps us to obtain insights, make predictions, or enhance decision-making across a variety of fields by analyzing and understanding the underlying structure of data. Unsupervised learning techniques like clustering and dimensionality reduction are extremely helpful for tasks like data exploration, pattern recognition, customer segmentation, and getting ready for supervised learning.

Overall, by generating insightful conclusions and knowledge discovery from unlabeled data, unsupervised learning enhances supervised learning. Unsupervised learning assists in an improved understanding of complicated datasets and supports data-driven decision-making and problem-solving in a variety of sectors by utilizing the data's inner structure and patterns.