Cache Line and Cache Size in Operating System

A cache line is the fundamental unit in which data moves between main memory and the cache, and it plays a crucial role in the performance of computer systems. Cache memory uses cache lines to store frequently accessed information, which improves performance by reducing access time.

Every cache line is mapped to its associated core line. Cache lines matter because they hold the data stored in the cache, and serving data from the cache instead of main memory can also help reduce the system's power consumption.

Cache memory is a high-speed memory used to improve system performance. It is costly, and it stores data and instructions so that the CPU can access them quickly. When the CPU needs data or instructions, it first checks the cache memory. If they are present there (a cache hit), the CPU accesses them quickly without going to main memory, which minimizes the time needed to fetch the data. If they are not present (a cache miss), they are fetched from main memory, which takes more time.
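The lookup path described above can be sketched as a toy model. This is only an illustration under simplifying assumptions: the class name `TinyCache`, the dictionary standing in for main memory, and the sample addresses are all hypothetical, and the model ignores cache lines, capacity limits, and timing.

```python
class TinyCache:
    """Toy model of the lookup path: check the cache first, then main memory."""

    def __init__(self, main_memory):
        self.main_memory = main_memory  # dict: address -> value (stand-in for RAM)
        self.lines = {}                 # cached copies: address -> value
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.lines:       # cache hit: no main-memory access needed
            self.hits += 1
            return self.lines[address]
        self.misses += 1                # cache miss: slower fetch from main memory
        value = self.main_memory[address]
        self.lines[address] = value     # keep a copy for future accesses
        return value


memory = {0x1000: 42, 0x1004: 7}        # hypothetical memory contents
cache = TinyCache(memory)
first = cache.read(0x1000)              # miss: fetched from main memory
second = cache.read(0x1000)             # hit: served from the cache
print(first, second, cache.hits, cache.misses)
```

The first read of an address counts as a miss and the repeated read as a hit, which is the behaviour the paragraph describes.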

Characteristics of Cache Lines

1- Size: Cache lines have a fixed size within a given cache, but that size depends on the processor architecture; 64 and 128 bytes are common.

2- Cache Tags: A tag is an identifier stored with each cache line that records which main-memory location the line holds. The tag is used to determine whether the cache line contains the data or instruction that the processor is looking for.

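3- Associativity: Caches are organized as direct-mapped, set-associative, or fully associative. The associativity determines how many cache lines a given memory block is allowed to occupy: exactly one in a direct-mapped cache, any line within one set in a set-associative cache, and any line at all in a fully associative cache.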

4- Coherence: Coherence is a critical property for cache lines: all processors must have a consistent view of memory. Coherence protocols such as MESI and MOESI ensure that every processor sees the correct data.

5- Placement: Cache line placement can be controlled to some extent through memory alignment techniques, which ensure that data items are stored at addresses that are multiples of the cache line size.
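To make the tag and placement characteristics more concrete, here is a minimal sketch of how an address might be split into a tag, a set index, and a byte offset, and how an address can be rounded up to a cache line boundary. The 64-byte line size and the 64 sets are assumed values chosen for illustration, and the function names are hypothetical; real caches vary.

```python
LINE_SIZE = 64   # bytes per cache line (assumed; must be a power of two)
NUM_SETS = 64    # number of sets in the cache (assumed; must be a power of two)

OFFSET_BITS = LINE_SIZE.bit_length() - 1   # log2(LINE_SIZE)
INDEX_BITS = NUM_SETS.bit_length() - 1     # log2(NUM_SETS)


def split_address(address):
    """Split an address into (tag, set index, byte offset within the line)."""
    offset = address & (LINE_SIZE - 1)
    index = (address >> OFFSET_BITS) & (NUM_SETS - 1)
    tag = address >> (OFFSET_BITS + INDEX_BITS)
    return tag, index, offset


def align_up(address, alignment=LINE_SIZE):
    """Round an address up to the next multiple of the alignment."""
    return (address + alignment - 1) & ~(alignment - 1)


def same_line(a, b):
    """Return True if two addresses fall within the same cache line."""
    return a // LINE_SIZE == b // LINE_SIZE


print(split_address(0x1234))         # (1, 8, 52)
print(hex(align_up(0x1234)))         # 0x1240
print(same_line(0x1000, 0x103F))     # True: both in the line starting at 0x1000
print(same_line(0x1000, 0x1040))     # False: 0x1040 starts the next line
```

On a lookup, the set index selects where to look and the stored tag is compared with the address's tag to decide whether the line holds the requested data.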

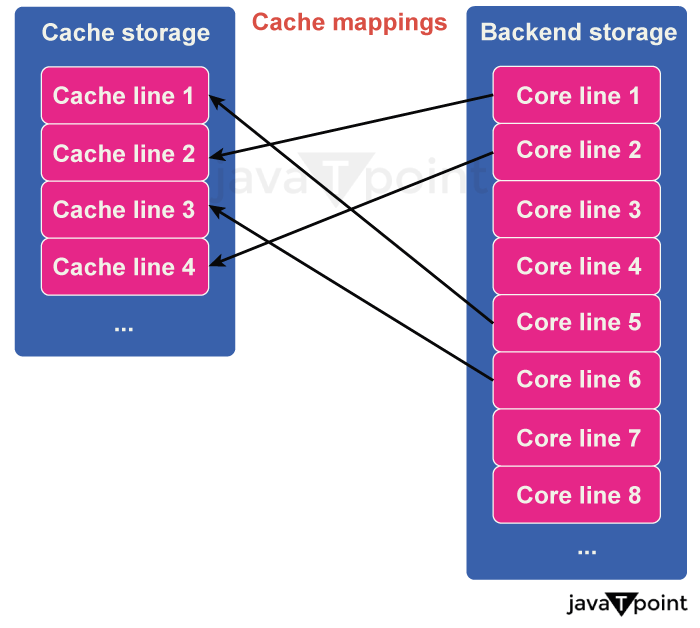

The diagram shows the relationship between a cache line and a core line.

Every cache line is mapped to its associated core line, which is the corresponding region of backend storage. Both the cache storage and the backend storage are divided into blocks of the size of a cache line, and all cache mappings are aligned to these blocks. Each cache line holds the core ID, the core line number, and valid bits for every sector.
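The mapping described above could be modelled roughly as follows. This is a hypothetical sketch, not the layout of any particular caching system: the 4096-byte cache line, the 512-byte sector, and the names `CacheLineMetadata`, `core_line_of`, and `mark_valid` are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

CACHE_LINE_SIZE = 4096                      # bytes per cache line (assumed)
SECTOR_SIZE = 512                           # bytes per sector (assumed)
SECTORS_PER_LINE = CACHE_LINE_SIZE // SECTOR_SIZE


@dataclass
class CacheLineMetadata:
    core_id: int                            # which backend (core) device the line maps to
    core_line_number: int                   # index of the block on that device
    valid: list = field(default_factory=lambda: [False] * SECTORS_PER_LINE)


def core_line_of(byte_offset):
    """Backend storage is split into line-sized blocks; return the block index."""
    return byte_offset // CACHE_LINE_SIZE


def mark_valid(meta, byte_offset, length):
    """Set the valid bit of every sector of this line touched by the byte range."""
    start = byte_offset % CACHE_LINE_SIZE
    first = start // SECTOR_SIZE
    last = min((start + length - 1) // SECTOR_SIZE, SECTORS_PER_LINE - 1)
    for sector in range(first, last + 1):
        meta.valid[sector] = True


meta = CacheLineMetadata(core_id=0, core_line_number=core_line_of(10_000))
mark_valid(meta, 10_000, 1024)
print(meta.core_line_number)   # 2
print(meta.valid)              # sectors 3, 4 and 5 are marked valid
```

Because every mapping is aligned to line-sized blocks, an integer division is enough to find the core line for any byte offset.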

Cache Line Bouncing:

Cache line bouncing occurs when multiple processors repeatedly access the same cache line and at least one of them writes to it, so the line keeps moving between their caches. This can cause performance degradation and synchronization issues.

In such cases, it is essential to implement proper synchronization mechanisms to ensure that only one processor accesses the shared data at a time. Cache line bouncing is handled by coherence protocols such as MESI (Modified-Exclusive-Shared-Invalid) or MOESI (Modified-Owned-Exclusive-Shared-Invalid). These protocols ensure that all processors see a consistent view of memory.

Cache line bouncing characteristics:

1- Synchronization issues

2- Coherence

3- Increased Bus traffic

4- Performance degradation

5- Cache Invalidation

Example:

Consider two processors, P1 and P2, each with its own cache, that both access the same memory location, say address 0x1000.

Initially, both processors' caches contain the cache line for address 0x1000. First, P1 reads the memory location and then updates its copy of the cache line. This causes the copy in P2's cache to be invalidated. When P2 then wants to read address 0x1000, its copy has just been invalidated, so P2 must retrieve the updated cache line from main memory (or, depending on the protocol, directly from P1's cache). Once P2 has fetched the line, it holds its own copy again, separate from the copy in P1's cache.

If P1 wants to update the memory location again, it must first regain exclusive ownership of the cache line, and fetch the latest copy if P2 has modified the line in the meantime. This causes P2's cache line to be invalidated again, and then the process repeats.

This constant invalidation and re-fetching of cache lines is known as cache line bouncing, and it causes poor performance and increased bus traffic. Coherence protocols such as MESI and MOESI keep the cache lines in sync between processors while this happens; reducing the bouncing itself means limiting how often different processors write to the same cache line.
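The P1/P2 example can be replayed with a small simulation. The sketch below is a simplified, hypothetical model of MESI state changes for a single cache line; `LineTracker` and its counters are illustrative names, and real hardware protocols involve more detail (bus transactions, write-backs, timing) than is shown here.

```python
class LineTracker:
    """Tracks the MESI state of one cache line in each processor's cache."""

    def __init__(self, processors):
        self.state = {p: "I" for p in processors}   # every copy starts Invalid
        self.invalidations = 0                      # copies invalidated by writes
        self.transfers = 0                          # times the line was fetched

    def read(self, p):
        if self.state[p] != "I":
            return                                  # hit: local copy is usable
        self.transfers += 1                         # miss: the line must be fetched
        holders = [q for q in self.state if q != p and self.state[q] != "I"]
        for q in holders:
            self.state[q] = "S"                     # M/E holders drop to Shared
        self.state[p] = "S" if holders else "E"

    def write(self, p):
        if self.state[p] == "I":
            self.transfers += 1                     # must fetch before writing
        for q in self.state:
            if q != p and self.state[q] != "I":
                self.state[q] = "I"                 # invalidate all other copies
                self.invalidations += 1
        self.state[p] = "M"


line = LineTracker(["P1", "P2"])
line.read("P1")                # both caches start out holding the line
line.read("P2")
for _ in range(3):             # P1 updates, P2 re-reads, repeated three times
    line.write("P1")
    line.read("P2")

print(line.invalidations, line.transfers)   # 3 5
```

Each round costs one invalidation and one extra fetch, so the traffic grows with the number of rounds even though only a single memory location is involved.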

Cache Size

Cache size refers to the amount of memory allocated to a cache. The cache is a fast memory, usually on the processor's chip, that stores frequently accessed data and instructions. Its size is usually measured in kilobytes or megabytes.

Cache size has a crucial impact on system performance. A larger cache typically has a higher hit rate and fewer cache misses, which can significantly improve the system's performance.
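The effect of cache size on hit rate can be illustrated with a short simulation. This is a rough sketch under stated assumptions: the cache is fully associative with least-recently-used replacement, capacity is counted in lines, and the access trace (a loop over 8 distinct lines, repeated 10 times) is synthetic. The function name `hit_rate` is hypothetical.

```python
from collections import OrderedDict


def hit_rate(accesses, capacity_lines):
    """Fraction of accesses that hit in an LRU cache holding capacity_lines lines."""
    cache = OrderedDict()                 # keys ordered from least to most recently used
    hits = 0
    for line in accesses:
        if line in cache:
            hits += 1
            cache.move_to_end(line)       # mark as most recently used
        else:
            if len(cache) >= capacity_lines:
                cache.popitem(last=False) # evict the least recently used line
            cache[line] = True
    return hits / len(accesses)


trace = list(range(8)) * 10               # synthetic trace: 8 lines, looped 10 times

small = hit_rate(trace, 4)                # cache smaller than the working set
fitting = hit_rate(trace, 8)              # cache exactly fits the working set
larger = hit_rate(trace, 16)              # extra capacity beyond the working set
print(small, fitting, larger)
```

In this trace the 4-line cache never hits, because each line is evicted before it is reused, while the 8-line cache misses only on the first pass. Doubling the size again brings no further gain, which suggests that extra capacity helps only until the cache covers the data the program actually reuses.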

Features of Cache Size

Cache size is an essential factor in determining the performance of a computer system.

1- Limited size: Cache size is limited by the physical space available on the processor chip. As the size of a cache increases, it takes up more space on the chip.

2- Measurement: Cache size is typically measured in kilobytes (KB) or megabytes (MB).

3- Replacement Policy: Because the cache is limited in size, a replacement policy decides which line to evict when the cache is full. Common policies include least recently used (LRU) and random replacement.

4- Performance: Cache size significantly affects system performance. A larger cache can reduce the number of cache misses and increase the cache hit rate, which makes the system faster.

5- Levels: The cache is divided into several levels (such as L1, L2, and L3), each with a different size and access time.
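To see how levels with different access times combine, the following sketch estimates the average time per memory access for a multi-level cache. The latencies and hit rates are made-up illustrative numbers, not measurements of any real processor, and `average_access_time` is a hypothetical helper.

```python
def average_access_time(levels, memory_latency):
    """Estimate average access time in cycles.

    levels: list of (latency_cycles, hit_rate) tuples ordered from L1 outward,
            where hit_rate is the fraction of accesses reaching that level
            that hit there.
    """
    total = 0.0
    reach = 1.0                        # fraction of accesses that reach this level
    for latency, rate in levels:
        total += reach * latency       # every access reaching a level pays its latency
        reach *= (1.0 - rate)          # only the misses continue to the next level
    return total + reach * memory_latency


# Hypothetical hierarchy: (latency in cycles, hit rate) for L1, L2, L3
hierarchy = [(4, 0.90), (12, 0.80), (40, 0.50)]
with_caches = average_access_time(hierarchy, memory_latency=200)
without_caches = average_access_time([], memory_latency=200)
print(with_caches, without_caches)     # roughly 8 cycles versus 200 cycles
```

With these assumed numbers only 1% of accesses reach main memory, so the average cost stays close to the latency of the small, fast first level.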