Congestion Control Techniques

What is Network Congestion?

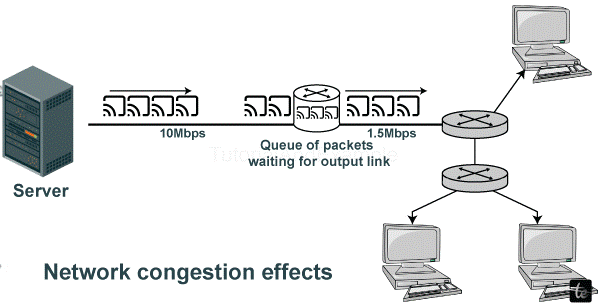

Network congestion is one of the most common problems affecting data communications and networks. It occurs when demand for network capacity exceeds what is available, so queues build up and the rate at which data is transmitted drops. In other words, network congestion is the digital equivalent of a traffic jam.

Factors That Contribute to Network Congestion

- Overutilization: Persistent heavy usage of network resources leads to congestion. It can occur in shared networks, on servers, and even across the Internet as a whole.

- Data Spikes: Events such as flash sales, software updates, and viral content can cause a sudden surge in traffic and, with it, congestion.

- Misconfiguration: Congestion can also result from poorly configured network equipment and applications that fail to use the available capacity effectively.

The Importance of Congestion Control

Effective congestion control is of paramount importance for several reasons:

- Performance Optimization: Congestion degrades network performance, causing slow data transmission, high packet loss rates, and long delays. Keeping these under control is vital for optimizing performance and providing a smooth user experience.

- Resource Allocation: Congestion control ensures fair distribution of network resources and discourages unnecessary traffic.

- Stability and Reliability: Network stability and reliability also depend on congestion control mechanisms. If left unchecked, congestion can destabilize the network, raising the risk of failures and downtime.

- Fairness: Congestion control promotes fair sharing of network resources among users. It prevents any single user or application from monopolizing capacity at the expense of others.

- Economic Impact: Congestion can lead to economic losses. Delayed services and slow responses cost businesses money, and congestion control methods help reduce these losses.

Understanding Network Congestion

Congestion is widespread in data transmission and networking. It hinders the flow of information and has a range of unwelcome consequences. This section looks at how network congestion arises and how it affects network performance.

Causes and Origins of Congestion

- Overwhelming Data Demand: One of the leading causes of congestion is high demand for data. In today’s digital world, the Internet of Things (IoT), online streaming services, and cloud computing have greatly increased the need for fast data transfer. This demand can overburden network resources and congest them.

- Network Oversubscription: Network providers commonly oversell capacity on the assumption that not all users will hit their full allocation at the same time. Congestion results when many users do demand their full allocated capacity simultaneously.

- Misconfigured Equipment: Misconfigured network equipment, such as routers or switches, wastes resources. Improper routing and QoS settings can make congestion worse.

- Sudden Traffic Spikes: Events such as viral content, software upgrades, or flash sales can trigger sudden spikes. An unprepared network infrastructure may be unable to handle such a large volume of data in a short period.

- Network Bottlenecks: Bottlenecks are another cause of congestion. They may result from outdated hardware, the network topology, or incorrect routing.

Effects of Congestion on Network Performance

- Reduced Throughput: Congestion reduces the amount of data the network can deliver per unit of time. As throughput falls, transfers slow down and latency rises.

- Packet Loss: Congestion is a leading cause of packet loss. Lost packets must be retransmitted, which adds more traffic and can make the congestion worse.

- Increased Latency: Congestion delays data transfer. High latency particularly affects real-time applications such as video conferencing and online gaming.

- Degraded Quality of Service: Quality of service suffers for applications with low tolerance for inconsistent network performance. Examples include poor voice quality in VoIP calls and buffering during streaming.

- Resource Starvation: Some network users or applications may be starved of resources due to congestion. This leads to unequal resource distribution, which can affect user experience.

- Risk of Network Failure: Sustained congestion can eventually exhaust a company’s network hardware or cause it to fail, resulting in service outages.

Congestion Control Fundamentals

Anyone involved in network management should understand the basics of how congestion is handled. This section covers two key concepts: the difference between flow control and congestion control, and the role of congestion windows and throttling mechanisms.

Flow Control vs. Congestion Control

Two related but separate processes govern data flow through a network: flow control and congestion control. Understanding the difference between them is essential.

Flow Control

Flow control manages the data exchange between a sending node and a receiving node. It prevents the sender from flooding the receiver with data, which would overflow the receiver’s buffer and cause data loss or inefficiency.

- Local Operation: Flow control operates at the level of an individual connection, dealing with the flow of information between two communicating devices.

- End-to-End: It covers the complete path of a message from sender to receiver.

- Dynamic Adaptation: Flow control adapts the transmission rate to the receiver’s capacity so that data is neither lost nor unnecessarily delayed.

Congestion Control

Congestion control, by contrast, deals with traffic across the network as a whole. It regulates how much data senders inject so that the network is not overloaded, resources are shared evenly, and performance stays good.

- Global Operation: Congestion control operates on a global basis, covering the whole network and its many parts.

- Network-Wide Impact: It regulates traffic as data moves between multiple devices and network segments, so that no segment becomes jammed.

- Adaptive Behavior: It senses changes in network state and traffic patterns and adapts to keep the system operating near its optimum.
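In TCP, the two mechanisms meet in a single limit: the sender keeps no more unacknowledged data in flight than the smaller of the receiver-advertised window (flow control) and the congestion window (congestion control). The following is a minimal sketch of that rule; the function and parameter names are hypothetical and chosen only for illustration.

```python
def sendable_bytes(cwnd: int, rwnd: int, bytes_in_flight: int) -> int:
    """Return how many more bytes the sender may transmit right now.

    cwnd: congestion window, the sender's estimate of what the network can carry
    rwnd: receive window advertised by the receiver (its free buffer space)
    bytes_in_flight: data already sent but not yet acknowledged
    """
    # The tighter of the two limits decides how much may be outstanding.
    effective_window = min(cwnd, rwnd)
    return max(0, effective_window - bytes_in_flight)
```

When the receiver is the bottleneck, `rwnd` is the binding limit; when the network is the bottleneck, `cwnd` is.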

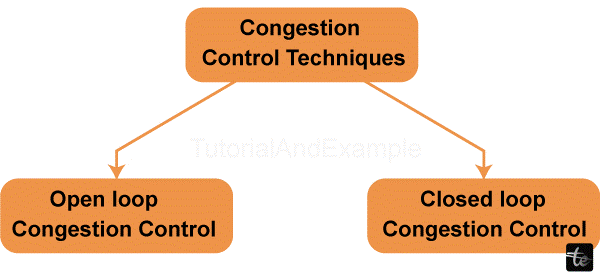

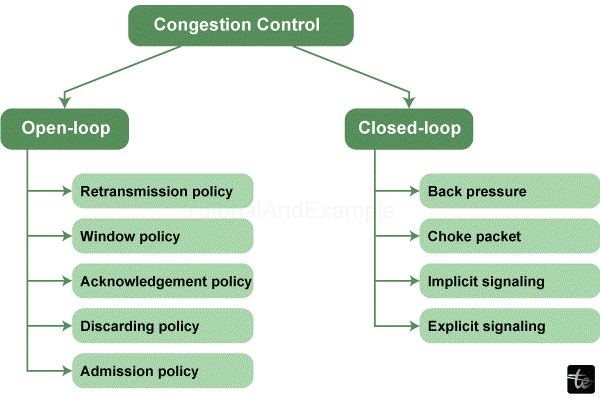

Congestion Control Techniques

Efficient management of network congestion is essential for smooth network operation. Congestion control techniques are critical for keeping the network from clogging, ensuring reliable information transfer, and maintaining quality of service. In this section, we will explore two open-loop control strategies used to address congestion: Bandwidth Reservations and Traffic Shaping.

Open-Loop Control Strategies

Open-loop control is preventive: it acts before congestion occurs rather than reacting to it. It relies on careful planning and on allocating resources in advance so that they are used effectively.

Bandwidth Reservations

Bandwidth reservation means allocating a specific portion of network bandwidth to a given application or service. It is well suited to mission-critical applications that need a guaranteed minimum bandwidth, such as real-time video conferencing or VoIP calls.

Working

The network administrator assigns part of the available bandwidth to an individual application. The reservation lets the application run undisturbed during periods of heavy network traffic.
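The bookkeeping behind a reservation can be sketched as simple admission control: a request is accepted only if enough unreserved capacity remains on the link. The class below is a hypothetical illustration, not the interface of any real router or reservation protocol.

```python
class LinkReservations:
    """Tracks per-application bandwidth reservations on one link (illustrative only)."""

    def __init__(self, capacity_mbps):
        self.capacity_mbps = capacity_mbps
        self.reserved = {}  # application name -> reserved Mbps

    def available(self):
        """Capacity not yet promised to any application."""
        return self.capacity_mbps - sum(self.reserved.values())

    def reserve(self, app, mbps):
        """Admit the reservation only if unreserved capacity can cover it."""
        # An application replacing its own reservation gets its old share back first.
        headroom = self.available() + self.reserved.get(app, 0)
        if mbps <= 0 or mbps > headroom:
            return False
        self.reserved[app] = mbps
        return True

    def release(self, app):
        """Give an application's reserved share back to the pool."""
        self.reserved.pop(app, None)
```

Rejecting a request outright, rather than admitting it and letting every application degrade, is what makes the guarantee to already-admitted applications hold.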

Benefits

- Guaranteed Performance: Bandwidth reservation ensures that the performance of critical applications does not drop below a defined threshold.

- Prioritization: Critical services take precedence in resource allocation whenever they need it.

Traffic Shaping

Traffic shaping smooths out the bursts of data that cause traffic spikes, spreading transmission more evenly over time. It controls congestion on the affected links by limiting transmission rates to levels that do not exceed network capacity.

Working

Traffic shaping buffers outgoing data and adjusts the output rate to match the network’s capacity. This smooths out the sharp peaks in traffic flow that may cause congestion.
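One common way to realize this buffering is a leaky-bucket shaper, which the text does not name but which matches the description: arrivals go into a bounded buffer and leave at a fixed maximum rate. The sketch below simulates it over discrete time ticks; the function name, the tick model, and the parameters are assumptions made for illustration.

```python
def shape(arrivals, rate, buffer_size):
    """Simulate a leaky-bucket shaper over discrete time ticks.

    arrivals: number of packets arriving in each tick (bursty input)
    rate: maximum packets released per tick (must be positive)
    buffer_size: maximum packets the shaper can hold
    Returns (packets sent in each tick, total packets dropped).
    """
    if rate <= 0:
        raise ValueError("rate must be positive")
    queued = 0
    dropped = 0
    sent = []
    tick = 0
    # Keep ticking after the input ends until the buffer has drained.
    while tick < len(arrivals) or queued > 0:
        incoming = arrivals[tick] if tick < len(arrivals) else 0
        accepted = min(incoming, buffer_size - queued)
        dropped += incoming - accepted  # buffer overflow
        queued += accepted
        released = min(rate, queued)    # never exceed the configured rate
        queued -= released
        sent.append(released)
        tick += 1
    return sent, dropped
```

For example, `shape([10, 0, 0, 0], rate=3, buffer_size=20)` returns `([3, 3, 3, 1], 0)`: a burst of ten packets leaves the shaper as a steady trickle of at most three per tick.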

Benefits

- Efficient Use of Resources: Shaping makes better use of limited network capacity and reduces the chance of congestion.

- Fairness: No single user or application can consume all the network resources, since they are shared among the users.

Closed-Loop Control Strategies

Closed-loop strategies are a key element of congestion control: they use feedback to adapt dynamically to changes in the network as they happen. In this section, we delve into two prominent closed-loop control strategies: TCP congestion control and Active Queue Management (AQM).

TCP Congestion Control

Transmission Control Protocol (TCP) is one of the most widely used transport layer protocols. TCP congestion control is a classic example of a closed-loop feedback mechanism: it manages data transmission based on the current state of congestion in the network.

Working

TCP uses several techniques, including the sliding window and the congestion window. When TCP detects congestion, it dynamically lowers its sending rate to avoid further packet loss and to relieve the network.
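The way the congestion window reacts can be sketched with a simplified, Reno-style model: exponential growth in slow start, one extra segment per round trip in congestion avoidance, and a halving of the window when loss is detected. This is an illustrative approximation under stated assumptions (window counted in whole segments, one update per round trip, loss rounds supplied by the caller), not a faithful TCP implementation.

```python
def simulate_cwnd(rounds, loss_rounds, initial_ssthresh=16):
    """Return the congestion window (in segments) at the start of each round trip.

    rounds: number of round trips to simulate
    loss_rounds: set of round indices in which a loss is detected
    initial_ssthresh: slow-start threshold before any loss has occurred
    """
    cwnd = 1
    ssthresh = initial_ssthresh
    history = []
    for rtt in range(rounds):
        history.append(cwnd)
        if rtt in loss_rounds:
            # Multiplicative decrease: halve the window on a loss signal.
            ssthresh = max(cwnd // 2, 2)
            cwnd = ssthresh
        elif cwnd < ssthresh:
            # Slow start: double each round trip, capped at ssthresh.
            cwnd = min(cwnd * 2, ssthresh)
        else:
            # Congestion avoidance: additive increase of one segment per round trip.
            cwnd += 1
    return history
```

With `simulate_cwnd(10, {6}, initial_ssthresh=8)` the window follows `[1, 2, 4, 8, 9, 10, 11, 5, 6, 7]`, the familiar sawtooth: fast growth, gentle probing, then a sharp cut after the loss in round 6.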

Benefits

- Adaptability: Because it adjusts to current conditions, TCP is able to function across a wide range of network conditions.

- Fairness: Network resources are shared among many users and applications.

AQM (Active Queue Management)

Active Queue Management (AQM) refers to closed-loop traffic control mechanisms implemented in routers. It supports congestion control by continuously monitoring queue lengths and keeping them short, so that traffic does not build up significantly within the network.

Working

When a router detects that traffic is building up toward congestion, it drops or marks packets. Doing this helps maintain short queues, thereby reducing delays and packet loss.
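A well-known AQM algorithm that follows this pattern is Random Early Detection (RED), which the text does not name explicitly. The sketch below shows only its core idea: the probability of dropping or marking a packet rises linearly as the averaged queue length moves between two thresholds. The parameter names and default weight are illustrative, and the refinements of the full algorithm are omitted.

```python
def update_average(avg_queue, current_queue, weight=0.002):
    """Exponentially weighted moving average of the queue length."""
    return (1 - weight) * avg_queue + weight * current_queue


def red_drop_probability(avg_queue, min_th, max_th, max_p):
    """Probability of dropping or marking an arriving packet (simplified RED).

    avg_queue: averaged queue length
    min_th: below this average, nothing is dropped
    max_th: at or above this average, everything is dropped
    max_p: drop probability reached just below max_th
    """
    if avg_queue < min_th:
        return 0.0
    if avg_queue >= max_th:
        return 1.0
    # Linear ramp between the two thresholds.
    return max_p * (avg_queue - min_th) / (max_th - min_th)
```

Averaging the queue length, rather than using the instantaneous value, lets short bursts pass while sustained buildup triggers early signals to senders.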

Benefits

- Reduced Packet Loss: AQM signals senders to reduce their transmission rates before queues fill up, so they avoid the heavy losses of an overflowing queue.

- Low Latency: Keeping queues short reduces queuing delay, a major component of network latency.

Load Balancing Techniques

Load balancing is an important aspect of managing networks and servers. It distributes the traffic load evenly and prevents particular servers or network segments from becoming saturated. In this section, we explore two essential load-balancing techniques: Round Robin and Weighted Fair Queuing (WFQ).

Round Robin

Round Robin is one of the simplest and most popular load-balancing techniques. It distributes incoming network traffic across the servers in a server farm in rotation. Spreading requests equally across all servers prevents situations where some servers are overwhelmed while others sit underused.

Working

Each incoming request is handed to the next server in a fixed order. Once the last server has received a request, the rotation starts again from the first, so the work is distributed equally.
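The rotation can be sketched in a few lines. The function name and the request and server labels are hypothetical; a real load balancer would also track server health, which this sketch ignores.

```python
from itertools import cycle


def assign_round_robin(requests, servers):
    """Pair each request with the next server in a repeating rotation."""
    if not servers:
        raise ValueError("at least one server is required")
    rotation = cycle(servers)  # endlessly repeats the server list in order
    return [(request, next(rotation)) for request in requests]
```

For four requests and three servers `a`, `b`, `c`, the fourth request wraps around and goes back to `a`.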

Benefits

- Simplicity: Round-robin load balancing is easy to deploy and administer.

- Fairness: It also ensures even distribution of resources, minimizes server overload, and improves efficiency.

Weighted Fair Queuing

WFQ is a more advanced traffic control technique in which load is shared among servers and network resources according to their capacity. It assigns a “weight” to each resource based on its capacity, and the load balancer sends traffic to each resource in proportion to these weights.

Working

Incoming traffic is allocated to resources in proportion to their weights, so resources with higher weights receive more of it. This maximizes resource efficiency and keeps low-capacity servers from being overloaded while high-capacity servers sit idle.
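Weight-proportional distribution of this kind can be sketched with a smooth weighted round-robin selection. Note that this is a swapped-in technique chosen for brevity: it reproduces the proportional sharing described above, but it is not a full WFQ packet scheduler, which orders individual packets by computed finish times. The function name and the example weights are hypothetical.

```python
def weighted_schedule(weights, picks):
    """Choose resources so that each is picked in proportion to its weight.

    weights: mapping of resource name -> positive integer weight
    picks: number of selections to make
    """
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    order = []
    for _ in range(picks):
        # Every resource earns credit equal to its weight each round.
        for name, weight in weights.items():
            current[name] += weight
        # The resource with the most credit is chosen and pays back the total.
        chosen = max(current, key=current.get)
        current[chosen] -= total
        order.append(chosen)
    return order
```

With weights `{"a": 3, "b": 1}`, four picks give `["a", "a", "b", "a"]`: the higher-capacity resource handles three quarters of the traffic, and its turns are interleaved rather than bunched together.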

Benefits

- Efficiency: WFQ optimizes the use of network resources by directing more traffic toward high-capacity resources.

- Performance Optimization: It improves system efficiency by routing requests toward the best-equipped servers, thereby decreasing response time.

Congestion Control as a QoS Measure

Quality of Service (QoS) is a fundamental principle of network management that assists in congestion control. It lets the network administrator give preference to certain transmissions so that important applications get the resources and performance they require. In this section, we explore QoS in the context of prioritizing traffic and two key QoS models: Differentiated Services (DiffServ) and Integrated Services (IntServ).

Prioritizing Traffic

Traffic prioritization ranks network data by importance so that critical information gets better treatment than routine data, even during network congestion.

Working

Network devices give different classes of traffic different degrees of priority. For instance, they assign high priority to real-time services like VoIP or video conferencing in order to minimize delay. In contrast, noncritical data such as e-mail is assigned low priority.
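Strict priority scheduling of this kind can be sketched with a heap: the device always transmits the highest-priority packet it holds, and packets of equal priority leave in arrival order. The class name, the numeric priority values, and the packet labels are illustrative assumptions.

```python
import heapq
from itertools import count


class PriorityScheduler:
    """Strict-priority packet queue; a lower number means higher priority."""

    def __init__(self):
        self._heap = []
        self._sequence = count()  # tie-breaker keeps FIFO order within a class

    def enqueue(self, packet, priority):
        heapq.heappush(self._heap, (priority, next(self._sequence), packet))

    def dequeue(self):
        """Return the most urgent packet, or None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]
```

One known drawback of strict priority is that a steady stream of high-priority traffic can starve the lower classes, which is why real devices often combine it with rate limits or weighted scheduling.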

Benefits

- Optimized Performance: In busy networks, traffic is prioritized such that key applications still function optimally.

- Enhanced User Experience: Users of real-time applications experience lower latency and, therefore, better service.

Differentiated Services (DiffServ) and Integrated Services (IntServ)

Two models for implementing QoS are DiffServ and IntServ:

- DiffServ (Differentiated Services): DiffServ is a simple, scalable QoS model. It classifies packets and marks them with a Differentiated Services Code Point (DSCP) in the packet header, so that routers can make forwarding decisions based on these markings.

- IntServ (Integrated Services): The IntServ QoS model reserves network resources end to end for every flow, using a signaling protocol such as the Resource Reservation Protocol (RSVP).
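For the DiffServ side, the marking step can be sketched as follows. The DSCP occupies the upper six bits of the IP header byte formerly known as Type of Service, so the code point is shifted left by two bits before being written. The helper names are hypothetical, and `mark_socket` assumes a platform whose socket module exposes `IP_TOS`; whether the marking is honored depends on the operating system and on the network.

```python
import socket

# Commonly used code points (decimal values).
DSCP_EF = 46      # Expedited Forwarding, typically used for voice
DSCP_AF41 = 34    # Assured Forwarding class 4, low drop precedence
DSCP_DEFAULT = 0  # Best effort


def dscp_to_tos(dscp):
    """Convert a 6-bit DSCP value into the 8-bit header byte (ECN bits left at 0)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0..63")
    return dscp << 2


def mark_socket(sock, dscp):
    """Ask the OS to mark packets sent on this IPv4 socket with the given DSCP."""
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
```

An application sending voice traffic might call `mark_socket(sock, DSCP_EF)` once after creating its UDP socket; routers along the path then decide how to treat the marked packets.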

Benefits

DiffServ is better suited to large-scale networks, including the Internet, as it requires less signaling and resource reservation. IntServ, on the other hand, suits smaller networks that need finer-grained resource control.

Modern Approaches and Protocols

As networking has evolved, new approaches and protocols for congestion control have emerged to meet contemporary data transmission needs. This section explores three key modern developments: software-defined networking, congestion control in cloud environments, and QUIC.

Software-Defined Networking (SDN)

SDN is an approach to networking in which software is used to manage the network and help avoid congestion. Unlike conventional network architecture, SDN decouples the control plane from the data plane, thereby enabling coordinated control and flexible networking.

Working

SDN separates the control plane, which specifies network policies, from the data plane, which forwards data. A centralized SDN controller dynamically configures network devices to suit changing traffic patterns and congestion, resulting in efficient utilization of resources.
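Because the controller has a network-wide view, one decision it can make is to steer a new flow onto the least congested of several candidate paths. The toy sketch below illustrates that idea only; the function, the link names, and the utilization figures are hypothetical and do not correspond to any real controller API.

```python
def pick_path(paths, link_utilization):
    """Choose the candidate path whose busiest link is least utilized.

    paths: list of paths, each a sequence of link names
    link_utilization: mapping of link name -> utilization between 0.0 and 1.0
    """
    def bottleneck(path):
        # A path is only as good as its most heavily loaded link.
        return max(link_utilization[link] for link in path)

    return min(paths, key=bottleneck)
```

Given two paths whose busiest links run at 0.9 and 0.6 utilization, the function returns the second path; a controller would then install forwarding rules for it on the switches along the way.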

Benefits

- Dynamic Adaptation: SDN allows the network configuration to be changed dynamically in order to handle congestion as it happens.

- Efficiency: Finer control over resources improves network performance and reduces congestion within the organization.

Congestion Control in Cloud Environments

As companies move their systems into the cloud, effective traffic control in cloud environments has become essential. Cloud providers and users have developed a number of solutions to address this.

Working

Cloud-based congestion control draws on SDN concepts to provision resources dynamically in a virtualized environment. It involves prioritizing traffic, managing virtualized network segments, and applying congestion control algorithms.

Benefits

- Scalability: Cloud environments can scale resources dynamically as required, which helps avoid the congestion that high traffic would otherwise cause.

- Resource Allocation: Efficient congestion control in cloud environments gives every application its fair share of resources so that it can run effectively.

QUIC (Quick UDP Internet Connections)

QUIC was originally designed by Google as a transport protocol to speed up the web. It runs over UDP while providing reliability and congestion control comparable to TCP, giving users a faster and more reliable internet experience.

Working

QUIC reduces the number of round trips needed for the initial handshake, so data transfer starts sooner. It builds encryption into the handshake itself, which saves round trips and thereby reduces latency. QUIC also uses stream multiplexing and packet pacing for enhanced efficiency.

Benefits

- Faster Data Transfer: QUIC minimizes connection establishment time, which makes it suitable for low-latency applications.

- Improved Congestion Handling: Several of QUIC’s features help with congestion control, allowing effective data transfer over saturated networks.

Application of Congestion Control in the Real World

Congestion control is more than a theoretical consideration: it has a real bearing on problems across many networking scenarios. Here are some notable examples of congestion control implementation:

Content Delivery Networks (CDNs)

CDNs such as Akamai and Cloudflare use their own congestion control schemes to ensure efficient delivery of content. In addition, content is replicated on servers placed strategically around the globe, which eases the global distribution of information.

Video Streaming Services

Netflix and YouTube users can watch a show without interruption because these services build in congestion handling. They adapt playback quality to the state of the network in order to avoid buffering and stuttering.
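The adaptation step can be sketched as choosing the highest quality level that fits within the measured throughput, with some headroom. This is a generic illustration rather than the algorithm of any particular service; the function name, the safety factor, and the bitrate values used in the example are assumptions.

```python
def choose_bitrate(measured_kbps, ladder_kbps, safety_factor=0.8):
    """Pick the highest bitrate that fits within a fraction of measured throughput.

    measured_kbps: recent throughput estimate for this viewer
    ladder_kbps: available encodings, in kilobits per second
    safety_factor: fraction of the estimate the player is willing to use
    """
    budget = measured_kbps * safety_factor
    affordable = [rate for rate in sorted(ladder_kbps) if rate <= budget]
    # Fall back to the lowest quality when even that exceeds the budget.
    return affordable[-1] if affordable else min(ladder_kbps)
```

With a ladder of 235, 750, 1750, 3000, and 5800 kbps and a measured throughput of 4000 kbps, the function selects 3000 kbps, leaving headroom so that a small dip in throughput does not immediately cause buffering.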

Online Gaming

Online games depend on low-latency networks for a seamless gameplay experience. Developers and platform designers prioritize gaming-related traffic so that players get an uninterrupted experience even when the internet or the local network is congested.

Voice over IP (VoIP)

Popular VoIP systems such as Skype and Zoom maintain voice and video quality by incorporating congestion control. They respond to changing network conditions to minimize interference and delay without seriously degrading speech intelligibility.

Data Centers

Data centers, including those run by tech giants such as AWS and Google Cloud, rely on congestion control mechanisms. They handle enormous volumes of data, so good congestion control is needed to minimize the chance of blockage or outages caused by traffic overload.

Mobile Networks

Mobile network providers use congestion control strategies to handle data traffic effectively. Tactics such as load balancing and QoS allow network capacity to be shared fairly among the different types of traffic it carries, including phone calls and video.

Conclusion

In conclusion, congestion control techniques are an integral part of network operation and are continuously evolving. They are essential for smooth operation, dependability, and fair treatment of all users, leading to the best online experience. Networking has progressed from conventional open-loop and closed-loop control to current innovations such as SDN and QUIC in order to cope with the growing data transmission demands of communication services.