Speech Recognition in Python

What is Speech Recognition?

Speech recognition is the automatic recognition of human speech by a machine. It is one of the most important tasks in the domain of human-computer interaction. Most of us have already experienced the power of speech recognition through voice assistants such as Alexa, Siri, or Google Assistant.

Speech recognition has many applications, ranging from the automatic transcription of speech data (for example, voicemails) to interacting with robots through speech.

In this tutorial, we will learn more about speech recognition and develop a simple speech recognition application capable of recognizing speech from audio files.

But before we start, let us understand what we mean by speech and the kinds of tasks that a speech processing system performs.

Let us begin by defining speech. Speech is one of the most fundamental means of human communication. The basic idea behind speech processing is to enable interaction between a human and a computer/machine.

A speech processing system performs three main tasks:

- First, speech recognition helps the computer/machine capture the words, phrases, and sentences that we say.

- Second, natural language processing helps the computer/machine understand what we say.

- Finally, speech synthesis helps the computer/machine speak.

Now, let us turn to the main concept, speech recognition: the process of recognizing the words, phrases, and sentences spoken by human beings. There are multiple libraries available in Python for speech recognition. We will be using one of the simplest of them, the SpeechRecognition library.

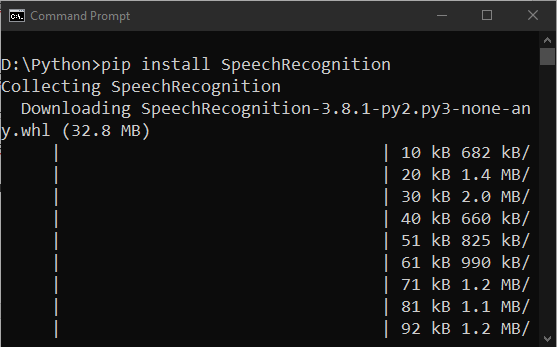

Installation of SpeechRecognition Library

In order to install the SpeechRecognition Library, we have to execute the following command in a command shell or terminal:

$ pip install SpeechRecognition
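Before moving on, it can help to confirm that the package is importable from the interpreter we plan to use. The following is a minimal sketch that relies only on the standard library. The helper name is_installed is hypothetical; speech_recognition is the module name under which the package is imported later in this tutorial.

```python
import importlib.util


def is_installed(module_name):
    """Return True if the import system can locate `module_name`."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # The SpeechRecognition package is imported as `speech_recognition`.
    if is_installed("speech_recognition"):
        print("SpeechRecognition is available.")
    else:
        print("SpeechRecognition is missing; run: pip install SpeechRecognition")
```

If the check reports the package as missing even after installation, pip has most likely installed it for a different Python interpreter than the one running the script.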

Speech Recognition from Audio Files

Now that we have installed the SpeechRecognition library, let us start by converting speech from an audio file to text. We will be using an audio file that can be downloaded from this link. Please download this audio file to the local file system, or use an audio file of your own.

As the first step, we import the library required for the project. We only need the speech_recognition library that we installed earlier.

import speech_recognition as speech_rcgn

We use the Recognizer class available in the speech_recognition module to convert speech into text. The Recognizer class provides several methods for this purpose, each of which relies on a different underlying API. These methods are listed below:

| S. No. | Method | Description |
|---|---|---|
| 1 | recognize_google() | This method utilizes Google Speech API. |
| 2 | recognize_google_cloud() | This method utilizes Google Cloud Speech API. |
| 3 | recognize_bing() | This method utilizes Microsoft Bing Speech API. |
| 4 | recognize_ibm() | This method utilizes IBM Speech to Text API. |
| 5 | recognize_houndify() | This method utilizes Houndify API by SoundHound. |
| 6 | recognize_sphinx() | This method utilizes PocketSphinx API. |

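Among the methods listed above, only recognize_sphinx() can convert speech to text offline, provided that the PocketSphinx package is installed. The other methods send the audio to a web service and therefore need an internet connection.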
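Since every method in the table lives on the same Recognizer object, the backend can be chosen by name at run time. The following is a minimal sketch of that idea. The BACKENDS mapping and the get_backend helper are hypothetical names introduced here for illustration; only the method names themselves come from the table above. The lookup uses getattr(), so a method that is absent from the installed version of the library produces a clear error instead of a crash later on.

```python
# Friendly names mapped to the Recognizer method names from the table above.
BACKENDS = {
    "google": "recognize_google",
    "google_cloud": "recognize_google_cloud",
    "bing": "recognize_bing",
    "ibm": "recognize_ibm",
    "houndify": "recognize_houndify",
    "sphinx": "recognize_sphinx",
}


def get_backend(recognizer, name):
    """Return the bound recognition method registered under `name`."""
    if name not in BACKENDS:
        raise KeyError(f"unknown backend: {name!r}")
    method = getattr(recognizer, BACKENDS[name], None)
    if not callable(method):
        # The installed library version does not provide this method.
        raise NotImplementedError(f"{BACKENDS[name]}() is not available")
    return method
```

With a Recognizer instance named rec, get_backend(rec, "sphinx") would return rec.recognize_sphinx, which can then be called with an AudioData object. Several of the online backends are likely to need credentials passed as extra arguments, so the library documentation should be checked before switching away from recognize_google() or recognize_sphinx().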

To recognize the speech in an audio file, we create an object of the AudioFile class provided by the speech_recognition module. The path of the audio file that we want to convert from speech to text is passed to the AudioFile class constructor, as shown in the script below:

sample = speech_rcgn.AudioFile('D:/Python/my_audio_f.wav')

In the above snippet of code, we can replace the path with that of the audio file we want to transcribe.
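Before handing a file to AudioFile, it can be useful to confirm that it is a readable WAV file and to find out how long it is. The following is a small sketch that uses only the wave module from the standard library. The helper name wav_info is hypothetical, and the approach assumes an uncompressed PCM WAV file; other formats would need a different check.

```python
import wave


def wav_info(path):
    """Return (sample_rate_hz, channels, duration_seconds) for a PCM WAV file."""
    with wave.open(path, "rb") as wav_file:
        rate = wav_file.getframerate()
        frame_count = wav_file.getnframes()
        # Duration is the number of frames divided by frames per second.
        return rate, wav_file.getnchannels(), frame_count / rate
```

Calling wav_info('D:/Python/my_audio_f.wav') returns the sample rate, the number of channels, and the length of the recording in seconds. If the file is not a valid WAV file, wave.open() raises wave.Error.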

Now, we will use the recognize_google() method to transcribe the audio file. However, the recognize_google() method expects an AudioData object from the speech_recognition module as its argument. We can use the record() method of the Recognizer class to read the audio file into an AudioData object. To do so, we create a Recognizer instance, open the AudioFile object in a with statement, and pass the opened file to the record() method, as shown in the script below:

rec = speech_rcgn.Recognizer()

with sample as audiofile:

    audiocontent = rec.record(audiofile)

To check the type of the audiocontent variable, we can use the built-in type() function, as shown below:

print(type(audiocontent))

Output:

<class 'speech_recognition.AudioData'>

Once we have checked the type of the audiocontent object, we can pass it to the recognize_google() method of the Recognizer class and check whether the audio sample is converted into text.

The execution for the whole program can be seen in the example shown below:

Example:

import speech_recognition as speech_rcgn

rec = speech_rcgn.Recognizer()

sample = speech_rcgn.AudioFile('D:/Python/my_audio_f.wav')

with sample as audiofile:

    audiocontent = rec.record(audiofile)

print(type(audiocontent))

print(rec.recognize_google(audiocontent))

Output:

<class 'speech_recognition.AudioData'>

perhaps this is what is PR agency is are their dignity schedule III was much is 50 feet then the choreographer missed arbitrated never go back into acquiescence with things as they find it in misery and isolation around us in this instance such personal purchase for a luxury cases of severe and advisement say he is a horse days Ranjan or he may have a point that contains between fuel prices straight line which symbolises uniqueness the circuit universality of small hole in wall with client has more subtle implications in passport after expiry marketing program manufacturers taking initiative of the costs involved cricket overlapping twisted widely spaced to you always navigate like this

In the above example, we have imported the speech_recognition module and instantiated its Recognizer class. We have used the AudioFile class to specify the path of the audio file, and the record() method to read the contents of the file into an AudioData object. We have then used the recognize_google() method, which sends the audio to the Google API for transcription. As the output shows, the audio has been converted into text. We can also observe that the audio file has not been transcribed 100% correctly, yet the accuracy is quite reasonable.
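Because recognize_google() depends on a web service, the call can fail. The following sketch wraps the call and handles the two exceptions that the speech_recognition module defines for this purpose: UnknownValueError, raised when no words could be made out, and RequestError, raised when the service cannot be reached or rejects the request. The helper name transcribe_or_none is hypothetical. The language keyword is passed through to recognize_google(); the default value "en-US" used here is an assumption and should be adjusted to match the recording.

```python
def transcribe_or_none(recognizer, audio, language="en-US"):
    """Return the transcript of `audio`, or None if transcription fails."""
    # Imported here so that the helper can be defined even on a machine
    # where the SpeechRecognition package has not been installed yet.
    import speech_recognition as sr

    try:
        return recognizer.recognize_google(audio, language=language)
    except sr.UnknownValueError:
        # The service responded but could not make out any words.
        print("The speech could not be understood.")
    except sr.RequestError as error:
        # The service was unreachable or rejected the request.
        print(f"The request failed: {error}")
    return None
```

In the example above, the final print statement could then be replaced with print(transcribe_or_none(rec, audiocontent)), so that a network problem prints a message instead of ending the script with a traceback.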

Setting Up Duration and Offset Values

The speech_recognition module also allows developers to transcribe a specific segment of the audio file instead of the whole recording. For example, suppose we want to transcribe only the first 15 seconds of the audio sample. In that case, we pass 15 as the value of the duration parameter of the record() method.

Now let’s have a look at the example shown below:

Example:

import speech_recognition as speech_rcgn

rec = speech_rcgn.Recognizer()

sample = speech_rcgn.AudioFile('D:/Python/my_audio_f.wav')

with sample as audiofile:

    audiocontent = rec.record(audiofile, duration = 15)

print(rec.recognize_google(audiocontent))

Output:

perhaps this is what is the average in his are their dignity schedule III was much is 50 feet then the choreographer missed arbitrated never go back into acquiescence with things as they work finds it in history and displays

In the above example, we have passed the duration parameter to the record() method with a value of 15. Thus, as an output, we get the transcription of the first 15 seconds of the audio sample.
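The duration parameter can also be used to read a long file piece by piece. The following is a sketch under one important assumption: that record() advances through the open file, so that each call continues from where the previous one stopped. This should be verified against the installed version of the library. The helper name record_in_chunks and its parameters are hypothetical, and total_seconds has to be supplied by the caller.

```python
import math


def record_in_chunks(recognizer, source, total_seconds, chunk_seconds=15):
    """Read an already opened audio source as consecutive AudioData chunks."""
    chunk_count = math.ceil(total_seconds / chunk_seconds)
    # Assumption: each record() call resumes where the previous call stopped.
    return [
        recognizer.record(source, duration=chunk_seconds)
        for _ in range(chunk_count)
    ]
```

The helper has to be called inside the with block, for example as record_in_chunks(rec, audiofile, total_seconds=60), and each returned chunk can then be passed to recognize_google(). A chunk boundary may fall in the middle of a word, so the transcript near the boundaries can be less accurate.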

Similarly, we can skip part of the audio file from the beginning with the help of the offset parameter. The offset parameter is another argument of the record() method, and it crops the audio file from the start. For example, if we do not want to transcribe the first 5 seconds of the audio, we pass 5 as the offset value. As a result, the first 5 seconds are skipped, and the rest of the audio file is transcribed.

Let us consider the following example for the same:

Example:

import speech_recognition as speech_rcgn

rec = speech_rcgn.Recognizer()

sample = speech_rcgn.AudioFile('D:/Python/my_audio_f.wav')

with sample as audiofile:

    audiocontent = rec.record(audiofile, offset = 5, duration = 15)

print(rec.recognize_google(audiocontent))

Output:

10 matches 50 feet in a choreographer missed arbitrated never settle back into acquiescence with things as they work finds it in an industry and isolation Raunak in this instance such personal purchase for a luxury

In the above example, we have passed the offset parameter with a value of 5 to the record() method, together with a duration of 15. Thus, as an output, we get a transcription that skips the first 5 seconds of the audio file and covers the 15 seconds that follow.
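The way offset and duration combine can be expressed as simple arithmetic: the segment starts at offset and ends duration seconds later, or at the end of the file, whichever comes first. The following sketch computes that window. The helper name transcribed_window is hypothetical, and the real boundaries are only approximate because the library reads the audio in small buffers.

```python
def transcribed_window(total_seconds, offset=0, duration=None):
    """Return the (start, end) times in seconds covered by record()."""
    # The window cannot start beyond the end of the file.
    start = min(offset, total_seconds)
    if duration is None:
        # Without a duration, everything after the offset is read.
        return start, total_seconds
    # The duration is counted from the offset, not from the start of the file.
    return start, min(start + duration, total_seconds)


print(transcribed_window(60, offset=5, duration=15))  # (5, 20)
print(transcribed_window(10, offset=5, duration=15))  # (5, 10)
```

For a 60-second file, the example above therefore covers roughly seconds 5 to 20 of the recording.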

How to handle Noise?

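For various reasons, an audio file can contain noise, and this noise can affect the overall transcription quality. To deal with noise, the Recognizer class provides a method called adjust_for_ambient_noise(), which takes the opened audio source (here, the AudioFile object inside the with block) as an argument. The method reads the beginning of the source, one second by default, to calibrate the recognizer to the noise level of the audio. That portion of the file is consumed, so a subsequent record() call starts after it.

Let us consider the following example, which demonstrates the use of this method: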

Example:

import speech_recognition as speech_rcgn

rec = speech_rcgn.Recognizer()

sample = speech_rcgn.AudioFile('D:/Python/my_audio_f.wav')

with sample as audiofile:

    rec.adjust_for_ambient_noise(audiofile)

    audiocontent = rec.record(audiofile)

print(rec.recognize_google(audiocontent))

Output:

Kesariya pareshani is are their dignity have you thought it was much is 50 feet then the choreographer missed arbitrated never go back into acquiescence with things as they work finds it in misery and isolation around us in this instance such personal purchase for a luxury cases of severe and advisement say he is a horse days Ranjan or he may have a point that contains between fuel prices straight line which symbolises uniqueness the circuit universality of small hole in wall with client has more subtle implications in passport after expiry marketing program manufacturers take an initiative of the costs involved cricket overlapping twisted widely spaced to you always navigate like this

In the above example, we have called the adjust_for_ambient_noise() method before recording in order to handle noise in the audio file. The script has then transcribed the speech into text and printed it. The output is quite similar to what we got in the first example because the audio file contains little noise to begin with.
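Because the calibration step consumes the start of the file, it can be worth shortening it when the recording begins with speech straight away. The following sketch wraps the two calls from the example above and passes a shorter calibration time through the duration parameter of adjust_for_ambient_noise(). The helper name calibrate_and_record is hypothetical, and the value of 0.5 seconds is an assumption to be tuned for each recording.

```python
def calibrate_and_record(recognizer, source, calibration_seconds=0.5):
    """Calibrate on the start of `source`, then record the remainder."""
    recognizer.adjust_for_ambient_noise(source, duration=calibration_seconds)
    # The calibration audio has been consumed, so record() starts after it.
    return recognizer.record(source)
```

Inside the with block, audiocontent = calibrate_and_record(rec, audiofile) would replace the two separate calls. A shorter calibration loses less speech at the beginning but gives the recognizer less audio from which to estimate the noise level.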

Conclusion

Speech recognition has numerous applications in the domain of human-machine interaction, the most important of which is automatic speech transcription. Using libraries and services such as PyAudio, SpeechRecognition, and the Google Speech API, we can build a speech-to-text application that not only transcribes audio files but can also transcribe live speech from a microphone, in the spirit of assistants like Alexa, Siri, and Google Assistant. Moreover, we can use methods like adjust_for_ambient_noise() to handle noise in the audio file.